Neural Network Quantization for Overhead Reduction in AIML Model Sharing

Explore the implementation of neural network quantization for reducing communication overhead in AIML model sharing. Discussing the sharing of model parameters between STAs and the benefits of quantization in reducing computational complexity and storage requirements. Learn about different categories of neural network quantization techniques and their implications on model sharing efficiency.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

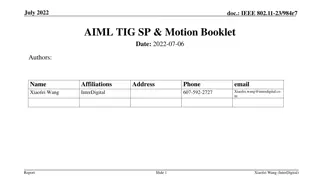

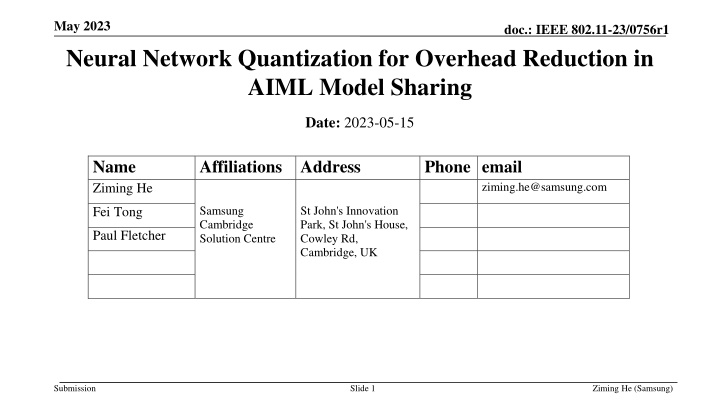

May 2023 Neural Network Quantization for Overhead Reduction in AIML Model Sharing doc.: IEEE 802.11-23/0756r1 Date: 2023-05-15 Name Ziming He Affiliations Address Samsung Cambridge Solution Centre Phone email ziming.he@samsung.com St John's Innovation Park, St John's House, Cowley Rd, Cambridge, UK Fei Tong Paul Fletcher Submission Slide 1 Ziming He (Samsung)

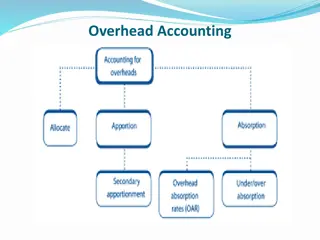

May 2023 doc.: IEEE 802.11-23/0756r1 Background and introduction A use case for AIML model sharing has been defined in [1]. AIML model sharing seems to have the most significant standard impact and its technical feasibility needs to be well discussed [2]. It has been discussed in [2] how to share AIML model parameters between STAs. Neural network model is a key category of AIML models under discussion in AIML TIG (e.g., [3]-[6]) In this contribution, we will discuss what parameters can be shared for neural network based AIML model. Submission Slide 2 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Neural Network Model Sharing A feedforward neural network: Weighting and bias coefficients (W, b) is trained at a STA The STA may need to send the trained coefficients to the other STAs (i.e., model sharing) The format of the coefficients is usually in single- precision floating-point (32-bits), thus requires a lots of communication overhead, especially for large models Similar for other models, such as convolutional and recurrent neural network models ?0 ??= ???? 1?? 1 + ??,? 1,? Submission Slide 3 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Neural Network Quantization for AIML Model Sharing Neural network quantization is a well-known AI technique to reduce computational complexity and model storage requirement [7], we propose to use the technique for communication overhead reduction for AIML model sharing. Submission Slide 4 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Fundamentals of Neural Network Quantization x: a single-precision floating point coefficient to be quantized xint: quantized integer from x b: bit width of xint (<32) s: scaling factor z: zero-point After quantization, STAs can share xint, s and z, stead of share x Submission Slide 5 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Categories of Neural Network Quantization Post-training quantization (PTQ) Very effective and fast to implement because it does not require retraining of the network with labelled data. It may not be enough to mitigate the large quantization error introduced by low-bit quantization. Quantization-aware training (QAT) Treats scaling factor and zero point as learnable parameters to be optimized during training. Aiming for low-bit quantization, such as 4-bits and below. Submission Slide 6 Ziming He (Samsung)

May 2023 Example: AIML Beamforming Using Quantized Autoencoder doc.: IEEE 802.11-23/0756r1 AIML training phase Legacy beamforming Submission Slide 7 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Model Sharing Overhead Reduction for Autoencoder- based Beamforming Scheme in [5] Method Required overhead per model sharing (bits) Encoder without quantization (single-precision floating point) 104192 (3256 coefficients) Encoder with 8-bits-PTQ on weighting coefficients 27456 Model sharing overhead during training is reduced by 74% Submission Slide 8 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 PER Performance with Autoencoder-based Beamforming MCS11 Same PER performance with and without quantization MCS9 MCS7 MCS4 Submission Slide 9 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Standard impact Signalling and protocol related to model parameters sharing, such as floating- point/integer neural network coefficients and other quantization parameters (e.g., scaling factor, zero point) Submission Slide 10 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Summary We have proposed to use neural network quantization for communication overhead reduction in AIML model sharing We have given an example of using quantized autoencoder for training overhead reduction in AIML beamforming Submission Slide 11 Ziming He (Samsung)

May 2023 doc.: IEEE 802.11-23/0756r1 Question By considering existing use case for AIML Model Sharing in [1], do you think this contribution should be considered as a separate use case for AIML TIG or merged into [1]? Submission Slide 12 Ziming He (Samsung)

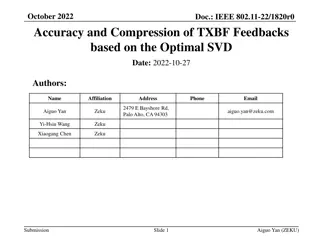

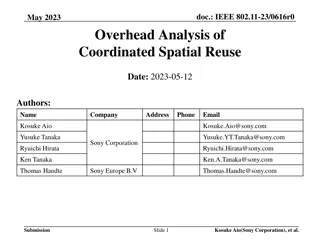

May 2023 doc.: IEEE 802.11-23/0756r1 References [1] 11-23/0050r3, Proposed Technical Report Text for AIML Model Sharing Use case [2] 11-23/0397r1, Technical Feasibility Analysis of ML Model Sharing [3] 11-22/1934r5, Proposed IEEE 802.11 AIML TIG Technical Report Text for the CSI Compression Use Case [4] 11-23/0290r1, Study on AI CSI Compression [5] 11-23/0755r0, AIML Assisted Complexity Reduction For Beamforming CSI Feedback Using Autoencoder [6] 11-23/0750r0, Discussions on Neural Network Model Sharing for WLAN [7] M. Nagel, et al., A White Paper on Neural Network Quantization , Qualcomm AI Research, Jun. 2021. Submission Slide 13 Ziming He (Samsung)