Oracle 12.2 Multi-Tenancy Features

Nordea is exploring Oracle 12.2 new features for Multi-Tenancy to leverage lower footprints. Sessions cover topics like creating pluggable databases, backup/restore, DataGuard setup, and security considerations. Learn how Nordea is maximizing the potential of Multi-Tenancy with an ULA license.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Oracle 12.2 New features Multi-Tenancy Pitfalls and promises In order to harvest promised lower foot-prints on Multi-Tenancy, Nordea is on the way to use this feature from 12.2 and onwards. We have however found a few areas where some pieces in the puzzle are still missing. Thus this session. 23rd of October 2017 6thof November 2018 Martin Jensen - Nordea

Agenda Overview Create Pluggable database / Cloning Create / start services for specific PDBs Backup / Restore DataGuard setup Resource Profiles Security More Database Parameters AWR for individual Pluggable Databases A small snag

Overview Oracle 12.2 have removed most restrictions from 12.1 for Multi-Tenancy, so now is the time where Nordea can fully utilize this new feature area, where Nordea currently holds an ULA license. Basically a few large rac-enabled container databases are being populated with a number of pluggable databases. A container database (CDB) is a database of containers (each with a con_id). In each of these containers a pluggable database (PDB) may be located.

Create Pluggable Database / Cloning A pluggable database may be created in a number of different ways Creating a new Pluggable database Through a PLUGIN potentially following a PLUGOUT (not to environments with DG) Copy a Pluggable database / Clone (not to environments with DG) Refresh a PDB Clone (not to environments with DG) Relocate a PDB from one container to another (not to environments with DG) Create a thin Snapshot Clone of a PDB (Only on Exadata using ASM snapshot volumes) Convert a non CDB (old style) database to a Multi Tenancy with a PDB

Create Pluggable Database / Cloning Creating a new Pluggable database From the root of a container database (CDB$ROOT) it is possiblle to create a new pluggable database, internally using the SEED database as the template: CREATE PLUGGABLE DATABASE <pdb_name> ADMIN USER <pdb_admin_name> IDENTIFIED BY <passwd> ROLES=(CONNECT, DBA); ALTER PLUGGABLE DATABASE <pdb_name> OPEN INSTANCES = ALL; ALTER SESSION SET CONTAINER = <pdb_name>; In Nordea the <pdb_admin_name> account name is chosen to be PDB_ADMIN with connect, resource, dba roles. If you are using SQLplus then remember to set the prompt to tell in which container you are working: https://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:446220075876

Create Pluggable Database / Cloning Copy a Pluggable database from one container database to another without standby. Version 12.2 does allow a clone of a pluggable database to be created from one container database to another (with some restrictions) even as the source pluggable database is in READ WRITE mode and being used. The create pluggable database will (when it s opened) have a consistent content from the source pluggable from when all the data have been copied over to the new pluggable. CREATE USER c##remote_clone_user IDENTIFIED BY <passwd> CONTAINER=ALL; GRANT CREATE SESSION, CREATE, SYSOPER, CREATE PLUGGABLE DATABASE TO c##remote_clone_user CONTAINER=ALL; Create a database link in the target_cdb container pointing to the source_cdb, and test the link. Note that there seems to be an issue using the scan listener in this database link, which is why the VIP address is used (SR 3-15433875101: PDB Hot Clone fails with TNS error). CREATE DATABASE LINK clone_link_to_<cdb> < CONNECT TO c##remote_clone_user IDENTIFIED BY <passwd> USING '(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=<host>- vip.<domain>)(PORT=1521)))(CONNECT_DATA=(SERVICE_NAME=<source_cdb>.<domain>.n et)))';

Create Pluggable Database / Cloning Copy a Pluggable database from one container database to another do the copy Create the target_pdb as a clone of the source_pdb, without having to switch the source_pdb into READ ONLY mode, and take advantage of our usage on Oracle Managed Files (OMF). CREATE PLUGGABLE DATABASE <target_pdb> FROM <source_pdb>@<clone_link>; ALTER PLUGGABLE DATABASE <target_pdb> OPEN INSTANCES = (<list of instances>); Check for possible errors: select * from PDB_PLUG_IN_VIOLATIONS where name = '<target_pdb>' order by time; There will probably be some Database option <option> mismatch: PDB installed version 12.2.0.1.0. CDB installed version NULL errors, which may be ignored due to the following MOS: "OPTION WARNING Database option mismatch: PDB installed version NULL" in PDB_PLUG_IN_VIOLATIONS (Doc ID 2020172.1) CDB:CLONE REMOTE PDB BY DB LINK FAILED WITH ORA-17628/ORA-19505 (Doc ID 1592033.1) Create Pluggable Database using Database Link Fails with ORA-65169 ORA-17628 ORA-19505 (Doc ID 2366828.1)

Create Pluggable Database / Cloning Refresh a PDB Clone in a container database with no standby. It is possible to create the clone of a PDB, and then at a later stage refresh the clone to get the latest content of the source pluggable database pushed to the clone. The procedure is very similar with normal cloning. CREATE PLUGGABLE DATABASE <target_pdb> FROM <source_pdb>@<clone_link> REFRESH MODE MANUAL; This may be a lengthy operation as large volumes of data would potentially need to be copied from one cluster to another. On the positive side however, the source PDB is still servicing the applications. So after the initial cloning has finished, it may be preferable to do a refresh to know more about the actual content in the cloned database. ALTER SESSION SET CONTAINER = <target_pdb>; ALTER PLUGGABLE DATABASE <target_pdb> REFRESH; Before the new clone can be opened it need to be disconnected from the refresh mode: ALTER PLUGGABLE DATABASE <target_pdb> REFRESH MODE NONE; ALTER SESSION SET CONTAINER = CDB$ROOT; ALTER PLUGGABLE DATABASE <target_pdb> OPEN INSTANCES = (<list of instances>);

Create Pluggable Database / Cloning Relocate a PDB from one container database to another container database with no standby. It is possible to combine the previous hot clone mechanism to actually move a pluggable database from one container database to a different one, even in different clusters using the same kind of database link (clone_link) as used before: CREATE PLUGGABLE DATABASE <target_pdb> FROM <source_pdb>@clone_link RELOCATE; This will leave the <target_pdb> in the RELOCATING state, with all content from the <source_pdb> from the time the relocate operation ends. In order to open the relocated PDB, transfer the last minute changes and drop the old PDB, we need to execute the open request: ALTER PLUGGABLE DATABASE <target_pdb> OPEN INSTANCES = (<list of instances>);

Convert a non CDB db to a MT It is possible (but irreversible) to convert a non CDB (old style) database to a pluggable database in a container database. 1. Set the database in read-only mode in 12.1 restriction removed in 12.2 2. Create the manifest (xml file) using dbms_pdb.describe 3. Copy / backup the database files to the right directory 4. reopen the database for use if needed 5. Plugin the db as PDB into a root container using the manifest 6. Cleanup SYS catalog - run $ORACLE_HOME/rdbms/admin/non_cdb_to_pdb.sql irreversible (resumable in 12.2) we will need to protect our self with a backup. These excellent links from Mike Dietrich walks through this procedure: https://mikedietrichde.com/2017/06/08/noncdb_to_pdb-sql-take-long/ https://mikedietrichde.com/2017/03/08/converting-an-12-1-non-cdb-and-plug-it-into-an-12-2-cdb/

Create the PDB with other character-set From 12.2 it is possible in a container database to have pluggable databases with different character sets as long as the Container database character set is AL32UTF8 . Unfortunately it is however not possible to specify that changed character set in a create pluggable database statement. So to get a pluggable database with a different character set in a container database, you will have to: - Create a container database with the desired character set. - Create your new pluggable database in that CDB, to get the requested character set. - Unplug / plugin or clone your new PDB to the right CDB See: https://mikedietrichde.com/2017/10/23/can-you-select-a-pdbs-character-set/

Create / start services for specific PDBs It is possible (and recommended) to create and start application specific services for the relevant pluggable databases, as RAC and standby issues to be addressed. srvctl add service -service <explicit_service_name> -db <container_db_name> -role primary - pdb <pdb_name> -preferred "<list of cluster db instances>" srvctl start service -service <explicit_service_name> -db <container_db_name> srvctl status service -service <explicit_service_name> -db <container_db_name> srvctl config service -service <explicit_service_name> -db <container_db_name> # add entry to tnsnames.ora using the explicitely create service name Which services do we have on my PDB: select name, creation_date, pdb, con_id from cdb_services where pdb = 'P_MJTST01_50OI_18100913_S' order by name;

Create / start services for specific PDBs To be able to persist that the same states / accessibility for the different PDBs would be the same after a restart of the container database, it is natural to use the relevant PDB procedures: ALTER PLUGGABLE DATABASE <pdb_name> discard state instances=all; ALTER PLUGGABLE DATABASE <pdb_name> save state instances=all; Unfortunately, the Database Administration manual chapter 41.4.7 Preserving or Discarding the Open Mode of PDBs When the CDB Restarts states that: For a PDB in an Oracle RAC CDB, SAVE STATE and DISCARD STATE only affect the mode of the current instance. They do not affect the mode of other instances, even if more than one instance is specified in the instances clause. So we should probably have all our PDBs started on all instances, and let the database services registered in the cluster to determine where the database sevices are enabled.

Backup / Restore The strategy is to continue to run the Nordea backup procedures at the (container) database level using RMAN, and then to restore pluggable databases (if need be) from the container database backup. This way a new pluggable in a container database will automatically inherit the backup characteristics. And a pluggable database may be restored without disturbing other pluggable databases in the same container database. Actually a full container database backup will group tablespaces from the same PDB into the same backup pieces in order to make PDB restore faster. Newly cloned pluggable databases with copied tablespaces, will have their first backup at the following incremental level 1 backup. Archivelog backups will not cover these newly created PDBs as default. The general backup procedure remains at the container database level, where backups are taken locally on the site where the physical database is located: Incremental level 0 backup once every weekend Incremental level 1 backup once every day Archivelog backup every hour (or second hour) Block-change-tracking enabled RMAN compression in use ( LOW , OPTIMIZE FOR LOAD TRUE and COMPRESSED ARCHIVELOG)

DataGuard setup At the Container database level force logging must be enabled. This setting is automatically inherited to all the pluggable databases in that container, and cannot be overwritten at the pluggable level. Please observe that the Pluggable database clone and relocate procedures do not work yet in a DataGuard environment

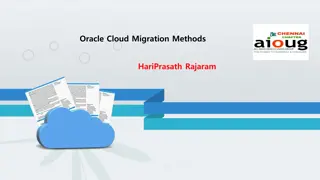

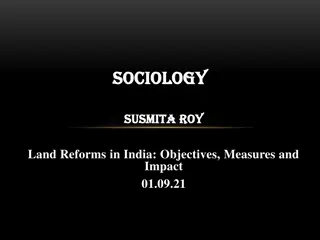

Using MT as a virtualization control This graph shows that it is possible to control the resources given to the PDB, and that it (of course) has a direct impact on performance. When the PDB is getting the same resources as the CDB, the PDB is slightly faster, due to smaller foot-print. We will rather overallocate the PDB resources in a controlled way, than overallocare the resources using a hypervisor Test 2 - 2 million transactions 2 PDBs Test 5 - 5 million transactions 5 PDBs 2500 3500 3000 2000 2500 Seconds Seconds 1500 2000 1500 1000 1000 500 500 0 0 0 1 2 3 4 5 0 1 2 3 4 5 CPUs on each CDB / PDB CPUs on each CDB / PDB T.2.a Exa-CDB T.2.b Exa-PDB T.5.a Exa-CDB T.5.b Exa-PDB T.2.a SOP-CDB T.2.b SOP-PDB T.5.a SOP-CDB T.5.b SOP-PDB

Resource Profiles We define 4 different resource profiles in order to span PDBs from the very large (one PDB in the CDB) to the relatively small where one Container database instance may hold 64 PDBs in total. And we think 4 different resource profiles are manageable: Resourc e Profile SGA Target SGA Min size PGA Target PGA Limit Cpu Count Max pdbs per cdb inst 1162M 4650M 18600M 74401M 581M 2325M 9300M 37200M 150M 602M 2411M 9644M 450M 1806M 7233M 28932M 1 2 4 64 16 4 1 rp1 rp2 rp3 rp4 14 To be adjusted SGA_MIN_SIZE must be equal or less than half the SGA_TARGET. Due to Bug 26160154 the usage of the SGA for a PDB may be bigger that the SGA_TARGET for that PDB and the sum of all SGA s from the PDB s may be bigger than the SGA of the CDB. PGA_AGGREGATE_TARGET is 3 times lower than PGA_AGGREGATE_LIMIT

Resource Profiles cont. It is possible to limit the IOPS and Megabytes per second for each of the PDBs using the max_iops and max_mbps Pluggable init parameters. These parameters are hard to test, because - If the values are too low the kernel ignores them. - All IO activity regarding Redo, DB writer, Archivelog, flashback logs are covered by the container database. - Setting the maximum size of the buffer-cache for a pluggable database is not very easy. See I/O Rate Limits for PDBs 12.2 New feature . (Doc ID 2164827.1)

Resource Profiles cont. It is recommended to create each pluggable database with a reasonable maximum size, and then to allow for extensions when extra storage capacity is needed and acknowledged. Pluggable databases will typically start with a 10Tb limit in order to protect the underlying ASM volume. When needed (possibly after an ORA-65114: space usage in container is too high error), this size may be increased like this from the actual pluggable database: ALTER PLUGGABLE DATABASE <pdb_name> STORAGE (MAXSIZE <size>); Similar settings may protect the local filesystems, covering audit and diag areas, using MAX_AUDIT_SIZE and MAX_DIAG_SIZE

Security Audit settings Each PDB will inherit the basic security settings from the container-database (audit_trail which is set to XML, EXTENDED) to have it s audit records shipped to SPLUNK User accounts and Roles It is possible to create container database wide user accounts and roles. Such phenomena need to be prefixed with C## (default of the COMMON_USER_PREFIX parameter). These common user accounts are immediately available in existing (and coming pluggable databases) in the container database and in the different pluggable databases. These users may get different default tablespaces, other preferences and privileges. But any database objects owned by such COMMON accounts are local to the PDB. Database Profiles Besides the existing database profiles delivered from Oracle (DEFAULT and ORA_STIG_PROFILE) , we have in Nordea the TECHNICAL_USER_PROFILE and the PERSONAL_USER_PROFILE database profiles. They are now called C##TECHNICAL_USER_PROFILE and the C##PERSONAL_USER_PROFILE at the Root container level. From the non-CDB databases we are used to have these database profiles using the NORDEA_VERIFY_FUNCTION password validation function, which is now available at the root container level as well.

AWR for individual Pluggable Databases It is possible to have snaps generated at the Pluggable database level in order to offer AWRs at the container as well as the pluggable database. AWR_PDB_AUTOFLUSH_ENABLED changed from default FALSE to TRUE, at the container level in order to support automatic AWRs per PDB. It is also possible to set this parameter at the PDB level in order to select specific PDBs for AWR support. AWR_SNAPSHOT_TIME_OFFSET change from 0 to 1000000 at the container level. Setting this parameter to 1000000 helps to create AWR snapshots with different offset based on database names and avoids CPU spike in the system. Also on the pluggable database the following workload repository setting need to be applied to automate the snap generation each hour. declare l_dbid number; BEGIN select dbid into l_dbid from cdb_pdbs; DBMS_WORKLOAD_REPOSITORY.MODIFY_SNAPSHOT_SETTINGS ( retention => 11520 -- 8 days, -- setting this value to 0 will disable the snap creation at the PDB level , interval => 60 -- 1 hour , topnsql => 'DEFAULT' , dbid => l_dbid -- dbid for the pdb - ); END;

Traces / tkprof a small snag In 12.2 it is possible from the database kernel to get access to tracefiles for a particular pluggable database through the GV$DIAG_TRACE_FILE and GV$DIAG_TRACE_FILE_CONTENTS views. Note that the GV$DIAG_TRACE_FILE holds a con_id, which is automatically used as a predicate, so that users from one PDB cannot directly see traces from other PDBs. Also note that both views are RAC-enabled and works well regardless which instance is serving the connection. This feature is used by tkprof when the new argument pdbtrace is used. Using this argument, it is no longer needed to have access to the actual database server filesystem, but instead to have privileged access to the pluggable database in question from where tkprof may then read and manage a generated trace-file. Unfortunately, it seems like tkprof is not using the RAC-enabled GV$DIAG_TRACE_FILE_CONTENTS view, but the one without G . suggesting that tkprof may only find tracefiles visible to the instance it is connected to.

Be careful out there, Martin Jensen - Nordea