Orthogonal and Symmetric Matrices

In this presentation, Hung-yi Lee discusses orthogonal and symmetric matrices: their properties, their applications, and their relationship to linear operators. Topics include norm preservation, the necessary conditions for a matrix to be norm-preserving, and practical ways to check whether a matrix is orthogonal.

Presentation Transcript

Orthogonal Matrices & Symmetric Matrices Hung-yi Lee

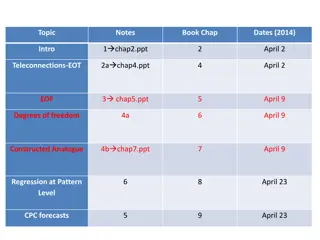

Outline: Orthogonal Matrices (Reference: Chapter 7.5); Symmetric Matrices (Reference: Chapter 7.6)

Orthogonal Matrix

An $n \times n$ matrix $Q$ is called an orthogonal matrix if the columns of $Q$ are orthonormal: every column is a unit vector, and any two distinct columns are orthogonal. An orthogonal operator is a linear operator whose standard matrix is an orthogonal matrix.

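Norm-preserving

A linear operator $T$ is norm-preserving if $\|T(u)\| = \|u\|$ for all $u$.

Example: the linear operator $T$ on $\mathbb{R}^2$ that rotates a vector by $\theta$. Is $T$ norm-preserving? Yes: a rotation does not change length.

Example: the linear operator $T$ is a reflection with standard matrix $A = \begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}$. Is $T$ norm-preserving? Yes: only the sign of the second coordinate changes.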

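Norm-preserving

A linear operator $T$ is norm-preserving if $\|T(u)\| = \|u\|$ for all $u$.

Example: the linear operator $T$ is a projection with standard matrix $A = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}$. Is $T$ norm-preserving? No: $T(e_2) = 0$ although $\|e_2\| = 1$.

Example: a linear operator $U$ on $\mathbb{R}^n$ that has an eigenvalue $\lambda \neq \pm 1$. $U$ is not norm-preserving, since for a corresponding eigenvector $v$, $\|U(v)\| = \|\lambda v\| = |\lambda| \cdot \|v\| \neq \|v\|$.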
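The rotation, reflection, and projection examples can be checked numerically. The following is a minimal sketch rather than part of the slides: the helper names `apply`, `norm`, and `preserves_norm` are hypothetical, the angle is arbitrary, and the reflection is assumed to be the one with matrix $\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}$. Passing on a finite set of sample vectors is only evidence of norm preservation, while a single failing sample disproves it.

```python
import math

def apply(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def norm(vector):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(x * x for x in vector))

def preserves_norm(matrix, samples, tol=1e-12):
    """True if ||A u|| equals ||u|| (within tol) for every sample vector u."""
    return all(abs(norm(apply(matrix, u)) - norm(u)) <= tol for u in samples)

theta = math.pi / 3  # arbitrary angle, chosen only for illustration
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]
reflection = [[1, 0], [0, -1]]   # assumed reflection matrix
projection = [[1, 0], [0, 0]]    # projection onto the first axis

samples = [[1, 0], [0, 1], [3, 4], [-2, 5]]
print(preserves_norm(rotation, samples))    # rotation keeps lengths
print(preserves_norm(reflection, samples))  # reflection keeps lengths
print(preserves_norm(projection, samples))  # projection shrinks [0, 1] to zero
```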

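Norm-preserving: necessary conditions

Claim: norm-preserving $\Rightarrow$ orthogonal matrix. Suppose the linear operator with standard matrix $Q = [\, q_1 \; q_2 \; \cdots \; q_n \,]$ is norm-preserving.

Each column is a unit vector: $\|q_j\| = \|Qe_j\| = \|e_j\| = 1$.

Distinct columns $q_i$ and $q_j$ are orthogonal: $\|q_i + q_j\|^2 = \|Qe_i + Qe_j\|^2 = \|Q(e_i + e_j)\|^2 = \|e_i + e_j\|^2 = 2 = \|q_i\|^2 + \|q_j\|^2$. Since $\|q_i + q_j\|^2 = \|q_i\|^2 + 2\, q_i \cdot q_j + \|q_j\|^2$, this forces $q_i \cdot q_j = 0$.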

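Orthogonal Matrix

The following statements are equivalent, and any of them can be used to check whether a matrix is orthogonal:

(1) $Q$ is an orthogonal matrix.
(2) $QQ^T = I_n$.
(3) $Q$ is invertible, and $Q^{-1} = Q^T$ (simple inverse).
(4) $Qu \cdot Qv = u \cdot v$ for any $u$ and $v$ ($Q$ preserves dot products).
(5) $\|Qu\| = \|u\|$ for any $u$ ($Q$ preserves norms).

In particular: norm-preserving $\Leftrightarrow$ orthogonal matrix.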
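As a practical check, one can form the product of the transpose with the matrix and compare it with the identity. The sketch below is an illustration under stated assumptions, not the author's code: `transpose`, `matmul`, and `is_orthogonal` are hypothetical helper names, matrices are plain lists of rows, and the tolerance `tol` is an arbitrary choice to absorb floating-point rounding.

```python
import math

def transpose(m):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def is_orthogonal(q, tol=1e-12):
    """True if q is square and Q^T Q equals the identity within tol."""
    n = len(q)
    if any(len(row) != n for row in q):
        return False
    product = matmul(transpose(q), q)
    return all(abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

r = 1 / math.sqrt(2)
print(is_orthogonal([[r, -r], [r, r]]))   # rotation by 45 degrees
print(is_orthogonal([[1, 0], [0, 0]]))    # projection: second column is not a unit vector
print(is_orthogonal([[1, 1], [0, 1]]))    # shear: columns are not orthogonal
```

Checking $Q^TQ = I_n$ tests the columns directly, because the $(i,j)$ entry of $Q^TQ$ is the dot product of columns $i$ and $j$.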

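Orthogonal Matrix: why the conditions are equivalent

Write $Q = [\, q_1 \; q_2 \; \cdots \; q_n \,]$.

Orthonormal columns: $q_i \cdot q_j = q_i^T q_j$, which must equal $1$ when $i = j$ and $0$ when $i \neq j$. The $(i,j)$ entry of $Q^TQ$ is $q_i^T q_j$, so the columns are orthonormal exactly when $Q^TQ = I_n$. For a square matrix this is equivalent to $Q^{-1} = Q^T$ and to $QQ^T = I_n$.

Dot products: $Qu \cdot Qv = (Qu)^T Qv = u^T Q^T Q v = u^T I_n v = u^T v = u \cdot v$.

Norms: taking $v = u$ gives $\|Qu\|^2 = Qu \cdot Qu = u \cdot u = \|u\|^2$, so $\|Qu\| = \|u\|$.

A norm-preserving matrix in turn has orthonormal columns, which closes the chain of equivalences.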

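Orthogonal Matrix

Let $P$ and $Q$ be $n \times n$ orthogonal matrices. Then:

(a) $\det Q = \pm 1$.
(b) $PQ$ is an orthogonal matrix (check by $(PQ)^{-1} = (PQ)^T$).
(c) $Q^{-1}$ is an orthogonal matrix (check by $(Q^{-1})^{-1} = (Q^{-1})^T$).
(d) $Q^T$ is an orthogonal matrix, since $Q^T = Q^{-1}$. Hence the rows of $Q$, as well as its columns, are orthonormal.

Proof.
(a) $QQ^T = I_n \Rightarrow 1 = \det(I_n) = \det(QQ^T) = \det(Q)\det(Q^T) = \det(Q)^2 \Rightarrow \det(Q) = \pm 1$.
(b) $(PQ)^T = Q^TP^T = Q^{-1}P^{-1} = (PQ)^{-1}$.
(c) $(Q^{-1})^{-1} = (Q^T)^{-1} = (Q^{-1})^T$.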

Orthogonal Operator

The properties of orthogonal matrices carry over to orthogonal operators: $T$ is an orthogonal operator $\Leftrightarrow$ $T(u) \cdot T(v) = u \cdot v$ for all $u$ and $v$ (preserves dot products) $\Leftrightarrow$ $\|T(u)\| = \|u\|$ for all $u$ (preserves norms).

If $T$ and $U$ are orthogonal operators, then $TU$ and $T^{-1}$ are orthogonal operators.

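Example: Find an orthogonal operator $T$ on $\mathbb{R}^3$ such that $T(v) = e_2$, where $v = \begin{bmatrix} 1/\sqrt{2} \\ 0 \\ 1/\sqrt{2} \end{bmatrix}$ and $e_2 = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$. This is possible only because $\|v\| = \|e_2\| = 1$: an orthogonal operator is norm-preserving.

Let $A$ be the standard matrix of $T$. Find $A^{-1}$ first: because $A^{-1} = A^T$, the matrix $A^{-1}$ is also orthogonal, and $Av = e_2$ gives $A^{-1}e_2 = v$, so the second column of $A^{-1}$ is $v$.

The other two columns must be unit vectors orthogonal to $v$ and to each other, so each satisfies $\frac{1}{\sqrt{2}}x_1 + 0x_2 + \frac{1}{\sqrt{2}}x_3 = 0$. One valid choice is

$$A^{-1} = \begin{bmatrix} 1/\sqrt{2} & 1/\sqrt{2} & 0 \\ 0 & 0 & 1 \\ -1/\sqrt{2} & 1/\sqrt{2} & 0 \end{bmatrix}, \qquad A = (A^{-1})^T = \begin{bmatrix} 1/\sqrt{2} & 0 & -1/\sqrt{2} \\ 1/\sqrt{2} & 0 & 1/\sqrt{2} \\ 0 & 1 & 0 \end{bmatrix}.$$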
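A candidate answer to this kind of exercise is easy to verify numerically. The sketch below assumes the target is $T(v) = e_2$ with $v = [1/\sqrt{2},\ 0,\ 1/\sqrt{2}]^T$, and it uses one possible standard matrix `A` (an assumption, since the solution is not unique: any orthonormal completion of the columns of $A^{-1}$ works). It checks that $Av = e_2$ and that the rows of $A$ are orthonormal, that is $AA^T = I_3$.

```python
import math

s = 1 / math.sqrt(2)
v = [s, 0.0, s]

# One possible standard matrix of T (an assumption; other choices exist).
A = [[s, 0.0, -s],
     [s, 0.0,  s],
     [0.0, 1.0, 0.0]]

def dot(x, y):
    """Dot product of two vectors."""
    return sum(a * b for a, b in zip(x, y))

image = [dot(row, v) for row in A]               # the vector A v
gram = [[dot(r1, r2) for r2 in A] for r1 in A]   # A A^T: dot products of the rows

print([round(x, 12) for x in image])
print([[round(x, 12) for x in row] for row in gram])
```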

Conclusion

Orthogonal matrix (operator): its columns and its rows are orthonormal (mutually orthogonal unit vectors); it preserves norms and dot products; its inverse is equal to its transpose.

Outline: Orthogonal Matrices (Reference: Chapter 7.5); Symmetric Matrices (Reference: Chapter 7.6)

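Eigenvalues are real

The eigenvalues of symmetric matrices are always real. Consider a $2 \times 2$ symmetric matrix $A = \begin{bmatrix} a & b \\ b & c \end{bmatrix}$. Its characteristic polynomial is $\det(A - tI_2) = t^2 - (a + c)t + ac - b^2$, whose discriminant $(a + c)^2 - 4(ac - b^2) = (a - c)^2 + 4b^2$ is never negative, so both roots are real. How about more general cases? Symmetric matrices of any size always have real eigenvalues.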

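Eigenvalues are real

Let $A$ be a real symmetric matrix. Over the complex numbers $A$ always has an eigenvalue $\lambda = a + bi$, with an eigenvector $v \neq 0$ whose entries may be complex. Write $\bar{\lambda} = a - bi$ and $\bar{v}$ for the complex conjugates.

From $Av = \lambda v$, taking conjugates (the entries of $A$ are real) gives $A\bar{v} = \bar{\lambda}\bar{v}$.

Compute $\bar{v}^T A v$ in two ways:

$\bar{v}^T A v = \bar{v}^T (\lambda v) = \lambda\, \bar{v}^T v$

$\bar{v}^T A v = \bar{v}^T A^T v = (A\bar{v})^T v = (\bar{\lambda}\bar{v})^T v = \bar{\lambda}\, \bar{v}^T v$

If the entries of $v$ are $v_k = a_k + b_k i$, then $\bar{v}^T v = \sum_k (a_k - b_k i)(a_k + b_k i) = \sum_k (a_k^2 + b_k^2) > 0$. Hence $\lambda = \bar{\lambda}$, that is $b = 0$, and $\lambda$ is real.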

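Orthogonal Eigenvectors

Let $A$ be symmetric. Up to sign, its characteristic polynomial factors as $\det(A - tI_n) = (t - \lambda_1)^{m_1}(t - \lambda_2)^{m_2} \cdots (t - \lambda_k)^{m_k}$ with distinct eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_k$. Each eigenvalue $\lambda_i$ has an eigenspace whose dimension is at most $m_i$. For any square matrix, eigenvectors taken from different eigenspaces are linearly independent; for a symmetric matrix they are moreover orthogonal.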

Orthogonal Eigenvectors

$A$ is symmetric. If $u$ and $v$ are eigenvectors corresponding to eigenvalues $\lambda$ and $\mu$ with $\lambda \neq \mu$, then $u$ and $v$ are orthogonal.
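The reason can be seen from a short computation, sketched here using only $A^T = A$. The symbols are assumptions made for the derivation: $u$ and $v$ are the two eigenvectors, with $Au = \lambda u$, $Av = \mu v$, and $\lambda \neq \mu$.

```latex
\begin{aligned}
\lambda\,(u \cdot v) &= (\lambda u) \cdot v = (Au) \cdot v = (Au)^T v = u^T A^T v \\
                     &= u^T A v = u \cdot (Av) = u \cdot (\mu v) = \mu\,(u \cdot v)
\end{aligned}
```

Hence $(\lambda - \mu)(u \cdot v) = 0$, and because $\lambda \neq \mu$ it follows that $u \cdot v = 0$.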

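Diagonalization

$A$ is symmetric $\Leftrightarrow$ $P^TAP = D$ (equivalently $A = PDP^T$) for some orthogonal matrix $P$ and diagonal matrix $D$.

Because $P$ is orthogonal, its inverse is simple: $P^{-1} = P^T$. So $P^TAP = D$ is the same as $P^{-1}AP = D$, that is $A = PDP^{-1}$, an ordinary diagonalization: the columns of $P$ are eigenvectors of $A$, and the diagonal entries of $D$ are the corresponding eigenvalues.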

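Diagonalization: proof that a symmetric $A$ satisfies $P^TAP = D$

The argument is by induction on the size $n$ of $A$.

$A$ has a real eigenvalue $\lambda$. Choose a unit eigenvector $v_1$, so $Av_1 = \lambda v_1$. By the Extension Theorem and the Gram-Schmidt Process, extend it to an orthonormal basis $\{v_1, v_2, \ldots, v_n\}$ of $\mathbb{R}^n$ (the vectors $v_2, \ldots, v_n$ need not be eigenvectors), and let $Q = [\, v_1 \; v_2 \; \cdots \; v_n \,]$, which is an orthogonal matrix.

$Q^TAQ$ is symmetric: $(Q^TAQ)^T = Q^TA^T(Q^T)^T = Q^TAQ$.

Its first column is $Q^TAQe_1 = Q^TAv_1 = Q^T(\lambda v_1) = \lambda Q^Tv_1 = \lambda e_1$, because the entries of $Q^Tv_1$ are the dot products $v_i \cdot v_1$. Together with symmetry this gives

$$Q^TAQ = \begin{bmatrix} \lambda & 0 \\ 0 & A' \end{bmatrix},$$

where $A'$ is a symmetric $(n-1) \times (n-1)$ matrix.

By the induction hypothesis there is an orthogonal matrix $P'$ such that $P'^TA'P' = D'$ is diagonal. Let $R = \begin{bmatrix} 1 & 0 \\ 0 & P' \end{bmatrix}$, which is also orthogonal. Then

$$R^TQ^TAQR = \begin{bmatrix} 1 & 0 \\ 0 & P'^T \end{bmatrix} \begin{bmatrix} \lambda & 0 \\ 0 & A' \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & P' \end{bmatrix} = \begin{bmatrix} \lambda & 0 \\ 0 & P'^TA'P' \end{bmatrix} = \begin{bmatrix} \lambda & 0 \\ 0 & D' \end{bmatrix} = D.$$

The matrix $P = QR$ is a product of orthogonal matrices, hence orthogonal, and $P^TAP = D$.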

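Diagonalization Example

Let $A = \begin{bmatrix} 2 & 2 \\ 2 & 5 \end{bmatrix}$. $A$ has eigenvalues $\lambda_1 = 6$ and $\lambda_2 = 1$, with corresponding eigenspaces $E_1 = \mathrm{Span}\{[\,1 \;\; 2\,]^T\}$ and $E_2 = \mathrm{Span}\{[\,-2 \;\; 1\,]^T\}$, which are orthogonal. Normalizing gives $B_1 = \{[\,1 \;\; 2\,]^T/\sqrt{5}\}$ and $B_2 = \{[\,-2 \;\; 1\,]^T/\sqrt{5}\}$, so

$$P = \frac{1}{\sqrt{5}} \begin{bmatrix} 1 & -2 \\ 2 & 1 \end{bmatrix} \quad \text{and} \quad D = \begin{bmatrix} 6 & 0 \\ 0 & 1 \end{bmatrix},$$

with $P^TAP = D$ and $A = PDP^T = PDP^{-1}$.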
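The claim $P^TAP = D$ for this example can be confirmed by direct multiplication. The sketch below assumes $A = \begin{bmatrix} 2 & 2 \\ 2 & 5 \end{bmatrix}$ and takes the columns of `P` to be $[1, 2]^T/\sqrt{5}$ and $[-2, 1]^T/\sqrt{5}$; `transpose` and `matmul` are hypothetical helpers written for this illustration.

```python
import math

A = [[2, 2], [2, 5]]
r = 1 / math.sqrt(5)
P = [[r, -2 * r],
     [2 * r, r]]   # columns: unit eigenvectors for eigenvalues 6 and 1

def transpose(m):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

D = matmul(matmul(transpose(P), A), P)   # P^T A P
print([[round(x, 12) for x in row] for row in D])
```

Up to rounding, the result is the diagonal matrix with entries 6 and 1, in the same order as the eigenvector columns of `P`.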

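Example of Diagonalization of Symmetric Matrix

Goal: $A = PDP^T = PDP^{-1}$, where $P$ is an orthogonal matrix.

$\lambda_1 = 2$: the eigenspace is $\mathrm{Span}\{[\,-1 \;\; 1 \;\; 0\,]^T, [\,-1 \;\; 0 \;\; 1\,]^T\}$. These two vectors are independent but not orthogonal, so apply the Gram-Schmidt Process and normalize, which gives $\{[\,-1 \;\; 1 \;\; 0\,]^T/\sqrt{2},\; [\,-1 \;\; -1 \;\; 2\,]^T/\sqrt{6}\}$.

$\lambda_2 = 8$: the eigenspace is $\mathrm{Span}\{[\,1 \;\; 1 \;\; 1\,]^T\}$. Normalization gives $[\,1 \;\; 1 \;\; 1\,]^T/\sqrt{3}$.

$$P = \begin{bmatrix} -1/\sqrt{2} & -1/\sqrt{6} & 1/\sqrt{3} \\ 1/\sqrt{2} & -1/\sqrt{6} & 1/\sqrt{3} \\ 0 & 2/\sqrt{6} & 1/\sqrt{3} \end{bmatrix}, \qquad D = \begin{bmatrix} 2 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 8 \end{bmatrix}.$$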
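The Gram-Schmidt step for a repeated eigenvalue can be sketched in a few lines. This is an illustration under assumptions, not the author's code: `gram_schmidt` is a hypothetical helper, it uses the modified (one-projection-at-a-time) variant of the process, and the input vectors $[-1, 1, 0]^T$ and $[-1, 0, 1]^T$ are assumed to span the eigenspace for the eigenvalue 2.

```python
import math

def dot(u, v):
    """Dot product of two vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list spanning the same subspace as `vectors`."""
    basis = []
    for v in vectors:
        w = [float(x) for x in v]
        for b in basis:
            coeff = dot(w, b)                       # component of w along b
            w = [wi - coeff * bi for wi, bi in zip(w, b)]
        length = math.sqrt(dot(w, w))
        if length > tol:                            # skip dependent vectors
            basis.append([wi / length for wi in w])
    return basis

eigenspace_vectors = [[-1, 1, 0], [-1, 0, 1]]       # independent, not orthogonal
b1, b2 = gram_schmidt(eigenspace_vectors)
print([round(x, 6) for x in b1])   # proportional to [-1, 1, 0]
print([round(x, 6) for x in b2])   # proportional to [-1, -1, 2]
```

Because both outputs are combinations of vectors from the same eigenspace, they remain eigenvectors for the same eigenvalue.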

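Diagonalization

$A$ is symmetric $\Leftrightarrow$ $P^TAP = D$ (equivalently $A = PDP^T$), where $P$ is an orthogonal matrix whose columns are eigenvectors of $A$ and $D$ is a diagonal matrix holding the eigenvalues.

Finding an orthonormal basis consisting of eigenvectors of $A$:
(1) Compute all distinct eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_k$ of $A$.
(2) Determine the corresponding eigenspaces $E_1, E_2, \ldots, E_k$.
(3) Get an orthonormal basis $B_i$ for each $E_i$.
(4) $B = B_1 \cup B_2 \cup \cdots \cup B_k$ is an orthonormal basis for $\mathbb{R}^n$ consisting of eigenvectors of $A$.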
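For a $2 \times 2$ symmetric matrix the four steps can be carried out in closed form. The following is a minimal sketch under stated assumptions: the function name `orthonormal_eigenbasis_2x2` is hypothetical, the matrix is passed as its entries $a, b, c$ in $\begin{bmatrix} a & b \\ b & c \end{bmatrix}$, and the eigenvalues come from the quadratic formula applied to $t^2 - (a + c)t + ac - b^2$. Larger matrices need a general eigenvalue routine, which this sketch does not attempt.

```python
import math

def orthonormal_eigenbasis_2x2(a, b, c):
    """Eigenvalues and orthonormal eigenvectors of [[a, b], [b, c]].

    Returns (eigenvalues, P); the columns of P are unit eigenvectors
    listed in the same order as the eigenvalues.
    """
    if b == 0:
        # Already diagonal: the standard basis vectors are eigenvectors.
        return [a, c], [[1.0, 0.0], [0.0, 1.0]]
    # Step 1: roots of t^2 - (a + c) t + (ac - b^2).
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    eigenvalues = [mean + radius, mean - radius]
    # Steps 2 and 3: (A - lam I) x = 0 has the solution x = [b, lam - a];
    # normalizing it gives an orthonormal basis of each eigenspace.
    columns = []
    for lam in eigenvalues:
        x = [b, lam - a]
        length = math.hypot(x[0], x[1])
        columns.append([x[0] / length, x[1] / length])
    # Step 4: place the basis vectors side by side as the columns of P.
    P = [[columns[0][0], columns[1][0]],
         [columns[0][1], columns[1][1]]]
    return eigenvalues, P

eigenvalues, P = orthonormal_eigenbasis_2x2(2, 2, 5)
print(eigenvalues)
print(P)
```

The eigenvectors returned may differ from a hand computation by a sign, which does not affect orthonormality or the identity $P^TAP = D$.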

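Diagonalization of Symmetric Matrix

When $A$ is symmetric, the eigenvectors can be properly selected to form an orthonormal basis $B = \{v_1, v_2, \ldots, v_n\}$, which makes a good coordinate system. Any $u$ decomposes as $u = c_1v_1 + c_2v_2 + \cdots + c_nv_n$, and because $B$ is orthonormal the coordinates are simple to compute: $c_i = u \cdot v_i$.

With $P = [\, v_1 \; v_2 \; \cdots \; v_n \,]$, multiplying by $A$ becomes three simple steps: change to $B$-coordinates with $P^{-1} = P^T$, scale each coordinate by its eigenvalue with $D$, and change back with $P$. That is, $A = PDP^{-1} = PDP^T$.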

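Spectral Decomposition

Let $\{u_1, u_2, \ldots, u_n\}$ be an orthonormal basis consisting of eigenvectors of the symmetric matrix $A$, and let $P = [\, u_1 \; u_2 \; \cdots \; u_n \,]$ and $D = \mathrm{diag}[\, \lambda_1 \; \lambda_2 \; \cdots \; \lambda_n \,]$. Then

$$\begin{aligned}
A = PDP^T &= P[\, \lambda_1e_1 \;\; \lambda_2e_2 \;\; \cdots \;\; \lambda_ne_n \,]P^T \\
&= [\, \lambda_1Pe_1 \;\; \lambda_2Pe_2 \;\; \cdots \;\; \lambda_nPe_n \,]P^T \\
&= [\, \lambda_1u_1 \;\; \lambda_2u_2 \;\; \cdots \;\; \lambda_nu_n \,] \begin{bmatrix} u_1^T \\ u_2^T \\ \vdots \\ u_n^T \end{bmatrix} \\
&= \lambda_1u_1u_1^T + \lambda_2u_2u_2^T + \cdots + \lambda_nu_nu_n^T \\
&= \lambda_1P_1 + \lambda_2P_2 + \cdots + \lambda_nP_n,
\end{aligned}$$

where each $P_i = u_iu_i^T$ is the product of an $n \times 1$ matrix and a $1 \times n$ matrix, hence $n \times n$. The matrices $P_i$ are symmetric.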

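Spectral Decomposition Example

Find the spectral decomposition of $A = \begin{bmatrix} 3 & -4 \\ -4 & -3 \end{bmatrix}$.

The eigenvalues are $\lambda_1 = 5$ and $\lambda_2 = -5$. An orthonormal basis consisting of eigenvectors of $A$ is $\{u_1, u_2\}$ with

$$u_1 = \begin{bmatrix} -2/\sqrt{5} \\ 1/\sqrt{5} \end{bmatrix}, \qquad u_2 = \begin{bmatrix} 1/\sqrt{5} \\ 2/\sqrt{5} \end{bmatrix}.$$

$$P_1 = u_1u_1^T = \begin{bmatrix} 4/5 & -2/5 \\ -2/5 & 1/5 \end{bmatrix}, \qquad P_2 = u_2u_2^T = \begin{bmatrix} 1/5 & 2/5 \\ 2/5 & 4/5 \end{bmatrix}.$$

$$A = \lambda_1P_1 + \lambda_2P_2 = 5P_1 - 5P_2.$$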
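A spectral decomposition can be checked by rebuilding the matrix from its rank-one pieces. The sketch below is illustrative only: `outer` and `spectral_sum` are hypothetical helpers, and the eigen-data are assumed to be the eigenvalues $5$ and $-5$ with unit eigenvectors $[-2, 1]^T/\sqrt{5}$ and $[1, 2]^T/\sqrt{5}$.

```python
import math

def outer(u, v):
    """Outer product u v^T as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]

def spectral_sum(eigenvalues, basis):
    """Return the sum of lambda_i * u_i u_i^T over the given eigenpairs."""
    n = len(basis[0])
    total = [[0.0] * n for _ in range(n)]
    for lam, u in zip(eigenvalues, basis):
        projection = outer(u, u)            # P_i = u_i u_i^T
        for i in range(n):
            for j in range(n):
                total[i][j] += lam * projection[i][j]
    return total

r = 1 / math.sqrt(5)
u1 = [-2 * r, r]     # unit eigenvector for eigenvalue 5
u2 = [r, 2 * r]      # unit eigenvector for eigenvalue -5

A = spectral_sum([5, -5], [u1, u2])
identity = spectral_sum([1, 1], [u1, u2])   # P_1 + P_2

print([[round(x, 12) for x in row] for row in A])
print([[round(x, 12) for x in row] for row in identity])
```

Replacing every eigenvalue by 1 sums the matrices $P_i$ themselves, which for an orthonormal basis gives the identity matrix; this is a quick sanity check on the eigenvectors.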

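Conclusion

Any symmetric matrix has only real eigenvalues, has orthogonal eigenvectors (for distinct eigenvalues), and is always diagonalizable.

$A$ is symmetric $\Leftrightarrow$ $P^TAP = D$, that is $A = PDP^T$, where $P$ is an orthogonal matrix and $D$ is a diagonal matrix.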