Personalized News Article Recommendation Through Contextual Bandits Approach

Explore applications of contextual bandits to personalized news article recommendation, self-supervised robotic grasping, interactive personalized music recommendation, and more. The slides also list contextual bandit work in wellness and education, along with references on evaluation, exploration algorithms, systems, non-stationarity, and combinatorial actions.

Presentation Transcript

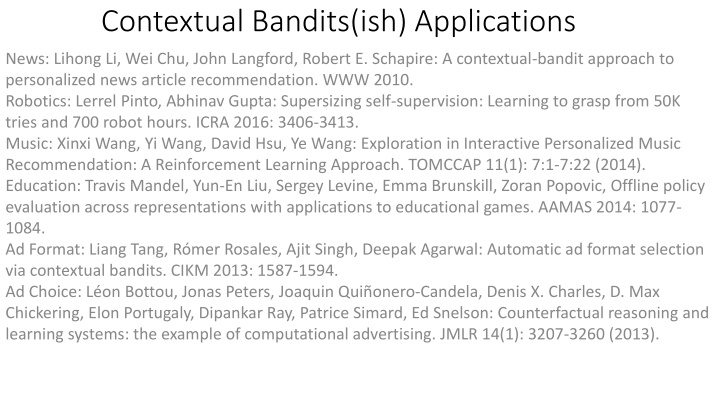

Contextual Bandits(ish) Applications

- News: Lihong Li, Wei Chu, John Langford, Robert E. Schapire: A contextual-bandit approach to personalized news article recommendation. WWW 2010.
- Robotics: Lerrel Pinto, Abhinav Gupta: Supersizing self-supervision: Learning to grasp from 50K tries and 700 robot hours. ICRA 2016: 3406-3413.
- Music: Xinxi Wang, Yi Wang, David Hsu, Ye Wang: Exploration in Interactive Personalized Music Recommendation: A Reinforcement Learning Approach. TOMCCAP 11(1): 7:1-7:22 (2014).
- Education: Travis Mandel, Yun-En Liu, Sergey Levine, Emma Brunskill, Zoran Popovic: Offline policy evaluation across representations with applications to educational games. AAMAS 2014: 1077-1084.
- Ad Format: Liang Tang, Rómer Rosales, Ajit Singh, Deepak Agarwal: Automatic ad format selection via contextual bandits. CIKM 2013: 1587-1594.
- Ad Choice: Léon Bottou, Jonas Peters, Joaquin Quiñonero-Candela, Denis X. Charles, D. Max Chickering, Elon Portugaly, Dipankar Ray, Patrice Simard, Ed Snelson: Counterfactual reasoning and learning systems: the example of computational advertising. JMLR 14(1): 3207-3260 (2013).

Wellness Contextual Bandits Work

- P. Paredes, R. Gilad-Bachrach, M. Czerwinski, A. Roseway, K. Rowan and J. Hernandez, "Pop Therapy: Coping with stress through pop-culture," in Proceedings of the 8th International Conference on Pervasive Computing Technologies for Healthcare, 2014.
- I. Hochberg, G. Feraru, M. Kozdoba, S. Mannor, M. Tennenholtz, E. Yom-Tov, "Encouraging Physical Activity in Diabetes Patients Through Automatic Personalized Feedback Via Reinforcement Learning Improves Glycemic Control," Diabetes Care 39(4): e59-e60, 2016.
- S. M. Shortreed, E. Laber, D. Z. Lizotte, S. T. Stroup, J. Pineau and S. A. Murphy, "Informing sequential clinical decision-making through reinforcement learning: an empirical study," Machine Learning, vol. 84, no. 1-2, pp. 109-136, 2011.
- I. Nahum-Shani, E. B. Hekler and D. Spruijt-Metz, "Building health behavior models to guide the development of just-in-time adaptive interventions: A pragmatic framework," Health Psychology, vol. 34, p. 1209, 2015.
- I. Nahum-Shani, S. S. Smith, A. Tewari, K. Witkiewitz, L. M. Collins, B. Spring and S. Murphy, "Just in time adaptive interventions (JITAIs): An organizing framework for ongoing health behavior support," Methodology Center technical report, 2014.
- P. Klasnja, E. B. Hekler, S. Shiffman, A. Boruvka, D. Almirall, A. Tewari and S. A. Murphy, "Microrandomized trials: An experimental design for developing just-in-time adaptive interventions," Health Psychology, vol. 34, p. 1220, 2015.
- Y. Zhao, M. R. Kosorok and D. Zeng, "Reinforcement learning design for cancer clinical trials," Statistics in Medicine, vol. 28, no. 26, p. 3294, 2009.

Evaluation References

- IPS: D. G. Horvitz and D. J. Thompson: A Generalization of Sampling Without Replacement from a Finite Universe. Journal of the American Statistical Association, Volume 47, Issue 260, 1952.
- Double Robust: Miroslav Dudík, John Langford, Lihong Li: Doubly Robust Policy Evaluation and Learning. ICML 2011: 1097-1104.
- Weighted IPS 1: Augustine Kong: A Note on Importance Sampling using Standardized Weights. http://statistics.uchicago.edu/techreports/tr348.pdf
- Weighted IPS 2: Adith Swaminathan, Thorsten Joachims: The Self-Normalized Estimator for Counterfactual Learning. NIPS 2015: 3231-3239.
- Clipping: Oliver Bembom and Mark J. van der Laan: Data-adaptive selection of the truncation level for inverse probability of treatment-weighted estimators. 2008.

Learning from Exploration References

- Policy Gradient: Ronald J. Williams: Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Machine Learning 8: 229-256 (1992).
- Importance Weighted Multiclass: B. Zadrozny, Policy mining: Learning decision policies from fixed sets of data, Ph.D. Thesis, University of California, San Diego, 2003.
- A. Beygelzimer and J. Langford, The Offset Tree for Learning with Partial Labels, KDD 2009.
- M. Dudik, J. Langford and L. Li, Doubly Robust Policy Evaluation and Learning, ICML 2011.
- Multitask Regression: Unpublished, but in Vowpal Wabbit.

Learning & Exploration Evaluation

- Progressive Validation: Avrim Blum, Adam Kalai, John Langford: Beating the Hold-Out: Bounds for K-fold and Progressive Cross-Validation. COLT 1999: 203-208.
- Progressive Validation 2: Nicolò Cesa-Bianchi, Alex Conconi, Claudio Gentile: On the Generalization Ability of On-Line Learning Algorithms. IEEE Trans. Information Theory 50(9): 2050-2057 (2004).
- Nonstationary Policy: Miroslav Dudík, Dumitru Erhan, John Langford, Lihong Li: Sample-efficient Nonstationary Policy Evaluation for Contextual Bandits. UAI 2012: 247-254.
- Miroslav Dudík, Dumitru Erhan, John Langford, Lihong Li: Doubly Robust Policy Evaluation and Optimization. Statistical Science 2014.

Exploration Algorithm References

- Thompson: W. R. Thompson: On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika 25(3-4): 285-294, 1933.
- Epoch Greedy: J. Langford and T. Zhang, The Epoch-Greedy Algorithm for Contextual Multi-armed Bandits, NIPS 2007.
- EXP4: Peter Auer, Nicolò Cesa-Bianchi, Yoav Freund, Robert E. Schapire: The Nonstochastic Multiarmed Bandit Problem. SIAM J. Comput. 32(1): 48-77 (2002).
- Polytime: Miroslav Dudík, Daniel J. Hsu, Satyen Kale, Nikos Karampatziakis, John Langford, Lev Reyzin, Tong Zhang: Efficient Optimal Learning for Contextual Bandits. UAI 2011: 169-178.
- Cover & Bag: A. Agarwal, D. Hsu, S. Kale, J. Langford, L. Li, R. Schapire, Taming the Monster: A Fast and Simple Algorithm for Contextual Bandits, ICML 2014.
- Bootstrap: D. Eckles and M. Kaptein, Thompson Sampling with Online Bootstrap, arxiv.org/1410.4009

References: Systems

- Technical debt paper: D. Sculley, Gary Holt, Daniel Golovin, Eugene Davydov, Todd Phillips, Dietmar Ebner, Vinay Chaudhary, Michael Young, Machine Learning: The High Interest Credit Card of Technical Debt, NIPS 2014 Workshop on Software Engineering for Machine Learning.
- Decision Service paper: Alekh Agarwal, Sarah Bird, Markus Cozowicz, Luong Hoang, John Langford, Stephen Lee, Jiaji Li, Dan Melamed, Gal Oshri, Oswaldo Ribas, Siddhartha Sen, Alex Slivkins, Making Contextual Decisions with Low Technical Debt, Arxiv 2016.
- NEXT System: Kevin Jamieson, Lalit Jain, Chris Fernandez, Nick Glattard and Rob Nowak, NEXT: A System for Real-World Development, Evaluation, and Application of Active Learning, NIPS 2015.
- StreamingBandit System: Maurits Kaptein and Jules Kruijswijk, StreamingBandit: Developing Adaptive Persuasive Systems, Arxiv 2016.

References: Non-stationarity

MAB references

- Deepayan Chakrabarti, Ravi Kumar, Filip Radlinski, and Eli Upfal, Mortal multi-armed bandits, NIPS 2009.
- Peter Auer, Nicolo Cesa-Bianchi, Yoav Freund, and Robert E Schapire, The nonstochastic multiarmed bandit problem, SIAM Journal on Computing, 2002.
- Omar Besbes, Yonatan Gur, and Assaf Zeevi, Stochastic multi-armed-bandit problem with non-stationary rewards, NIPS 2014.
- Chen-Yu Wei, Yi-Te Hong, and Chi-Jen Lu, Tracking the best expert in non-stationary stochastic environments, NIPS 2016.

Aggregating CB algorithms

- Alekh Agarwal, Haipeng Luo, Behnam Neyshabur, and Robert E Schapire, Corralling a band of bandit algorithms, COLT 2017.

References: Combinatorial actions

Semibandits

- S. Kale, L. Reyzin, and R. E. Schapire, Non-stochastic bandit slate problems, NIPS 2010.
- B. Kveton, Z. Wen, A. Ashkan, and C. Szepesvári, Tight regret bounds for stochastic combinatorial semi-bandits, AISTATS 2015.
- A. Krishnamurthy, A. Agarwal and M. Dudik, Contextual semibandits with supervised learning oracles, NIPS 2016.

Slates

- S. Kale, L. Reyzin, and R. E. Schapire, Non-stochastic bandit slate problems, NIPS 2010.
- A. Swaminathan, A. Krishnamurthy, A. Agarwal, M. Dudik, J. Langford, D. Jose and I. Zitouni, Off-policy evaluation for slate recommendation, Arxiv 2016.

Cascading

- B. Kveton, Z. Wen, A. Ashkan, and C. Szepesvári, Tight regret bounds for stochastic combinatorial semi-bandits, AISTATS 2015.
- S. Li, B. Wang, S. Zhang, W. Chen, Contextual Combinatorial Cascading Bandits, ICML 2016.

References: Combinatorial actions (contd.)

Diverse Rankings

- F. Radlinski, R. Kleinberg and T. Joachims, Learning Diverse Rankings with Multi-Armed Bandits, ICML 2008.
- A. Slivkins, F. Radlinski and S. Gollapudi, Ranked bandits in metric spaces: learning optimally diverse rankings over large document collections, JMLR 2013.
- M. Streeter and D. Golovin, An online algorithm for maximizing submodular functions, NIPS 2009.