Pointer Network and Sequence-to-Sequence in Machine Learning

This presentation introduces Pointer Networks and sequence-to-sequence models in machine learning. It covers the encoder-decoder architecture, attention mechanisms, and applications such as summarization, machine translation, and chat-bots. In a Pointer Network, each output is selected from the input positions according to the attention weights, and generation ends when the 'END' token receives the highest attention weight.

Presentation Transcript

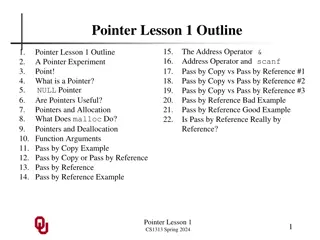

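Pointer Network: a neural network (NN) takes the coordinates of the input points (for example, the coordinate of P1) and outputs a sequence of point indices. The slide shows 5, 3, 4, 2, 7, 6.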

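Sequence-to-sequence? An Encoder reads the input sequence and a Decoder generates the output sequence token by token. The slide example is "machine", "learning".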

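Problem? With a sequence-to-sequence model, the Encoder reads the four input points and the Decoder outputs 1, 4, 2 by choosing each token from the fixed set {1, 2, 3, 4, END}. Of course, one can add attention. The output set is fixed in advance, however, while the number of input points can change from one example to the next.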

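Pointer Network: an extra input at position 0 represents END. A key is used to compute an attention weight for each input position 0, 1, 2, 3, 4 (for example, 0.5 for one position on the slide).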
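The attention weights over the input positions can be sketched as follows. This is a minimal illustration, not the method from the slides: the dot-product scoring, the function name `attention_weights`, and all vector values are assumptions made for the example, since the transcript does not say how the key is scored against each position.

```python
import math

def attention_weights(key, encoder_states):
    """Softmax over dot-product scores between one key and every encoder state.

    Dot-product scoring is an assumption for this sketch; other scoring
    functions (e.g. additive attention) fit the same interface.
    """
    scores = [sum(k * h for k, h in zip(key, state)) for state in encoder_states]
    peak = max(scores)  # subtract the max before exponentiating, for stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Position 0 is the extra END entry; positions 1..4 are the four input points.
# All numbers below are made up for illustration.
encoder_states = [
    [0.0, 0.0],   # 0: END
    [1.0, 0.0],   # 1
    [0.0, 1.0],   # 2
    [1.0, 1.0],   # 3
    [-1.0, 0.0],  # 4
]
key = [2.0, 0.5]

weights = attention_weights(key, encoder_states)
pointed_at = weights.index(max(weights))  # position with the largest weight
```

Because there is one weight per input position, the size of the distribution follows the input length rather than a fixed output vocabulary.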

Pointer Network, first step: the attention weights over positions 0 to 4 are 0.0, 0.5, 0.3, 0.2, 0.0. The output is the argmax from this distribution, so Output: 1. What the decoder can output depends on the input.

Pointer Network, second step: the attention weights over positions 0 to 4 are 0.0, 0.0, 0.1, 0.2, 0.7. The output is the argmax from this distribution, so Output: 4. What the decoder can output depends on the input. The process stops when END has the largest attention weight.
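The decoding loop described on these slides can be sketched as below. The function `pointer_decode` and the `max_steps` guard are hypothetical names introduced for this example, and the per-step distributions are supplied as a plain list where a trained model would compute them. The first two distributions are the ones shown on the slides; the last two are made up so that the run ends on END.

```python
END = 0  # position 0 is reserved for the END entry, as on the slides

def pointer_decode(step_distributions, max_steps=10):
    """Pick the argmax position at each step; stop when END wins.

    `step_distributions` stands in for a trained model: one attention
    distribution over input positions per decoding step (an assumption
    made to keep the sketch self-contained).
    """
    outputs = []
    for step, weights in enumerate(step_distributions):
        if step >= max_steps:  # safety guard against a run that never emits END
            break
        choice = max(range(len(weights)), key=lambda i: weights[i])  # argmax
        if choice == END:
            break
        outputs.append(choice)
    return outputs

distributions = [
    [0.0, 0.5, 0.3, 0.2, 0.0],  # from the slides: argmax is position 1
    [0.0, 0.0, 0.1, 0.2, 0.7],  # from the slides: argmax is position 4
    [0.1, 0.0, 0.6, 0.3, 0.0],  # illustrative: argmax is position 2
    [0.8, 0.0, 0.1, 0.1, 0.0],  # illustrative: END has the largest weight
]

result = pointer_decode(distributions)
print(result)  # [1, 4, 2]
```

The returned indices always refer to positions in the current input, so the same loop works for any number of input points.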

Applications - Summarization (https://arxiv.org/abs/1704.04368)

More Applications: Machine Translation; Chat-bot (User: X Machine:)