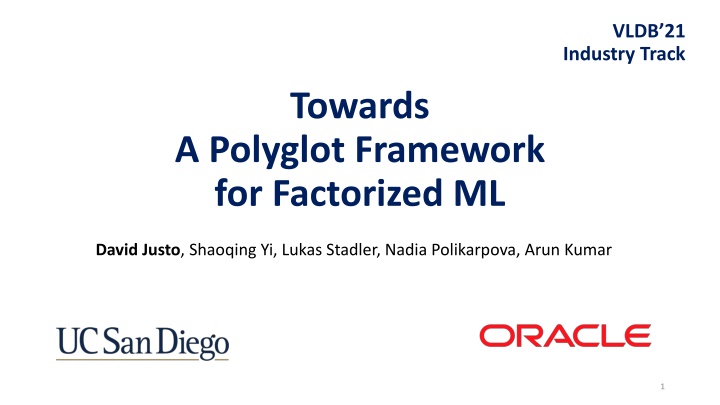

Polyglot Framework for Factorized ML in Industry Track VLDB21

"Explore a polyglot framework for factorized machine learning discussed in the industry track at VLDB21. Learn about challenges and solutions in maintaining dev tools across multiple programming languages, with a focus on data normalization and optimization."

Presentation Transcript

VLDB21 Industry Track: Towards A Polyglot Framework for Factorized ML. David Justo, Shaoqing Yi, Lukas Stadler, Nadia Polikarpova, Arun Kumar 1

A Polyglot Data Science World 2

Challenge: Maintaining dev-tools across multiple PLs is hard to scale 3

Morpheus 4

De-normalizing leads to redundancy (figure labels: A relational dataset; JOIN; The dataset post-join) 5

De-normalizing leads to redundancy 6

De-normalizing leads to redundancy: Op( ) 7

De-normalizing leads to redundancy: Op( ) Op( ) Op( ) 8

De-normalizing leads to redundancy: Op( ) Op( ) Op( ) 9
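To make the redundancy point concrete, here is a minimal sketch of one factorized operator, a matrix-vector product pushed down to the base tables instead of the joined table. The function names, the foreign-key encoding, and the toy data are assumptions for illustration; they are not taken from the talk or from the Morpheus code base.

```python
# Illustrative sketch only: names and toy data are assumptions, not from the talk.

def matvec(M, w):
    """Dense matrix-vector product over lists of lists."""
    return [sum(a * b for a, b in zip(row, w)) for row in M]

# Entity table S (4 rows, 1 feature) with a foreign key into attribute table R.
S = [[1.0], [2.0], [3.0], [4.0]]
fk = [0, 1, 0, 1]                      # row i of S joins with row fk[i] of R
R = [[10.0, 20.0], [30.0, 40.0]]

def materialize(S, fk, R):
    """De-normalized table T = S JOIN R; each row of R is copied repeatedly."""
    return [s_row + R[k] for s_row, k in zip(S, fk)]

def factorized_matvec(S, fk, R, w):
    """Compute T @ w without building T."""
    d_s = len(S[0])
    w_s, w_r = w[:d_s], w[d_s:]
    partial_s = matvec(S, w_s)
    partial_r = matvec(R, w_r)         # once per R row, not once per S row
    return [ps + partial_r[k] for ps, k in zip(partial_s, fk)]

w = [0.5, 1.0, 2.0]
T = materialize(S, fk, R)
assert matvec(T, w) == factorized_matvec(S, fk, R, w)
```

In this toy case each row of R appears twice in T, so the materialized product repeats the R-side work that the factorized version does only once.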

A development challenge: New PL, New Optimization (Opt.1, Opt.2, Opt.3). Massive burden for a research team 10

Key Idea: We need to disentangle the specification of rewrite rules from target-PL domain knowledge 11

Trinity: A framework to support and develop polyglot/multi-language factorized ML systems 12

Contributions: To our knowledge, the first system to generalize factorized ML to multiple PLs at once; Extend GraalVM's interoperability abstractions with Matrix support; Demonstrate perf improvements in 3 new leaner prototypes for R, JS, and Python 13

Agenda: Introduction, GraalVM, Architecture, Evaluation, Future Directions 14

Agenda: Introduction, GraalVM, Architecture, Evaluation, Future Directions 15

Your new PL here! Shared Interpreter 16

Agenda: Introduction, GraalVM, Architecture, Evaluation, Future Directions 17

MorpheusDSL; MatrixLib; Normalized Matrix; Op( ); Matrix is sparse in ? Yes / No; Call Foo(); Call Bar() 18

MorpheusDSL: Morpheus rewrite rules as AST nodes 19

A MorpheusDSL Rewrite: Some PL, e.g. R; *; 5; MorpheusDSL; MorpheusDSL semantics 20

A MorpheusDSL Rewrite: Some PL, e.g. R; * * *; 5 5 5; MorpheusDSL; MorpheusDSL semantics 21
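The rewrite slides appear to show a single scalar multiplication being turned into multiplications on the underlying pieces. A rough sketch of such a rule, assuming a two-table normalized matrix held as base tables plus a foreign-key vector, might look like the following. `NormalizedMatrix`, `scale`, and `rewrite_scalar_mul` are hypothetical names and not MorpheusDSL's actual AST nodes.

```python
# Sketch under stated assumptions; not the MorpheusDSL implementation.
from dataclasses import dataclass
from typing import List

Matrix = List[List[float]]

@dataclass
class NormalizedMatrix:
    """Hypothetical normalized matrix: base tables S and R plus join keys."""
    S: Matrix
    fk: List[int]      # row i of S joins with row fk[i] of R
    R: Matrix

def scale(M: Matrix, x: float) -> Matrix:
    """Element-wise scalar multiplication on a plain matrix."""
    return [[x * v for v in row] for row in M]

def rewrite_scalar_mul(nm: NormalizedMatrix, x: float) -> NormalizedMatrix:
    """Rewrite x * T into scalar multiplications on the base tables only."""
    return NormalizedMatrix(scale(nm.S, x), nm.fk, scale(nm.R, x))

def materialize(nm: NormalizedMatrix) -> Matrix:
    """Build the joined table, used here only to check the rewrite."""
    return [s_row + nm.R[k] for s_row, k in zip(nm.S, nm.fk)]

nm = NormalizedMatrix(S=[[1.0], [2.0], [3.0]], fk=[0, 1, 0], R=[[10.0], [20.0]])
assert materialize(rewrite_scalar_mul(nm, 5.0)) == scale(materialize(nm), 5.0)
```

The rule never touches the joined table; the final assertion only confirms that the rewritten result matches scaling the materialized table.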

MatrixLib: Unified Matrix interoperability abstraction 22

MatrixLib allows 1st class Matrix interop: Invoke MatrixLib's multiplication with 5. You mean %*% ? Sure thing! You mean __mul__ ? Sure thing! 23

MatrixLib allows 1st class Matrix interop: MatrixLib; Invoke MatrixLib's multiplication with 5; Matrix is sparse in ? Yes / No; Call Foo(); Call Bar() 24
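One way to picture a unified matrix interop layer is a single entry point that callers use, with the host-specific spelling of the operation hidden behind it. The sketch below is plain Python and does not use GraalVM; `DenseMatrix`, `TripletMatrix`, and `scalar_multiply` are hypothetical stand-ins, not MatrixLib's real messages.

```python
# Sketch only: every class and function name here is hypothetical.

class DenseMatrix:
    """Stand-in for one host's matrix type; scaling is spelled `*`."""
    def __init__(self, rows):
        self.rows = rows
    def __mul__(self, x):
        return DenseMatrix([[v * x for v in row] for row in self.rows])
    def to_rows(self):
        return self.rows

class TripletMatrix:
    """Stand-in for another host's sparse type; scaling is spelled `.times()`."""
    def __init__(self, shape, entries):
        self.shape = shape
        self.entries = dict(entries)       # {(row, col): nonzero value}
    def times(self, x):
        scaled = {k: v * x for k, v in self.entries.items()}
        return TripletMatrix(self.shape, scaled)
    def to_rows(self):
        n, m = self.shape
        return [[self.entries.get((i, j), 0.0) for j in range(m)]
                for i in range(n)]

def scalar_multiply(matrix, x):
    """Unified entry point: callers never name the host-specific operator."""
    if isinstance(matrix, TripletMatrix):
        return matrix.times(x)             # host-specific knowledge lives here
    return matrix * x

dense = DenseMatrix([[1.0, 0.0], [0.0, 2.0]])
sparse = TripletMatrix((2, 2), {(0, 0): 1.0, (1, 1): 2.0})
assert scalar_multiply(dense, 5).to_rows() == scalar_multiply(sparse, 5).to_rows()
```

A rewrite rule written against `scalar_multiply` would not need to change when a new matrix type is added; only the dispatch function would.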

Built-in interop; In-PL invocation; MatrixLib interop; MorpheusDSL; MatrixLib; PL Domain knowledge; Normalized Matrix; Op( ) Op( ) Op( ) Op( ) 25

Built-in interop; In-PL invocation; MatrixLib interop; MorpheusDSL; MatrixLib; PL Domain knowledge; Normalized Matrix; Op( ) Op( ) Op( ) Op( ) 26

Agenda: Introduction, GraalVM, Architecture, Evaluation, Future Directions 27

Evaluation: Algorithms (Logistic Regression, K-Means Clustering, Linear Regression, GNMF Clustering); Baselines in R (Morpheus, Materialized); Setting (R language, Movies dataset, 3-table join) 28

Model Training Time (lower is better): bar chart of Training Time (seconds) by algorithm for Morpheus, Trinity, and Materialized. LogReg: 8.56x speed-up, 8.13x speed-up, 165 sec. Kmeans: 5.05x speed-up, 4.98x speed-up, 358 sec 29

Model Training Time (lower is better): bar chart of Training Time (seconds) by algorithm for Morpheus, Trinity, and Materialized. LinReg: 7.55x speed-up, 7.49x speed-up, 132 sec. GNMF: 0.79x slow-down, 0.88x slow-down, 256 sec 30

Aside: When is Factorized ML slower? 1. Unexpected variance in cost of LA operators 2. Minimal redundancy introduced by the join 31
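The second reason can be illustrated with a deliberately simplified cost model that counts only the scalar multiplications in one matrix-vector product over a two-table join. The model, the function names, and the input sizes are assumptions for illustration; they are not measurements or formulas from the talk, and the model ignores the per-operator overheads named in the first reason.

```python
# Simplified multiply-count model; an assumption, not the talk's analysis.

def materialized_cost(n_s, d_s, n_r, d_r):
    """Multiplications for T @ w, where T has n_s rows and d_s + d_r columns."""
    return n_s * (d_s + d_r)

def factorized_cost(n_s, d_s, n_r, d_r):
    """Multiplications for S @ w_S plus R @ w_R on the base tables."""
    return n_s * d_s + n_r * d_r

def predicted_speedup(n_s, d_s, n_r, d_r):
    """Ratio above 1 means the factorized form does less arithmetic."""
    return materialized_cost(n_s, d_s, n_r, d_r) / factorized_cost(n_s, d_s, n_r, d_r)

# Many S rows per R row and wide R: the join repeats a lot of data.
high_redundancy = predicted_speedup(n_s=100_000, d_s=20, n_r=10_000, d_r=100)

# One S row per R row: the join repeats nothing, so nothing is saved.
no_redundancy = predicted_speedup(n_s=10_000, d_s=20, n_r=10_000, d_r=100)

assert high_redundancy == 4.0
assert no_redundancy == 1.0
```

When the predicted ratio is close to 1, any extra bookkeeping in the factorized path can push the real running time above the materialized baseline.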

Model Training Time: bar chart of Training Time (seconds) for LogReg, Kmeans, LinReg, and GNMF under Morpheus, Trinity, and Materialized 32

Evaluation Summary: Trinity (multi-PL) achieves comparable speed-ups to Morpheus (single PL). Trinity's relative performance difference with Morpheus is small, no larger than 20% 33

Takeaways: Trinity is a polyglot framework for Factorized ML. Solves a developability challenge for today's polyglot Data Science landscape. Performance comparable to single-language Morpheus implementations 34

Agenda: Introduction, GraalVM, Architecture, Evaluation, Future Directions 35

Where to go next? 1. Remove dependency on GraalVM 2. Automatic PL-specific fine-tuning 3. User Study on debuggability and productivity 36

VLDB21 Industry Track: Towards A Polyglot Framework for Factorized ML. https://adalabucsd.github.io/morpheus.html 37

Backup Slides 38

Where to go next? Trinity + automatic discovery of PL-aware perf knowledge (Matrix is sparse in ? Yes / No; Call Bar(); Call Foo()); Trinity as a transpiler 39

Where to go next? User Study: productivity and debuggability 40

Options: FFIs (not PL-general, not efficient); Compiler (hard to extend); Shared Runtime (challenge: how many PLs are supported) 41

JS and polyglot (R+Python) Evaluation: Algorithm (Linear Regression); Setting (synthetic 2-table join dataset); Baselines (Materialized); TR = 10, FR = 5, ??= 104, ds= 20 42

JS and polyglot (R+Python) Evaluation: Algorithm (Linear Regression); Setting (synthetic 2-table join dataset); Baselines (Materialized); TR = 10, TR = 5, ??= 104, ds= 20 43

Aside: GNMF analysis 44

Aside: GNMF analysis 45

Aside: GNMF analysis 46

Aside: GNMF analysis 47

Aside: GNMF analysis 48

Aside: GNMF analysis 49

Aside: GNMF analysis: In GraalVM's R, the addition operator had a higher cost than anticipated. 50