Predicting Replicated Database Scalability in Multi-Master Systems

Explore the scalability of replicated databases in multi-master systems, discussing the potential for achieving high throughput by adding replicas, the impact of workload scaling on replicated databases, and the differences between read and update transactions in maintaining database state consistency. The study delves into the architecture of multi-master and single-master setups, the role of load balancers, and the considerations for introducing additional replicas. It also touches on upcoming topics such as queuing models and experimental validations in the context of servicing demands and transaction requirements within standalone database management systems.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

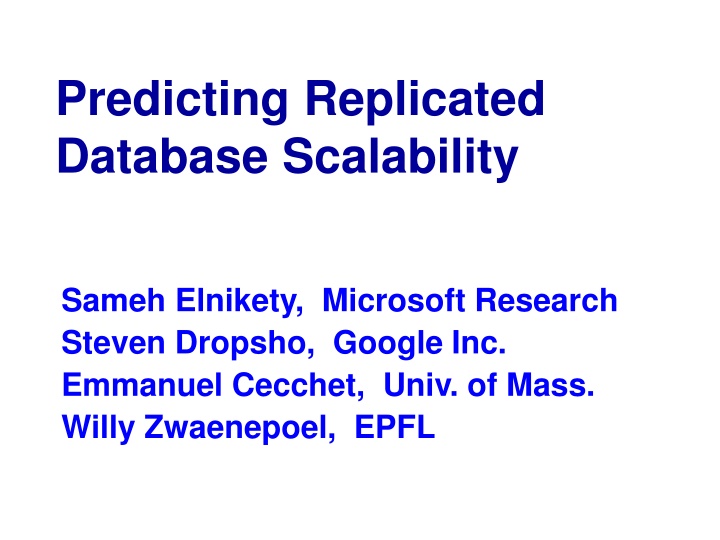

Predicting Replicated Database Scalability Sameh Elnikety, Microsoft Research Steven Dropsho, Google Inc. Emmanuel Cecchet, Univ. of Mass. Willy Zwaenepoel, EPFL

Motivation Environment E-commerce website DB throughput is 500 tps Is 5000 tps achievable? Yes: use 10 replicas Yes: use 16 replicas No: faster machines needed How tx workload scales on replicated db? Single DBMS 2

Multi-Master Single-Master Master Replica 1 Slave 1 Replica 2 Slave 2 Replica 3 3

Background: Multi-Master Replica 1 Standalone DBMS Replica 2 Load Balancer Replica 3 4

Read Tx Read tx does not change DB state Replica 1 Replica 2 T Load Balancer Replica 3 5

Update Tx Update tx changes DB state Replica 1 Cert Replica 2 T Load Balancer ws ws ws ws Replica 3 6

Additional Replica Replica 1 Cert Replica 2 T Load Balancer ws ws ws Replica 3 Replica 3 Replica 4 7

Coming Up Standalone DBMS Service demands Multi-master system Service demands Queuing model Experimental validation 8

Standalone DBMS Required readonly tx: R update tx: W Transaction load readonly tx: R update tx: W / (1 - A1) Single DBMS Abort probability is A1 Submit W / (1 - A1) update tx Commited tx: W Aborted tx: W A1 / (1- A1) 9

Standalone DBMS Required readonly tx: R update tx: W Transaction load readonly tx: R update tx: W / (1 - A1) Single DBMS W = R rc + (1) Load wc (1 ) A 1 10

Service Demand W = R rc + (1) Load wc (1 ) A 1 Pw = Pr rc + (1) D wc (1 ) A 1 11

Multi-Master with N Replicas Required (whole system of N replicas) Readonly tx: N R Update tx: N W Transaction load per replica Readonly tx: R Update tx: W / (1 - AN) Writeset: W (N - 1) W ( ) N = Load R rc + + ( 1) wc W N ws MM (1 ) A N 12

MM Service Demand W ( ) N = Load R rc + + ( 1) wc W N ws MM (1 ) A N Pw + 1) D N = Pr rc + ( ( ) N wc Pw ws MM (1 ) A N Explosive cost! 13

Compare: Standalone vs MM Standalone: Pw = Pr rc + (1) D wc (1 ) A 1 Multi-Master: Pw + 1) D N = Pr rc + ( ( ) N wc Pw ws MM (1 ) A N Explosive cost! 14

Readonly Workload Standalone: Pw = Pr rc + (1) D wc (1 ) A 1 Multi-Master: Pw + 1) D N = Pr rc + ( ( ) N wc Pw ws MM (1 ) A N Explosive cost! 15

Update Workload Standalone: Pw = Pr rc + (1) D wc (1 ) A 1 Multi-Master: Pw + 1) D N = Pr rc + ( ( ) N wc Pw ws MM (1 ) A N Explosive cost! 16

Closed-Loop Queuing Model Load balancer & network delay LB Cert Replica i Pw LB CPU Cert ... ... LB Cert Disk Certifier delay N replicas TT TT Think time ... TT 17

Mean Value Analysis (MVA) Standard algorithm Iterates over the number of clients Inputs: Number of clients Service demand at service centers Delay time at delay centers Outputs: Response time Throughput 18

Using the Model Load balancer & network delay LB Cert Replica i Pw LB CPU Cert ... ... LB Cert Disk Certifier delay N replicas TT TT Think time ... TT 19

Standalone Profiling (Offline) Copy of database Log all txs, (Pr : Pw) Python script replays txs Readonly (rc) Updates (wc) Writesets Instrument db with triggers Play txs to log writesets Play writesets (ws) 20

MM Service Demand Pw + 1) D N = Pr rc + ( ( ) N wc Pw ws MM (1 ) A N Explosive cost! 21

Abort Probability Predicting abort probability is hard Single-master No prediction needed Measure offline on master Multi-master Approximate using ( ) CW N N = (1) L (1 ) (1 ) A A 1 N Sensitivity analysis in the paper 22

Using the Model 1 ms Load balancer & network delay LB Cert Replica i Pw LB CPU Cert ... ... LB Cert Disk Certifier delay N replicas TT 1.5 fsync() TT Think time # clients, think time ... TT 23

Experimental Validation Compare Measured performance vs model predictions Environment Linux cluster running PostgreSQL TPC-W workload Browsing (5% update txs) Shopping (20% update txs) Ordering (50% update txs) RUBiS workload Browsing (0% update txs) Bidding (20% update txs) 24

Multi-Master TPC-W Performance Throughput Response time 25

6.7 X 15% Ordering, 50% u 15.7 X Browsing, 5% u 26

Multi-Master RUBiS Performance Throughput Response time 27

16 X Browsing, 0% u bidding, 20% u 3.4 X 28

Model Assumptions Database system Snapshot isolation No hotspots Low abort rates Server system Scalable server (no thrashing) Queuing model & MVA Exponential distribution for service demands 29

Checkout the Paper Models Single-Master Multi-Master Experimental results TPC-W RUBiS Sensitivity analysis Abort rates Certifier delay 30

Related Work Urgaonkar, Pacifici, Shenoy, Spreitzer, Tantawi. An analytical model for multi-tier internet services and its applications. Sigmetrics 2005. 31

Conclusions Derived an analytical model Predicts workload scalability Implemented replicated systems Multi-master Single-master Experimental validation TPC-W RUBiS Throughput predictions match within 15% 32

Danke Schn! Questions? Predicting Replicated Database Scalability 33