Quantum Kernel Learning for Bosonic Modes and Universal Quantum Computing

Explore the intersection of quantum computing and machine learning with a focus on quantum kernel learning for bosonic modes. Discover the potential of universal continuous-variable quantum computing and Kerr nonlinearity in encoding operations. Dive into the challenges and opportunities of utilizing quantum systems for advanced computational tasks.


Presentation Transcript

Quantum Kernel Learning for Bosonic Modes

Carolyn E. Wood, The University of Queensland

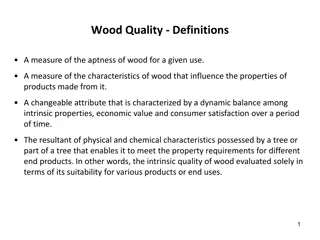

Quantum Kernel Based ML

Current open questions:
- When do quantum computers become necessary or useful for ML tasks?
- How do we find useful kernels for quantum machine learning?
- Is this related to computational complexity? Quantum utility is reached when a problem is too hard for a classical computer.

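Quantum Kernel Based ML

A feature map $\phi$ takes the data $\mathbf{x}$ into a multi-dimensional feature space. Quantum states also live in high-dimensional (Hilbert) spaces. The kernel $\kappa(\mathbf{x}, \mathbf{x}')$ is built from the inner product (overlap) of the encoded quantum states:

$$\kappa(\mathbf{x}, \mathbf{x}') = \left|\langle \psi(\mathbf{x}') | \psi(\mathbf{x}) \rangle\right|^2$$

Schuld, M., arXiv:2101.11020 (2021)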
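As a concrete check of the kernel definition, coherent states have a closed-form overlap, $|\langle\beta|\alpha\rangle|^2 = e^{-|\alpha-\beta|^2}$. The sketch below is a minimal illustration, not the talk's code: it computes the kernel numerically in a truncated Fock basis with NumPy. The cutoff of 30 and the helper names `coherent_state` and `fidelity_kernel` are our own hypothetical choices.

```python
import numpy as np
from math import factorial

CUTOFF = 30  # hypothetical Fock-space cutoff; ample for |alpha| < 1


def coherent_state(alpha, cutoff=CUTOFF):
    """Fock amplitudes <k|alpha> = exp(-|alpha|^2/2) alpha^k / sqrt(k!) for k < cutoff."""
    k = np.arange(cutoff)
    sqrt_fact = np.sqrt(np.array([float(factorial(j)) for j in range(cutoff)]))
    return np.exp(-abs(alpha) ** 2 / 2) * alpha ** k / sqrt_fact


def fidelity_kernel(psi, phi):
    """Quantum kernel as the squared overlap |<phi|psi>|^2 of two encoded states."""
    return abs(np.vdot(phi, psi)) ** 2


alpha, beta = 0.3 + 0.2j, -0.1 + 0.5j
numeric = fidelity_kernel(coherent_state(alpha), coherent_state(beta))
analytic = np.exp(-abs(alpha - beta) ** 2)   # |<beta|alpha>|^2 for coherent states
print(numeric, analytic)
```

The two printed numbers agree to within the (negligible) truncation error, and the kernel of any state with itself is 1.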

Discrete vs Continuous Variables

Discrete case (qubits): measuring a particle's spin gives one of two results, up or down ($|0\rangle$ or $|1\rangle$).

Continuous case (bosonic modes): measuring a particle's position can yield infinitely many results (each with an uncertainty).

Continuous variables are hard to simulate classically because their state space is infinite-dimensional.
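The infinite dimension shows up as soon as a mode is put on a computer: the Fock basis must be cut off, and the truncated operators no longer satisfy the bosonic commutation relation $[\hat a, \hat a^\dagger] = 1$. A small NumPy sketch (our own illustration, with a hypothetical cutoff of 5):

```python
import numpy as np


def annihilation(cutoff):
    """Truncated annihilation operator with a|k> = sqrt(k)|k-1> for k < cutoff."""
    return np.diag(np.sqrt(np.arange(1, cutoff)), k=1)


N = 5
a = annihilation(N)
adag = a.conj().T
commutator = a @ adag - adag @ a   # equals the identity for an untruncated mode
print(np.diag(commutator))
```

Every diagonal entry of the commutator is 1 except the last, which is $-(N-1)$, so a truncated simulation is only trustworthy while the state keeps negligible weight near the cutoff.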

Universal CV Quantum Computing

An algebra for universal simulation of a single bosonic mode: $\{\hat x, \hat p, \hat x^2, \hat p^2, (\hat a^\dagger \hat a)^2\}$.

Universal: the computer can implement any unitary operation on the desired variables.
- $\hat x, \hat p$ generate displacements
- $\hat x^2, \hat p^2$ generate squeezing
- $(\hat a^\dagger \hat a)^2$ is the Kerr nonlinearity (its strength depends on photon number)

Lloyd, S. and Braunstein, S.L. (1999), PRL 82, 1784
Bartlett, S.D. and Sanders, B.C. (2002), PRA 65, 042304
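The Kerr generator is diagonal in the photon-number basis, so its unitary $e^{-i\kappa\hat n^2}$ (with $\hat n = \hat a^\dagger\hat a$) only attaches a phase that grows with $n^2$. A sketch under our own assumptions: NumPy, a cutoff of 40, and a hypothetical strength parameter `kappa` in units where the evolution time is 1.

```python
import numpy as np
from math import factorial

CUTOFF = 40                      # hypothetical Fock cutoff, ample for |alpha| = 1.5
n = np.arange(CUTOFF)            # photon numbers 0..CUTOFF-1


def coherent_state(alpha):
    """Truncated Fock amplitudes of the coherent state |alpha>."""
    sqrt_fact = np.sqrt(np.array([float(factorial(k)) for k in range(CUTOFF)]))
    return np.exp(-abs(alpha) ** 2 / 2) * alpha ** n / sqrt_fact


def kerr_evolve(psi, kappa):
    """Apply exp(-i kappa n^2): a photon-number-dependent phase, diagonal in the Fock basis."""
    return np.exp(-1j * kappa * n ** 2) * psi


psi0 = coherent_state(1.5)
psi1 = kerr_evolve(psi0, kappa=0.3)
print(np.allclose(abs(psi1) ** 2, abs(psi0) ** 2))   # photon statistics unchanged
print(abs(np.vdot(psi0, psi1)))                     # but the state itself has changed
```

The photon-number distribution (and hence the mean photon number, 2.25 here) is untouched, while the overlap with the initial coherent state drops well below 1: the nonlinearity acts purely through photon-number-dependent phases.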

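Kerr NL Encoding

Wigner negativity → more quantum → too complex for a classical computer?

Interaction Hamiltonian for a two-mode coherent state with cross-Kerr nonlinearity, where $\hat n_a = \hat a^\dagger \hat a$ and $\hat n_b = \hat b^\dagger \hat b$ (unit evolution time):

$$\hat H_I = \hbar\left(x_1 \hat n_a^2 + 2x_1x_2\,\hat n_a \hat n_b + x_2 \hat n_b^2\right)$$

Two-mode coherent state:

$$|\psi(\mathbf x)\rangle = e^{-i\left(x_1 \hat n_a^2 + x_2 \hat n_b^2 + 2x_1x_2\,\hat n_a \hat n_b\right)}|\alpha_0, \beta_0\rangle$$

Chabaud, U. et al., Witnessing Wigner Negativity, Quantum 5, 471 (2021).
Henderson, L.J., Goel, R. & Shrapnel, S., arXiv:2401.05647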
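Because both Kerr terms are diagonal in the two-mode Fock basis, the encoded state can be written down directly as a matrix of amplitudes $c_{mn}$. A minimal NumPy sketch, assuming the exponent reads $x_1\hat n_a^2 + x_2\hat n_b^2 + 2x_1x_2\,\hat n_a\hat n_b$ (our reading of the slide's equation), real $\alpha_0 = \beta_0 = 1.0$, and a cutoff of 11 levels (m, n = 0..10); the function name `encode` is hypothetical.

```python
import numpy as np
from math import factorial


def encode(x, alpha0=1.0, beta0=1.0, cutoff=11):
    """Amplitudes c[m, n] of exp(-i(x1 n_a^2 + x2 n_b^2 + 2 x1 x2 n_a n_b)) |alpha0, beta0>,
    truncated to photon numbers m, n < cutoff."""
    x1, x2 = x
    m = np.arange(cutoff)[:, None]          # photon number in mode a (rows)
    n = np.arange(cutoff)[None, :]          # photon number in mode b (columns)
    fact = np.array([float(factorial(k)) for k in range(cutoff)])
    coherent = (np.exp(-(abs(alpha0) ** 2 + abs(beta0) ** 2) / 2)
                * alpha0 ** m * beta0 ** n / np.sqrt(fact[:, None] * fact[None, :]))
    phase = x1 * m ** 2 + x2 * n ** 2 + 2 * x1 * x2 * m * n   # Kerr + cross-Kerr, diagonal
    return coherent * np.exp(-1j * phase)


c = encode((0.3, 0.7))
print(np.sum(abs(c) ** 2))   # close to 1; the small deficit is the truncated Poisson tail
```

The data only enter through phases, so the photon-number statistics are the same for every $\mathbf x$; all the information sits in the interference between Fock components.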

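Kerr Kernel

Two-mode coherent state (number basis):

$$|\psi(\mathbf x)\rangle = e^{-(|\alpha_0|^2+|\beta_0|^2)/2}\sum_{m,n=0}^{\infty}\frac{\alpha_0^m\,\beta_0^n}{\sqrt{m!\,n!}}\,e^{-i\varphi_{m,n}(\mathbf x)}\,|m,n\rangle, \qquad \varphi_{m,n}(\mathbf x) = x_1 m^2 + x_2 n^2 + 2x_1x_2\,mn$$

Kernel from the inner product:

$$\kappa(\mathbf x, \mathbf x') = \left|\langle\psi(\mathbf x')|\psi(\mathbf x)\rangle\right|^2 = \left| e^{-(|\alpha_0|^2+|\beta_0|^2)}\sum_{m,n=0}^{\infty}\frac{|\alpha_0|^{2m}\,|\beta_0|^{2n}}{m!\,n!}\,e^{-i\left(\varphi_{m,n}(\mathbf x)-\varphi_{m,n}(\mathbf x')\right)}\right|^2$$

For $\alpha_0 = \beta_0 = \alpha$ the prefactor becomes $e^{-2|\alpha|^2}$ and the weights become $|\alpha|^{2(m+n)}/(m!\,n!)$.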
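Once the sums are truncated, the kernel can be evaluated directly from the weighted sum of phases. A sketch, assuming $\alpha_0 = \beta_0 = \alpha = 1.0$, the cutoff m, n ≤ 10 used in the implementation, and our reading of the phase $\varphi_{m,n}(\mathbf x) = x_1 m^2 + x_2 n^2 + 2x_1x_2\,mn$; `kerr_kernel` is a hypothetical name.

```python
import numpy as np
from math import factorial


def kerr_kernel(x, xp, alpha=1.0, cutoff=11):
    """Truncated Kerr kernel with alpha0 = beta0 = alpha:
    |exp(-2|alpha|^2) sum_{m,n} |alpha|^{2(m+n)} / (m! n!) exp(-i(phi_mn(x) - phi_mn(x')))|^2."""
    m = np.arange(cutoff)[:, None]
    n = np.arange(cutoff)[None, :]
    fact = np.array([float(factorial(k)) for k in range(cutoff)])
    weights = (np.exp(-2 * abs(alpha) ** 2) * abs(alpha) ** (2 * (m + n))
               / (fact[:, None] * fact[None, :]))

    def phi(v):
        v1, v2 = v
        return v1 * m ** 2 + v2 * n ** 2 + 2 * v1 * v2 * m * n

    return abs(np.sum(weights * np.exp(-1j * (phi(x) - phi(xp))))) ** 2


x, xp = np.array([0.2, 0.9]), np.array([0.6, 0.1])
print(kerr_kernel(x, x), kerr_kernel(x, xp))
```

Truncation drops about $10^{-8}$ of the Poisson weight per mode, so $\kappa(\mathbf x, \mathbf x)$ comes out as 1 within a few parts in $10^{8}$. The kernel is symmetric and, being a squared overlap of (truncated) state vectors, gives a positive semidefinite Gram matrix.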

Today: can an ML algorithm learn with a CV quantum kernel, and how does it compare with standard classical kernels?

ML Algorithm and Task

Support vector machine (SVM): binary classification task

Chatterjee, R. & Yu, T., Quant. Info. & Comp. 17 (15&16), 1292 (2017), arXiv:1612.03713

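Data & Encoding

1. Data: generate pairs of random real numbers on the unit square, $\mathbf x = (x_1, x_2) \in [0,1]^2$.
2. Encoding: map the classical data into the quantum state $|\psi(\mathbf x)\rangle$.
3. Measurement: the expectation value of the displaced parity operator $\hat\Pi_{\mu\nu} = \hat D(\mu,\nu)\,\hat\Pi\,\hat D^\dagger(\mu,\nu)$, $\langle\psi(\mathbf x)|\hat\Pi_{\mu\nu}|\psi(\mathbf x)\rangle$, gives the labels.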

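Labelling Data

Data are labelled according to the sign of the displaced parity measurement:

$$y(\mathbf x) = \begin{cases} +1, & \langle\psi(\mathbf x)|\hat\Pi_{\mu\nu}|\psi(\mathbf x)\rangle > 0 \\ -1, & \langle\psi(\mathbf x)|\hat\Pi_{\mu\nu}|\psi(\mathbf x)\rangle < 0 \end{cases}$$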

[Figure: four panels, munu1 to munu4, one per choice of displacements (μ, ν)]
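The data, encoding and labelling steps can be combined into a short simulation. This is a sketch under our own assumptions, not the authors' code: the encoding phase is our reading of the slides, the displacement operators come from `scipy.linalg.expm` in a Fock basis truncated at a hypothetical cutoff of 30, and we use the fourth (μ, ν) pair from the table of displacements. A point whose expectation value is exactly zero would be labelled −1 here; the slide does not say how ties are handled.

```python
import numpy as np
from math import factorial
from scipy.linalg import expm

CUTOFF = 30  # hypothetical cutoff, larger than the kernel's, so the displacements stay accurate


def encode(x, alpha0=1.0, beta0=1.0, cutoff=CUTOFF):
    """Amplitudes c[m, n] of the Kerr-encoded two-mode coherent state."""
    x1, x2 = x
    m = np.arange(cutoff)[:, None]
    n = np.arange(cutoff)[None, :]
    fact = np.array([float(factorial(k)) for k in range(cutoff)])
    coh = (np.exp(-(abs(alpha0) ** 2 + abs(beta0) ** 2) / 2)
           * alpha0 ** m * beta0 ** n / np.sqrt(fact[:, None] * fact[None, :]))
    return coh * np.exp(-1j * (x1 * m ** 2 + x2 * n ** 2 + 2 * x1 * x2 * m * n))


def displacement(z, cutoff=CUTOFF):
    """Truncated single-mode displacement D(z) = exp(z a^dagger - z* a)."""
    a = np.diag(np.sqrt(np.arange(1, cutoff)), k=1)
    return expm(z * a.conj().T - np.conj(z) * a)


def displaced_parity(x, mu, nu, cutoff=CUTOFF):
    """<psi(x)| D(mu, nu) Pi D(mu, nu)^dagger |psi(x)>, with Pi = (-1)^(n_a + n_b)."""
    c = encode(x, cutoff=cutoff)
    Da, Db = displacement(mu, cutoff), displacement(nu, cutoff)
    phi = Da.conj().T @ c @ Db.conj()              # (D_a^dagger x D_b^dagger) acting on c[m, n]
    k = np.arange(cutoff)
    parity = (-1.0) ** (k[:, None] + k[None, :])
    return float(np.sum(parity * abs(phi) ** 2))


def label(f):
    """+1 where the displaced-parity expectation is positive, -1 otherwise."""
    return np.where(f > 0, 1, -1)


rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(20, 2))          # random points on the unit square
mu, nu = 0.400 + 0.604j, 0.249 + 0.766j          # fourth (mu, nu) pair from the table
f = np.array([displaced_parity(x, mu, nu) for x in X])
y = label(f)
print(y)
```

A useful sanity check: at $\mathbf x = (0, 0)$ the state is the plain coherent state $|1, 1\rangle$, and the expectation value reduces to $e^{-2|1-\mu|^2 - 2|1-\nu|^2}$.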

Results: Coherent Kerr Kernel SVM

Training set: 3500 labelled data points. scikit-learn SVC with a custom kernel.

[Figure: confusion matrix, true label (+1, −1) vs predicted label (+1, −1)]

| Setting | Kerr Acc. | Kerr Prec. | RBF (tuned) Acc. | RBF (tuned) Prec. |
|---|---|---|---|---|
| munu1 | 0.9693 | 0.9762 | 0.9533 | 0.9623 |
| munu2 | 0.9520 | 0.9680 | 0.9240 | 0.9502 |
| munu3 | 0.9800 | 0.9801 | 0.9760 | 0.9731 |
| munu4 | 0.976 | 0.9829 | 0.9753 | 0.9820 |
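The table reports accuracy and precision. As a reminder of how both follow from the confusion matrix, here is a scikit-learn sketch on a hypothetical eight-point example (not data from the talk), treating +1 as the positive class, which is our assumption:

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix, precision_score

# Hypothetical labels for eight points (not data from the talk)
y_true = np.array([+1, +1, -1, -1, +1, -1, +1, -1])
y_pred = np.array([+1, -1, -1, +1, +1, -1, +1, -1])

# Rows: true label (-1, +1); columns: predicted label (-1, +1)
cm = confusion_matrix(y_true, y_pred, labels=[-1, 1])
(tn, fp), (fn, tp) = cm

accuracy = accuracy_score(y_true, y_pred)                  # (TP + TN) / total
precision = precision_score(y_true, y_pred, pos_label=1)   # TP / (TP + FP)
print(cm, accuracy, precision)
```

Precision only looks at points predicted +1, so it can differ from accuracy when the errors are unevenly split between false positives and false negatives.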

Results: Classical Kernel SVM

Training set: 3500 labelled data points. scikit-learn SVC with a radial basis function (RBF) kernel, comparing default (a) and tuned (b) hyperparameters C and gamma.

[Figure: confusion matrix (tuned), true label (−1, +1) vs predicted label (−1, +1)]

| Hyperparameters | C | gamma | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|---|---|
| Not tuned | 1 | scale | 0.8287 | 0.8372 | 0.8976 | 0.8663 |
| Tuned | 1000 | 100 | 0.9533 | 0.9623 | 0.9623 | 0.9623 |
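The untuned row corresponds to scikit-learn's `SVC` defaults (`C=1.0`, `gamma="scale"`). One common way to tune `C` and `gamma` is a cross-validated grid search; the slide does not say which search was used, so the grid, the 5-fold cross-validation and the stand-in labels below are hypothetical:

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(200, 2))
# Hypothetical stand-in labels with a checkerboard-like boundary
y = np.where(np.sin(6 * X[:, 0]) * np.cos(6 * X[:, 1]) > 0, 1, -1)

param_grid = {"C": [1, 10, 100, 1000], "gamma": ["scale", 1, 10, 100]}
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, search.best_score_)
```

Large `gamma` lets the RBF kernel resolve fine structure in the decision boundary, and large `C` penalises misclassified training points more heavily; both typically need raising for intricate label patterns.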


Squeezed States?

[Figure: panels for positive squeezing and negative squeezing]

The encoding differs depending on whether the squeezing is positive or negative. Much harder computationally (about 12 days for the 3500-point set).

Summary + Next?

Can the Kerr kernel learn? The Kerr kernel outperforms the classical kernel out of the box.

Next:
- Include dissipation/photon loss
- Look at experiments, e.g. superconducting circuits

arXiv:2404.01787, with Sally Shrapnel and Gerard Milburn
c.wood2@uq.edu.au
carolyn-e-wood

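Measurement

Random displacements in phase space:

$$\hat D(\mu,\nu) = e^{\mu\hat a^\dagger - \mu^*\hat a}\,e^{\nu\hat b^\dagger - \nu^*\hat b}, \qquad \mu = \mu_R + i\mu_I, \quad \nu = \nu_R + i\nu_I$$

Parity operator (eigenvalues ±1):

$$\hat\Pi = e^{i\pi\left(\hat a^\dagger\hat a + \hat b^\dagger\hat b\right)}$$

Each is restricted to the unit disk to minimise the distance between data points in feature space.

Full measurement:

$$\langle\psi(\mathbf x)|\hat D(\mu,\nu)\,\hat\Pi\,\hat D^\dagger(\mu,\nu)|\psi(\mathbf x)\rangle$$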
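The displaced parity is, up to a factor of π/2, the Wigner function at the displacement point, $W(\mu) = \frac{2}{\pi}\langle\hat D(\mu)\,\hat\Pi\,\hat D^\dagger(\mu)\rangle$, which ties this measurement to the Wigner negativity mentioned earlier. A single-mode NumPy/SciPy sketch (our own illustration with a hypothetical cutoff of 40, not the two-mode setup of the talk): for a coherent state the values are always positive, while a Kerr-evolved state with phase $e^{-i(\pi/2)\hat n^2}$ produces negative values.

```python
import numpy as np
from math import factorial
from scipy.linalg import expm

CUTOFF = 40                                        # hypothetical Fock cutoff
k = np.arange(CUTOFF)
a = np.diag(np.sqrt(np.arange(1, CUTOFF)), k=1)    # truncated annihilation operator
parity = np.diag((-1.0) ** k)                      # Pi = exp(i pi a^dagger a)


def coherent_state(alpha):
    sqrt_fact = np.sqrt(np.array([float(factorial(j)) for j in range(CUTOFF)]))
    return np.exp(-abs(alpha) ** 2 / 2) * alpha ** k / sqrt_fact


def displaced_parity(psi, mu):
    """<psi| D(mu) Pi D(mu)^dagger |psi>, equal to (pi/2) W(mu)."""
    D = expm(mu * a.conj().T - np.conj(mu) * a)
    phi = D.conj().T @ psi
    return float(np.real(np.vdot(phi, parity @ phi)))


coh = coherent_state(2.0)
cat = np.exp(-1j * (np.pi / 2) * k ** 2) * coh     # Kerr evolution exp(-i (pi/2) n^2)

mus = 1j * np.linspace(-1.0, 1.0, 81)               # displacements along the imaginary axis
coh_vals = np.array([displaced_parity(coh, mu) for mu in mus])
cat_vals = np.array([displaced_parity(cat, mu) for mu in mus])
print(coh_vals.min(), cat_vals.min())
```

For the coherent state the values follow $e^{-2|\alpha-\mu|^2}$; the Kerr-evolved state is a superposition of $|\alpha\rangle$ and $|-\alpha\rangle$ whose interference fringes dip well below zero near the origin.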

Varying μ and ν (previous slides)

| Setting | μ | ν |
|---|---|---|
| 1 | 0.468 − 0.401i | 0.645 + 0.419i |
| 2 | 0.944 + 1.154i | 0.487 − 0.972i |
| 3 | 0.735 + 0.161i | 1.024 + 0.031i |
| 4 | 0.400 + 0.604i | 0.249 + 0.766i |

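Support Vector Machine

Essentially a Lagrange optimization process: maximise

$$L(\boldsymbol\alpha) = \sum_{i=1}^{N}\alpha_i - \frac{1}{2}\sum_{i,j=1}^{N}\alpha_i\alpha_j\,y_i y_j\,\kappa(\mathbf x_i, \mathbf x_j)$$

over the Lagrange multipliers $\alpha_i$, subject to $0 \le \alpha_i \le C$ and $\sum_i \alpha_i y_i = 0$.

1. Input training and test data sets: classical data + binary labels.
2. Custom kernel: our Kerr kernel.
3. Compare accuracy against a classical kernel.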
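scikit-learn's `SVC` solves this dual problem, so the quantities on the slide can be read back from a fitted model: `dual_coef_` holds $y_i\alpha_i$ for the support vectors, and the decision function is $\sum_i \alpha_i y_i\,\kappa(\mathbf x_i, \mathbf x) + b$. A sketch with a precomputed kernel matrix; the RBF stand-in kernel, the toy labels and `C = 10` are hypothetical, and any valid kernel matrix (such as one built from the Kerr kernel) could be passed instead:

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(2)
X = rng.uniform(0.0, 1.0, size=(60, 2))
y = np.where(X[:, 0] + X[:, 1] > 1.0, 1, -1)      # hypothetical toy labels


def gram(A, B, gamma=5.0):
    """Stand-in RBF kernel matrix; any positive semidefinite kernel could be used instead."""
    sq_dist = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dist)


C = 10.0
clf = SVC(kernel="precomputed", C=C).fit(gram(X, X), y)

sv = clf.support_                      # indices i of the support vectors
coef = clf.dual_coef_.ravel()          # y_i * alpha_i for each support vector
multipliers = np.abs(coef)             # Lagrange multipliers, bounded by 0 <= alpha_i <= C

# Dual objective: sum_i alpha_i - 1/2 sum_ij alpha_i alpha_j y_i y_j kappa(x_i, x_j)
dual_objective = multipliers.sum() - 0.5 * coef @ gram(X[sv], X[sv]) @ coef

# Decision function f(x) = sum_i alpha_i y_i kappa(x_i, x) + b, rebuilt by hand
X_test = rng.uniform(0.0, 1.0, size=(5, 2))
f_manual = gram(X_test, X[sv]) @ coef + clf.intercept_[0]
f_sklearn = clf.decision_function(gram(X_test, X))
print(dual_objective, np.allclose(f_manual, f_sklearn))
```

Only the support vectors (points with $\alpha_i > 0$) enter the decision function, which is why the kernel only needs to be evaluated against them at prediction time.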

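SVM Implementation

1. Recall our encoding state (with $\alpha_0 = \beta_0 = \alpha$):

$$|\psi(\mathbf x)\rangle = e^{-|\alpha|^2}\sum_{m,n=0}^{\infty}\frac{\alpha^{m+n}}{\sqrt{m!\,n!}}\,e^{-i\varphi_{m,n}(\mathbf x)}\,|m,n\rangle$$

   The basis must be truncated for feasibility, and the cutoffs on m and n must stay above the mean photon number. Choosing α = 1.0 (mean photon number |α|² = 1 per mode) allows a cutoff of m, n = 10.
2. The data (the original numbers, not the encoded states) and labels go into the SVM (with the Kerr kernel) in the Python package scikit-learn.
3. Repeated with a classical kernel (radial basis function).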
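scikit-learn's `SVC` accepts a callable kernel that returns the Gram matrix between two sets of samples, which lets the raw data points go straight into the SVM while the kernel does the encoding. A vectorised sketch, assuming α = 1.0, m, n ≤ 10, and our reading of the phase $\varphi_{m,n}(\mathbf x) = x_1 m^2 + x_2 n^2 + 2x_1x_2\,mn$; `kerr_gram` and the stand-in labels are hypothetical:

```python
import numpy as np
from math import factorial
from sklearn.svm import SVC

ALPHA, CUTOFF = 1.0, 11              # alpha = 1.0; photon numbers m, n = 0..10
m = np.arange(CUTOFF)[:, None]       # mode-a photon number (rows)
n = np.arange(CUTOFF)[None, :]       # mode-b photon number (columns)
fact = np.array([float(factorial(k)) for k in range(CUTOFF)])
# |alpha|^{2(m+n)} e^{-2|alpha|^2} / (m! n!): product of two Poisson weights
WEIGHTS = np.exp(-2 * ALPHA ** 2) * ALPHA ** (2 * (m + n)) / (fact[:, None] * fact[None, :])


def phases(X):
    """phi_mn(x) = x1 m^2 + x2 n^2 + 2 x1 x2 m n for each row of X, shape (len(X), CUTOFF, CUTOFF)."""
    x1 = X[:, 0][:, None, None]
    x2 = X[:, 1][:, None, None]
    return x1 * m ** 2 + x2 * n ** 2 + 2 * x1 * x2 * m * n


def kerr_gram(A, B):
    """Gram matrix K[i, j] = |sum_mn w_mn exp(-i(phi_mn(A[i]) - phi_mn(B[j])))|^2."""
    left = WEIGHTS * np.exp(-1j * phases(A))
    right = np.exp(1j * phases(B))
    return np.abs(np.einsum("imn,jmn->ij", left, right)) ** 2


rng = np.random.default_rng(3)
X_train = rng.uniform(0.0, 1.0, size=(80, 2))
y_train = np.where(X_train[:, 0] > X_train[:, 1], 1, -1)   # hypothetical stand-in labels
clf = SVC(kernel=kerr_gram).fit(X_train, y_train)
pred = clf.predict(rng.uniform(0.0, 1.0, size=(10, 2)))
print(pred)
```

With |α|² = 1 the Poisson weight beyond n = 10 is about $10^{-8}$ per mode, so the diagonal of the Gram matrix equals 1 to within a few parts in $10^{8}$; this is why a cutoff of 10 is adequate at this amplitude.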