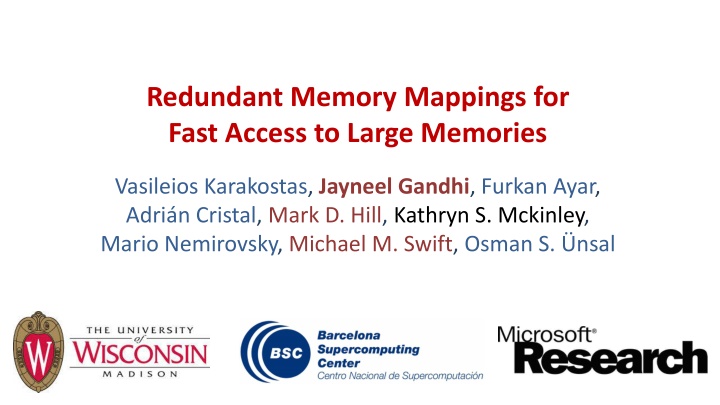

Redundant Memory Mappings for Fast Access to Large Memories

This presentation proposes Redundant Memory Mappings (RMM), which reduce virtual memory overheads from as much as 41% to less than 1%. RMM adds a compact representation, the range translation, for arbitrarily large contiguous mappings. The talk covers how range translations are cached, managed, and facilitated while retaining the flexibility of 4KB paging.


Presentation Transcript

Redundant Memory Mappings for Fast Access to Large Memories. Vasileios Karakostas, Jayneel Gandhi, Furkan Ayar, Adrián Cristal, Mark D. Hill, Kathryn S. McKinley, Mario Nemirovsky, Michael M. Swift, Osman S. Ünsal

Executive Summary. Problem: virtual memory overheads are high (up to 41%). Proposal: Redundant Memory Mappings, built on a compact representation called a range translation, which is an arbitrarily large contiguous mapping. The design effectively caches, manages, and facilitates range translations while retaining the flexibility of 4KB paging. Result: reduces the overheads of virtual memory to less than 1%.

Outline. Motivation: Virtual Memory Refresher + Key Technology Trends, Previous Approaches, Goals + Key Observation. Design: Redundant Memory Mappings. Results. Conclusion.

Virtual Memory Refresher. [Figure: the virtual address spaces of Process 1 and Process 2 map to physical memory through a page table, with a TLB (Translation Lookaside Buffer) caching translations.] Challenge: how to reduce costly page walks?

Two Technology Trends. [Figure: memory capacity for $10,000 (inflation-adjusted 2011 USD, from jcmit.com) by year, 1980 to 2015, on a logarithmic memory-size axis running from MB to TB.] L1 DTLB entries by year and processor: 1999 Pent. III, 72; 2001 Pent. 4, 64; 2008 Nehalem, 96; 2012 IvyBridge, 100; 2015 Broadwell, 100. TLB reach is limited.

0. Page-based Translation. [Figure: a TLB entry maps one virtual page (VPN0) in virtual memory to one physical frame (PFN0) in physical memory.]

1. Multipage Mapping. [Figure: a sub-blocked TLB/CoLT or clustered TLB entry maps a group of virtual pages, VPN(0-3), to physical frames, PFN(0-3), using a bitmap or map.] [ASPLOS '94, MICRO '12 and HPCA '14]

2. Large Pages. [Figure: a large-page TLB entry maps VPN0 to PFN0, covering one large page of virtual and physical memory.] [Transparent Huge Pages and libhugetlbfs]

3. Direct Segments. [Figure: a direct segment, defined by (BASE, LIMIT) in virtual memory, maps to physical memory at OFFSET.] [ISCA '13 and MICRO '14]

Can we get the best of many worlds? [Table comparing Multipage Mapping, Large Pages, Direct Segments, and Our Proposal on five criteria: flexible alignment, arbitrary reach, multiple entries, transparent to applications, applicable to all workloads.]

Key Observation. [Figure: virtual memory and physical memory.]

Key Observation. [Figure: the code, heap, stack, and shared-library regions of virtual memory, and physical memory.] 1. Large contiguous regions of virtual memory. 2. Limited in number: only a handful.

Compact Representation: Range Translation. [Figure: Range Translation 1 covers the virtual memory region from BASE1 to LIMIT1 and maps it to physical memory using OFFSET1.] A range translation is a mapping from contiguous virtual pages to contiguous physical pages with uniform protection.
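
The definition above can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the class name, the field names, the half-open interval [base, limit), and the example addresses are all assumptions. The only parts taken from the slides are the BASE/LIMIT/OFFSET fields, the uniform protection, and the rule that the physical address is the virtual address plus OFFSET.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # 4KB base pages, as in the talk


@dataclass(frozen=True)
class RangeTranslation:
    base: int        # first virtual address covered (page-aligned)
    limit: int       # one past the last covered address (exclusive bound is an assumption)
    offset: int      # physical address minus virtual address; may be negative
    protection: str  # a single protection setting for the whole range

    def covers(self, vaddr: int) -> bool:
        return self.base <= vaddr < self.limit

    def translate(self, vaddr: int) -> int:
        if not self.covers(vaddr):
            raise ValueError("virtual address is outside this range")
        return vaddr + self.offset


# Hypothetical example: 1024 contiguous virtual pages backed by contiguous frames.
rt = RangeTranslation(
    base=0x4000_0000,
    limit=0x4000_0000 + 1024 * PAGE_SIZE,
    offset=0x1000_0000 - 0x4000_0000,
    protection="rw",
)
paddr = rt.translate(0x4000_0000 + 5 * PAGE_SIZE + 12)
```

One entry of this shape stands in for 1024 separate page mappings here, which is the sense in which the representation is compact.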

Redundant Memory Mappings. [Figure: Range Translations 1 through 5 map regions of virtual memory to physical memory.] Map most of a process's virtual address space redundantly with a modest number of range translations in addition to page mappings.

Outline. Motivation. Design: Redundant Memory Mappings (A. Caching Range Translations, B. Managing Range Translations, C. Facilitating Range Translations). Results. Conclusion.

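A. Caching Range Translations. [Figure: translation path from virtual address bits V47...V12 to physical address bits P47...P12: the L1 DTLB, then the L2 DTLB alongside a Range TLB, then the page table walker, which becomes an Enhanced Page Table Walker.]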

A. Caching Range Translations. [Figure: a hit in the L1 DTLB translates V47...V12 to P47...P12 without consulting the L2 DTLB, Range TLB, or Enhanced Page Table Walker.]

A. Caching Range Translations. [Figure: on an L1 DTLB miss, a hit in the L2 DTLB refills the L1 DTLB.]

A. Caching Range Translations. [Figure: on an L1 DTLB miss, a hit in the Range TLB refills the L1 DTLB.]

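A. Caching Range Translations. [Figure: inside the Range TLB, each of Entry 1 through Entry N holds BASE, LIMIT, OFFSET, and Protection, and the virtual address V47...V12 is compared against the BASE and LIMIT of every entry. On a hit after an L1 DTLB miss, the generated entry refills the L1 DTLB and yields P47...P12.] L1 TLB entry generator logic: (Virtual Address + OFFSET), Protection.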
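
The Range TLB hit path described on this slide can be sketched as follows. This is a software illustration under stated assumptions, not the hardware design: the names `RangeEntry`, `L1Entry`, and `range_tlb_lookup` are hypothetical, the bounds check is assumed to be BASE <= address < LIMIT, and the loop stands in for comparators that hardware would evaluate on all entries at once.

```python
from typing import List, NamedTuple, Optional

PAGE_SHIFT = 12  # 4KB pages: address bits 11..0 are the page offset


class RangeEntry(NamedTuple):
    base: int
    limit: int
    offset: int       # assumed page-aligned, so adding it never changes the page offset
    protection: str


class L1Entry(NamedTuple):
    vpn: int          # virtual page number (bits 47..12 of the virtual address)
    pfn: int          # physical frame number (bits 47..12 of the physical address)
    protection: str


def range_tlb_lookup(entries: List[RangeEntry], vaddr: int) -> Optional[L1Entry]:
    """Return a 4KB L1 TLB entry generated from the matching range, or None on a miss."""
    for entry in entries:
        if entry.base <= vaddr < entry.limit:
            paddr = vaddr + entry.offset  # entry generator: Virtual Address + OFFSET
            return L1Entry(vaddr >> PAGE_SHIFT, paddr >> PAGE_SHIFT, entry.protection)
    return None


# Hypothetical contents: one range covering 16 pages.
range_tlb = [RangeEntry(base=0x10000, limit=0x20000, offset=0x100000, protection="r")]
hit = range_tlb_lookup(range_tlb, 0x12345)
miss = range_tlb_lookup(range_tlb, 0x20000)
```

The generated entry is an ordinary page-sized translation, so the L1 DTLB itself does not need to know about ranges.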

A. Caching Range Translations. [Figure: a miss in the L1 DTLB, the L2 DTLB, and the Range TLB invokes the Enhanced Page Table Walker to produce P47...P12.]

B. Managing Range Translations. The Range Table stores all the range translations in an OS-managed structure that is per-process, like the page table. [Figure: a Range Table holding range translations RTA through RTG, reached through CR-RT.]

B. Managing Range Translations. On an L2 + Range TLB miss, which structure should be walked? A) Page Table, B) Range Table, C) Both A) and B), D) Either? Is a virtual page part of a range? That is not known at a miss.

B. Managing Range Translations. Redundancy to the rescue: one bit in the page table entry denotes that the page is part of a range. [Figure: (1) a page table walk from CR-3 finds the entry and inserts it into the L1 TLB; (2) the application resumes its memory access; (3) if the entry is marked as part of a range, a range table walk from CR-RT over RTA through RTG runs in the background and inserts the range into the Range TLB.]
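
The miss-handling flow on this slide can be sketched as below. Everything here is a simplified model under assumptions: the page table is a dictionary from virtual page number to a (frame, part-of-range bit) pair, the range table is a flat list of (base, limit, offset) tuples, and the background walk is modeled as a queue drained later. All function and variable names are hypothetical.

```python
from collections import deque

PAGE_SHIFT = 12
PAGE_MASK = (1 << PAGE_SHIFT) - 1


def handle_tlb_miss(vaddr, page_table, l1_tlb, pending_range_walks):
    """Resolve a miss with a page table walk and defer any range table walk."""
    vpn = vaddr >> PAGE_SHIFT
    pfn, part_of_range = page_table[vpn]   # step 1: page table walk (KeyError models a fault)
    l1_tlb[vpn] = pfn                      # insert the page translation into the L1 TLB
    if part_of_range:                      # the one extra bit in the page table entry
        pending_range_walks.append(vaddr)  # step 3 is queued, off the critical path
    return (pfn << PAGE_SHIFT) | (vaddr & PAGE_MASK)  # step 2: the access can resume


def run_background_walks(pending_range_walks, range_table, range_tlb):
    """Drain deferred walks: find each covering range and insert it into the Range TLB."""
    while pending_range_walks:
        vaddr = pending_range_walks.popleft()
        for base, limit, offset in range_table:
            if base <= vaddr < limit:
                if (base, limit, offset) not in range_tlb:
                    range_tlb.append((base, limit, offset))
                break


# Hypothetical state: page 0x10 belongs to a range, page 0x30 does not.
page_table = {0x10: (0x50, True), 0x30: (0x99, False)}
range_table = [(0x10000, 0x18000, 0x40000)]
l1_tlb, range_tlb, pending = {}, [], deque()

paddr_in_range = handle_tlb_miss(0x10ABC, page_table, l1_tlb, pending)
queued_after_first_miss = len(pending)
paddr_plain = handle_tlb_miss(0x30123, page_table, l1_tlb, pending)
run_background_walks(pending, range_table, range_tlb)
```

Because the page table alone is enough to resume the access, the redundant range table walk adds no latency to the miss in this model.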

C. Facilitating Range Translations. Demand paging: [Figure: virtual memory and physical memory.] Does not facilitate physical page contiguity for range creation.

C. Facilitating Range Translations. Eager paging: [Figure: virtual memory and physical memory.] Allocate physical pages when virtual memory is allocated. This increases range sizes and reduces the number of ranges.
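
A toy model makes the contrast between demand paging and eager paging concrete. It assumes a trivial allocator that hands out the next free frame in order, which is much simpler than a real kernel allocator, and all names and the touch pattern are hypothetical. It counts how many range translations would be needed to describe the resulting mapping.

```python
def count_ranges(mapping):
    """Count maximal runs in which consecutive virtual pages map to consecutive frames."""
    runs = 0
    prev = None
    for vpn in sorted(mapping):
        pfn = mapping[vpn]
        if prev is None or vpn != prev[0] + 1 or pfn != prev[1] + 1:
            runs += 1
        prev = (vpn, pfn)
    return runs


def demand_paging(touch_order):
    """Allocate a frame only when a page is first touched."""
    mapping = {}
    next_free = 0
    for vpn in touch_order:
        if vpn not in mapping:
            mapping[vpn] = next_free
            next_free += 1
    return mapping


def eager_paging(regions):
    """Allocate frames for a whole region when the virtual memory is allocated."""
    mapping = {}
    next_free = 0
    for start_vpn, num_pages in regions:
        for i in range(num_pages):
            mapping[start_vpn + i] = next_free + i
        next_free += num_pages
    return mapping


regions = [(100, 4), (200, 4)]                      # two 4-page virtual regions
touches = [100, 200, 101, 201, 102, 202, 103, 203]  # interleaved first touches

demand_ranges = count_ranges(demand_paging(touches))
eager_ranges = count_ranges(eager_paging(regions))
```

With interleaved first touches, demand paging scatters each region across physical memory and every page becomes its own range, while eager paging yields one range per region.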

Outline. Motivation. Design: Redundant Memory Mappings. Results: Methodology, Performance Results, Virtual Contiguity. Conclusion.

Methodology. Measure the cost of page walks on real hardware: Intel 12-core Sandy Bridge with 96GB memory; 64-entry L1 TLB + 512-entry L2 TLB, 4-way associative, for 4KB pages; 32-entry L1 TLB, 4-way associative, for 2MB pages. Prototype eager paging and an emulator in Linux v3.15.5: BadgerTrap for online analysis of TLB misses and to emulate the Range TLB, plus a linear model to predict performance. Workloads: big-memory workloads, SPEC 2006, BioBench, PARSEC.
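
The TLB sizes listed here imply how much memory the TLBs can map at once. The arithmetic below is a back-of-the-envelope illustration that uses the usual definition of reach (entries times page size); the helper name and constants are assumptions, and it ignores associativity conflicts.

```python
KB, MB, GB = 1 << 10, 1 << 20, 1 << 30


def tlb_reach(entries, page_size):
    """Memory covered when every TLB entry maps a distinct page."""
    return entries * page_size


l1_4kb_reach = tlb_reach(64, 4 * KB)    # 256 KB
l2_4kb_reach = tlb_reach(512, 4 * KB)   # 2 MB
l1_2mb_reach = tlb_reach(32, 2 * MB)    # 64 MB
memory = 96 * GB

# How many times larger the machine's memory is than the L2 TLB's 4KB reach.
memory_to_l2_reach = memory // l2_4kb_reach
```

Even with 2MB pages, the L1 TLB covers 64MB of a 96GB machine, which is the limited TLB reach the talk refers to. The same arithmetic gives the 512x reach of a 2MB page over a 4KB page.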

Comparisons. 4KB: baseline using 4KB paging. THP: Transparent Huge Pages using 2MB paging [Transparent Huge Pages]. CTLB: Clustered TLB with clusters of 8 4KB entries [HPCA '14]. DS: Direct Segments [ISCA '13 and MICRO '14]. RMM: our proposal, Redundant Memory Mappings [ISCA '15].

Performance Results. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr.] Assumptions: results are measured using performance counters or modeled based on the emulator. 5 of 14 workloads are shown; the rest are in the paper. CTLB: 512-entry fully-associative. RMM: 32-entry fully-associative. Both are in parallel with L2.

Performance Results. Overheads of using 4KB pages are very high. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr.]

Performance Results. Clustered TLB works well, but is limited by 8x reach. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr.]

Performance Results. 2MB pages help with 512x reach, but overheads are not very low. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr.]

Performance Results. Direct Segment is perfect for some but not all workloads. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr. Bar labels shown: 0.00%, 0.00%, 0.06%.]

Performance Results. RMM achieves low overheads robustly across all workloads. [Figure: bar chart of execution time overhead (0% to 45%) for 4KB, CTLB, THP, DS, and RMM on cactusADM, canneal, graph500, mcf, and tigr. Bar labels shown: 1.06%, 0.25%, 0.00%, 0.40%, 0.00%, 0.14%, 0.06%, 0.26%.]

Why low overheads? Virtual Contiguity

| Benchmark | Paging: 4KB + 2MB THP | Ideal RMM ranges: # of ranges | Ideal RMM ranges: # of ranges to cover more than 99% of memory |
| --- | --- | --- | --- |
| cactusADM | 1365 + 333 | 112 | 49 |
| canneal | 10016 + 359 | 77 | 4 |
| graph500 | 8983 + 35725 | 86 | 3 |
| mcf | 1737 + 839 | 55 | 1 |
| tigr | 28299 + 235 | 16 | 3 |

Paging requires 1000s of TLB entries. RMM needs only 10s-100s of ranges per application, and only a few ranges for 99% coverage.
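
The last column of the table counts how few ranges are needed to cover more than 99% of a process's memory. One plausible way to compute such a number, given the sizes of a process's ranges, is to take the largest ranges first. The sketch below assumes that greedy approach; the function name and the range sizes are made up for illustration and are not taken from the benchmarks.

```python
def ranges_for_coverage(range_sizes, target=0.99):
    """Smallest number of ranges, taken largest first, covering more than target of memory."""
    total = sum(range_sizes)
    covered = 0
    for count, size in enumerate(sorted(range_sizes, reverse=True), start=1):
        covered += size
        if covered > target * total:
            return count
    return len(range_sizes)


# Hypothetical process: three large ranges plus fifty small ones (sizes in pages).
sizes = [900_000, 80_000, 15_000] + [100] * 50
needed = ranges_for_coverage(sizes)
```

In this made-up example 53 ranges exist, but the three largest already cover more than 99% of the mapped pages, which mirrors the pattern in the table.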

Summary. Problem: virtual memory overheads are high. Proposal: Redundant Memory Mappings, built on a compact representation called a range translation, which is an arbitrarily large contiguous mapping. The design effectively caches, manages, and facilitates range translations while retaining the flexibility of 4KB paging. Result: reduces the overheads of virtual memory to less than 1%.

Questions?