Regression Modeling in Statistics and Data Analysis

Explore regression modeling in statistics with Professor William Greene, covering linear regression models, least squares results, predictor variables, and more to assess the relationship between variables.

Presentation Transcript

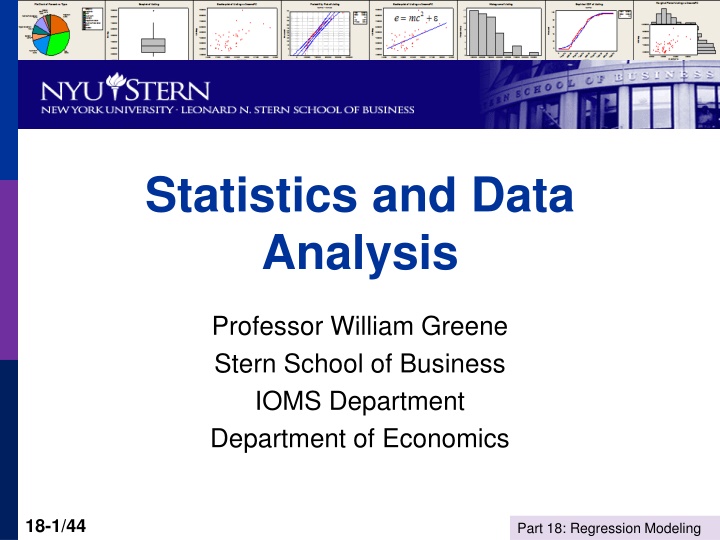

Statistics and Data Analysis Professor William Greene Stern School of Business IOMS Department Department of Economics 18-1/44 Part 18: Regression Modeling

Statistics and Data Analysis Part 18 Regression Modeling 18-2/44 Part 18: Regression Modeling

Linear Regression Models. Least squares results: regression model, sample statistics, estimates of population parameters. How good is the model? In the abstract; statistical measures of model fit. Assessing the validity of the relationship. 18-3/44 Part 18: Regression Modeling

Regression Model: Regression relationship: $y_i = \alpha + \beta x_i + \varepsilon_i$. Random $\varepsilon_i$ implies random $y_i$. Observed random $y_i$ has two unobserved components. Explained: $\alpha + \beta x_i$. Unexplained: $\varepsilon_i$. The random component $\varepsilon_i$ has zero mean, standard deviation $\sigma$, and a normal distribution. 18-4/44 Part 18: Regression Modeling

Linear Regression: Model Assumption 18-5/44 Part 18: Regression Modeling

Least Squares Results 18-6/44 Part 18: Regression Modeling

Using the Regression Model: Prediction: use $x_i$ as information to predict $y_i$. The natural predictor is the mean, $\bar{y}$; $x_i$ provides more information. With $x_i$, the predictor is $\hat{y}_i = a + bx_i$. 18-7/44 Part 18: Regression Modeling
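The slide contrasts two predictors: the sample mean and the fitted line a + b x. A minimal Python sketch of that comparison follows. It assumes the textbook least squares formulas for a and b (the transcript does not spell them out), and the rooms and fuel bill values are hypothetical, not data from the slides.

```python
from statistics import mean

def least_squares(x, y):
    """Return (a, b) for the fitted line y-hat = a + b*x (textbook formulas)."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx              # slope
    a = y_bar - b * x_bar      # intercept: the line passes through (x-bar, y-bar)
    return a, b

# Hypothetical sample (not from the slides): number of rooms and fuel bill.
rooms = [3, 4, 5, 6, 7, 8]
bills = [250, 330, 480, 560, 700, 800]

a, b = least_squares(rooms, bills)
naive_prediction = mean(bills)       # ignores x entirely
line_prediction = a + b * 7          # uses x = 7 rooms as information

print(f"a = {a:.2f}, b = {b:.2f}")
print(f"mean predictor: {naive_prediction:.1f}, line predictor at 7 rooms: {line_prediction:.1f}")
```

The mean gives the same prediction for every home, while the line shifts the prediction up or down according to the number of rooms.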

Regression Fits [Figure: two scatterplots with fitted lines. Scatterplot of FUELBILL vs ROOMS: regression of fuel bill vs. number of rooms for a sample of homes. Scatterplot of SALARY vs YEARS: regression of salary vs. years of experience.] 18-8/44 Part 18: Regression Modeling

Regression Arithmetic: $y_i = \hat{y}_i + e_i$ = prediction + error. $y_i - \bar{y} = \hat{y}_i - \bar{y} + e_i$. A few algebra steps later... $\sum_{i=1}^{N} (y_i - \bar{y})^2 = \sum_{i=1}^{N} (\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{N} e_i^2$. TOTAL = Regression (LARGE??) + Residual (small??). This is the analysis of (the) variance (of y); ANOVA. 18-9/44 Part 18: Regression Modeling

Analysis of Variance: $\sum_{i=1}^{N} (y_i - \bar{y})^2 = \sum_{i=1}^{N} (\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{N} e_i^2$. TOTAL = Regression + Residual. 18-10/44 Part 18: Regression Modeling

Fit of the Model to the Data: The original question about the model fit to the data: $\sum_{i=1}^{N} (y_i - \bar{y})^2 = \sum_{i=1}^{N} (\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{N} e_i^2$. TOTAL = Regression (LARGE??) + Residual (small??). Dividing through by TOTAL SS: $\frac{\text{TOTAL SS}}{\text{TOTAL SS}} = \frac{\text{Regression SS}}{\text{TOTAL SS}} + \frac{\text{Residual SS}}{\text{TOTAL SS}}$, that is, 1 = Proportion Explained + Proportion Unexplained. 18-11/44 Part 18: Regression Modeling
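The decomposition TOTAL = Regression + Residual can be checked numerically. The sketch below fits a least squares line to a small hypothetical sample (the numbers are made up for illustration) and computes the three sums of squares and the two proportions.

```python
from statistics import mean

# Hypothetical sample, not from the slides.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

x_bar, y_bar = mean(x), mean(y)
b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sum((xi - x_bar) ** 2 for xi in x)
a = y_bar - b * x_bar

fitted = [a + b * xi for xi in x]                     # y-hat_i
residuals = [yi - fi for yi, fi in zip(y, fitted)]    # e_i

total_ss = sum((yi - y_bar) ** 2 for yi in y)
regression_ss = sum((fi - y_bar) ** 2 for fi in fitted)
residual_ss = sum(e ** 2 for e in residuals)

proportion_explained = regression_ss / total_ss
proportion_unexplained = residual_ss / total_ss

print(f"TOTAL = {total_ss:.4f}, Regression + Residual = {regression_ss + residual_ss:.4f}")
print(f"explained = {proportion_explained:.4f}, unexplained = {proportion_unexplained:.4f}")
```

The identity holds exactly (up to floating point) only because a and b are the least squares estimates; with any other line the cross term does not vanish.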

Explained Variation: The proportion of variation explained by the regression is called R-squared ($R^2$). It is also called the Coefficient of Determination. 18-12/44 Part 18: Regression Modeling

Movie Madness Fit R2 18-13/44 Part 18: Regression Modeling

Regression Fits [Figure: fitted regression plots. Scatterplot of Cost vs Output: R2 = 0.924. Regression of Foreign Box Office on Domestic: Overseas = 6.693 + 1.051 Domestic; S = 73.0041, R-Sq = 52.2%, R-Sq(adj) = 52.1%; R2 = 0.522. Fitted Line Plot: G = 1.928 + 0.000179 Income; S = 0.370241, R-Sq = 88.0%, R-Sq(adj) = 87.8%; R2 = 0.880. A further label reads R2 = 0.424.] 18-14/44 Part 18: Regression Modeling

R2 is still positive even if the correlation is negative. R2 = 0.338 18-15/44 Part 18: Regression Modeling

R Squared Benchmarks: Aggregate time series: expect .9+. Cross sections: .5 is good; sometimes we do much better. Large survey data sets: .2 is not bad. [Figure: scatterplot of Cost vs Output.] R2 = 0.924 in this cross section. 18-16/44 Part 18: Regression Modeling

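Correlation Coefficient: $r_{xy} = \text{Correlation}(x,y) = \frac{\text{Sample Cov}[x,y]}{[\text{Sample standard deviation}(x)]\,[\text{Sample standard deviation}(y)]} = \frac{\frac{1}{N-1}\sum_{i=1}^{N}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\frac{1}{N-1}\sum_{i=1}^{N}(x_i - \bar{x})^2}\,\sqrt{\frac{1}{N-1}\sum_{i=1}^{N}(y_i - \bar{y})^2}}$, with $-1 \le r_{xy} \le 1$. 18-17/44 Part 18: Regression Modeling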

Correlations [Figure: three plots. Scatterplot of Overseas vs Domestic: rxy = 0.723. Scatterplot of C5 vs C6: rxy = +1.000. Fitted Line Plot: GDPC = 19826 - 34508 GINI; S = 6574.43, R-Sq = 16.2%, R-Sq(adj) = 15.8%; rxy = -.402.] 18-18/44 Part 18: Regression Modeling

R-Squared is $r_{xy}^2$: R-squared is the square of the correlation between $y_i$ and the predicted $y_i$, which is $a + bx_i$. The correlation between $y_i$ and $(a + bx_i)$ is the same, up to sign, as the correlation between $y_i$ and $x_i$. Therefore, $R^2 = r_{xy}^2$. A regression with a high R2 predicts $y_i$ well. 18-19/44 Part 18: Regression Modeling
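The claim that R-squared equals the squared correlation between x and y can be verified directly. The sketch below uses a hypothetical sample with a negative relationship (values invented for illustration), so it also shows R-squared staying positive when the correlation is negative.

```python
from math import sqrt
from statistics import mean

# Hypothetical data with a negative relationship (not from the slides).
x = [1, 2, 3, 4, 5, 6]
y = [9.8, 8.1, 6.9, 4.2, 3.1, 0.9]

x_bar, y_bar = mean(x), mean(y)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
sxx = sum((xi - x_bar) ** 2 for xi in x)
syy = sum((yi - y_bar) ** 2 for yi in y)   # this is also the TOTAL sum of squares

# Sample correlation; the 1/(N-1) factors cancel between numerator and denominator.
r_xy = sxy / sqrt(sxx * syy)

# Least squares fit, then R-squared = Regression SS / TOTAL SS.
b = sxy / sxx
a = y_bar - b * x_bar
regression_ss = sum((a + b * xi - y_bar) ** 2 for xi in x)
r_squared = regression_ss / syy

print(f"r_xy = {r_xy:.4f}, r_xy squared = {r_xy ** 2:.4f}, R-squared = {r_squared:.4f}")
```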

Adjusted R-Squared: As we will discover when we study regression with more than one variable, a researcher can increase R2 just by adding variables to a model, even if those variables do not really explain y or have any real relationship at all. To have a fit measure that accounts for this, Adjusted R2 is a number that increases with the correlation, but decreases with the number of variables: $\bar{R}^2 = 1 - \frac{N-1}{N-K-1}\,(1 - R^2)$. K is the number of "x" variables in the equation. 18-20/44 Part 18: Regression Modeling

Movie Madness Fit $\bar{R}^2$ 18-21/44 Part 18: Regression Modeling

Notes About Adjusted R2: (1) Adjusted $R^2$ is denoted $\bar{R}^2$. $\bar{R}^2$ is less than $R^2$. (2) $\bar{R}^2$ is not the square of $\bar{R}$. It is not the square of anything. Adjusted R squared is just a name, not a formula. (3) Adjusting $R^2$ makes no sense when there is only one variable in the model. You should pay no attention to $\bar{R}^2$ when K = 1. (4) $\bar{R}^2$ can be less than zero! See point (2). 18-22/44 Part 18: Regression Modeling
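A short sketch of the adjusted R-squared formula, 1 - (N-1)/(N-K-1) × (1 - R²), may make notes (1) and (4) concrete. The first call uses the R-Sq value and sample size (N = 62, from Total DF = 61) printed in the Internet Buzz regression output later in this transcript; the second uses hypothetical values chosen only to produce a negative result. The function name is an illustrative choice.

```python
def adjusted_r_squared(r_squared, n, k):
    """Adjusted R-squared for n observations and k explanatory variables."""
    return 1 - (n - 1) / (n - k - 1) * (1 - r_squared)

# Internet Buzz regression: R-Sq = 42.4%, N = 62, K = 1.
buzz_adjusted = adjusted_r_squared(0.424, n=62, k=1)

# Hypothetical weak fit in a tiny sample: the adjusted value drops below zero.
weak_adjusted = adjusted_r_squared(0.01, n=10, k=1)

print(f"Buzz adjusted R-squared: {buzz_adjusted:.3f}")
print(f"Weak-fit adjusted R-squared: {weak_adjusted:.3f}")
```

The first value rounds to 41.4%, matching the R-Sq(adj) figure in that output.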

Is R2 Large? Is there really a relationship between x and y? We cannot be 100% certain. We can be statistically certain (within limits) by examining R2. F is used for this purpose. 18-23/44 Part 18: Regression Modeling

The F Ratio: The original question about the model fit to the data: $\sum_{i=1}^{N} (y_i - \bar{y})^2 = \sum_{i=1}^{N} (\hat{y}_i - \bar{y})^2 + \sum_{i=1}^{N} e_i^2$. TOTAL = Regression (LARGE??) + Residual (small??). We would like the Regression SS to be large and the Residual SS to be small. $F = \frac{(N-2)\,\text{Regression SS}}{\text{Residual SS}} = \frac{(N-2)\sum_{i=1}^{N} (\hat{y}_i - \bar{y})^2}{\sum_{i=1}^{N} e_i^2} = \frac{(N-2)R^2}{1 - R^2}$. 18-24/44 Part 18: Regression Modeling

Is R2 Large? Since $F = (N-2)R^2/(1 - R^2)$, if $R^2$ is large, then F will be large. For a model with one explanatory variable in it, the standard benchmark value for a large F is 4. 18-25/44 Part 18: Regression Modeling

Movie Madness Fit R2 F 18-26/44 Part 18: Regression Modeling

Why Use F and not R2? When is R2 large? We have no benchmarks to decide. How large is large? We have a table for F statistics to determine when F is statistically large: yes or no. 18-27/44 Part 18: Regression Modeling

F Table n2 is N-2 The critical value depends on the number of observations. If F is larger than the appropriate value in the table, conclude that there is a statistically significant relationship. There is a huge F table on pages 732-742 of your text. Analysts now use computer programs, not tables like this, to find the critical values of F for their model/data. 18-28/44 Part 18: Regression Modeling

Internet Buzz Regression (n2 is N-2)

Regression Analysis: BoxOffice versus Buzz

The regression equation is BoxOffice = - 14.4 + 72.7 Buzz

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -14.360 | 5.546 | -2.59 | 0.012 |
| Buzz | 72.72 | 10.94 | 6.65 | 0.000 |

S = 13.3863 R-Sq = 42.4% R-Sq(adj) = 41.4%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 7913.6 | 7913.6 | 44.16 | 0.000 |
| Residual Error | 60 | 10751.5 | 179.2 | | |
| Total | 61 | 18665.1 | | | |

18-29/44 Part 18: Regression Modeling
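The F statistic in this output can be reproduced from the sums of squares, using either form of the F ratio: (N-2) × Regression SS / Residual SS, or (N-2)R²/(1-R²). The sketch below takes the SS values from the Analysis of Variance table and N = 62 from Total DF = 61; the variable names are illustrative.

```python
# Values read from the Internet Buzz Analysis of Variance table.
n = 62                    # Total DF is 61, so N = 62
regression_ss = 7913.6
residual_ss = 10751.5
total_ss = regression_ss + residual_ss

r_squared = regression_ss / total_ss

# Two equivalent ways of computing F for a one-variable model.
f_from_ss = (n - 2) * regression_ss / residual_ss
f_from_r2 = (n - 2) * r_squared / (1 - r_squared)

print(f"R-squared = {r_squared:.3f}")
print(f"F from sums of squares = {f_from_ss:.2f}, F from R-squared = {f_from_r2:.2f}")
print("F exceeds the benchmark of 4:", f_from_ss > 4)
```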

$135 Million Klimt, to Ronald Lauder http://www.nytimes.com/2006/06/19/arts/design/19klim.html?ex=1308369600&en=37eb32381038a749&ei=5088&partner=rssnyt&emc=rss 18-30/44 Part 18: Regression Modeling

$100 Million sort of Stephen Wynn with a Prized Possession, 2007 18-31/44 Part 18: Regression Modeling

An Enduring Art Mystery: Why do larger paintings command higher prices? Graphics show relative sizes of the two works: The Persistence of Memory, Salvador Dali, 1931, and The Persistence of Statistics, Hildebrand, Ott and Gray, 2005. 18-32/44 Part 18: Regression Modeling

18-33/44 Part 18: Regression Modeling

Previously sold for exp(16.6374) = $16.8M 18-34/44 Part 18: Regression Modeling

Monet in Large and Small: Sale prices of 328 signed Monet paintings. Log of $price = a + b log surface area + e. [Figure: Fitted Line Plot of ln (US$) against ln (SurfaceArea): ln (US$) = 2.825 + 1.725 ln (SurfaceArea); S = 1.00645, R-Sq = 20.0%, R-Sq(adj) = 19.8%.] The residuals do not show any obvious patterns that seem inconsistent with the assumptions of the model. 18-35/44 Part 18: Regression Modeling

The Data [Figure: histogram of ln (US$) and histogram of ln (SurfaceArea), each with Frequency on the vertical axis.] Note: Using logs in this context. This is common when analyzing financial measurements (e.g., price) and when percentage changes are more interesting than unit changes. (E.g., what is the % premium when the painting is 10% larger?) 18-36/44 Part 18: Regression Modeling
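The question in the note can be answered from the fitted log-log equation on the preceding slide, ln (US$) = 2.825 + 1.725 ln (SurfaceArea). In a log-log model, scaling the area by a factor c scales the predicted price by c raised to the power b. The sketch below applies that to a 10% larger painting; the function name is an illustrative choice, and the result describes the fitted line only, not any individual painting.

```python
def price_premium(area_increase, elasticity):
    """Proportional change in predicted price for a proportional change in area,
    under ln(price) = a + elasticity * ln(area)."""
    return (1 + area_increase) ** elasticity - 1

b = 1.725   # slope of the fitted Monet regression

premium = price_premium(0.10, b)
print(f"A painting 10% larger has a predicted price about {premium:.1%} higher")
```

Because b is greater than 1, the predicted percentage premium is larger than the percentage increase in area.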

Monet Regression: There seems to be a regression. Is there a theory? 18-37/44 Part 18: Regression Modeling

Conclusions about F: R2 answers the question of how well the model fits the data. F answers the question of whether there is a statistically valid fit (as opposed to no fit). What remains is the question of whether there is a valid relationship, i.e., whether $\beta$ is different from zero. 18-38/44 Part 18: Regression Modeling

The Regression Slope: The model is $y_i = \alpha + \beta x_i + \varepsilon_i$. The relationship depends on $\beta$. If $\beta$ equals zero, there is no relationship. The least squares slope, b, is the estimate of $\beta$ based on the sample. It is a statistic based on a random sample. We cannot be sure it equals the true $\beta$. To accommodate this view, we form a range of uncertainty around b, i.e., a confidence interval. 18-39/44 Part 18: Regression Modeling

Uncertainty About the Regression Slope: Hypothetical Regression, Fuel Bill vs. Number of Rooms

The regression equation is Fuel Bill = -252 + 136 Number of Rooms

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -251.9 | 44.88 | -5.20 | 0.000 |
| Rooms | 136.2 | 7.09 | 19.9 | 0.000 |

S = 144.456 R-Sq = 72.2% R-Sq(adj) = 72.0%

The Coef for Rooms, 136.2, is b, the estimate of $\beta$. Its Standard Error (SE), 7.09, is the measure of uncertainty about the true value. The range of uncertainty is $b \pm 2\,SE(b)$. (Actually 1.96, but people use 2.) 18-40/44 Part 18: Regression Modeling

Internet Buzz Regression: Range of Uncertainty for b is 72.72 - 1.96(10.94) to 72.72 + 1.96(10.94) = [51.27 to 94.17]

Regression Analysis: BoxOffice versus Buzz

The regression equation is BoxOffice = - 14.4 + 72.7 Buzz

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -14.360 | 5.546 | -2.59 | 0.012 |
| Buzz | 72.72 | 10.94 | 6.65 | 0.000 |

S = 13.3863 R-Sq = 42.4% R-Sq(adj) = 41.4%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 7913.6 | 7913.6 | 44.16 | 0.000 |
| Residual Error | 60 | 10751.5 | 179.2 | | |
| Total | 61 | 18665.1 | | | |

18-41/44 Part 18: Regression Modeling

Elasticity in the Monet Regression: b = 1.7246. This is the elasticity of price with respect to area. The confidence interval would be 1.7246 ± 1.96(.1908) = [1.3506 to 2.0986]. The fact that this does not include 1.0 is an important result: prices for Monet paintings are extremely elastic with respect to the area. 18-42/44 Part 18: Regression Modeling
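The range of uncertainty, b ± 1.96 SE(b), is simple enough to compute by hand, but a small helper makes the two examples easy to compare. The sketch below uses the slope and standard error printed for the Internet Buzz regression (72.72 and 10.94) and for the Monet regression (1.7246 and .1908); the function names are illustrative choices.

```python
def slope_interval(b, se, multiplier=1.96):
    """Range of uncertainty for a slope: b minus/plus multiplier * SE(b)."""
    half_width = multiplier * se
    return b - half_width, b + half_width

def includes(interval, value):
    """True if value lies inside the interval."""
    low, high = interval
    return low <= value <= high

buzz = slope_interval(72.72, 10.94)
monet = slope_interval(1.7246, 0.1908)

print(f"Buzz slope:  [{buzz[0]:.2f}, {buzz[1]:.2f}], includes 0: {includes(buzz, 0.0)}")
print(f"Monet slope: [{monet[0]:.4f}, {monet[1]:.4f}], includes 1: {includes(monet, 1.0)}")
```

With the rounded inputs shown on the slides, the Buzz endpoints agree with the reported interval to within 0.01, and the Monet endpoints match to four decimals.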

Conclusion about b: So, should we conclude the slope is not zero? Does the range of uncertainty include zero? No: then you should conclude the slope is not zero. Yes: then you can't be very sure that $\beta$ is not zero. Tying it together: if the range of uncertainty does not include 0.0, then the ratio b/SE is larger than 2. The square of the ratio is larger than 4. The square of the ratio is F. F larger than 4 gave the same conclusion. They are looking at the same thing. 18-43/44 Part 18: Regression Modeling
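The link between the ratio b/SE and F can be checked with the Internet Buzz output, where b = 72.72, SE = 10.94, and the reported F is 44.16. The sketch below squares the ratio and compares it with F; the small gap comes from using the rounded coefficient and standard error as printed.

```python
b = 72.72          # Buzz coefficient from the regression output
se = 10.94         # its standard error
reported_f = 44.16 # F from the Analysis of Variance table

t_ratio = b / se
t_squared = t_ratio ** 2

print(f"b/SE = {t_ratio:.2f}, squared = {t_squared:.2f}, reported F = {reported_f}")
print("ratio larger than 2:", abs(t_ratio) > 2)
print("square larger than 4:", t_squared > 4)
```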

Summary: The regression model theory. Least squares results: a, b, s, R2. The fit of the regression model to the data. ANOVA and R2. The F statistic and R2. Uncertainty about the regression slope. 18-44/44 Part 18: Regression Modeling