Reinforcement Learning in Markov Decision Processes

Explore the concepts of Markov Decision Processes (MDP) in Reinforcement Learning (RL), including the Bellman equation, optimal value function, optimal policy, and state transitions. Dive into examples and illustrations to grasp core RL principles effectively.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

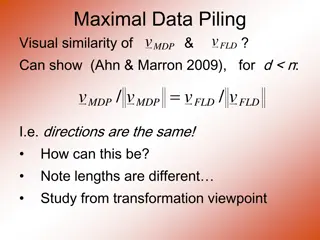

Markov Decision Process Hongning Wang CS@UVA

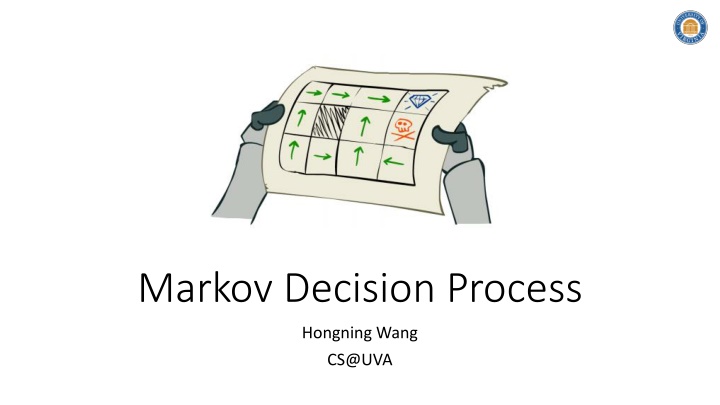

Outline Bellman equation Optimal value function and optimal policy Extensions of MDP CS@UVA RL2022-Fall 2

From the basics of RL With respect to history How did we reach the current observation ?1,?1,?1,?2,?2,?2, ,??,?? History Why do we care about history? In case this has happened before Generalize from history State a function of history ??= ? ?1,?1,?1,?2,?2,?2, ,?? = ?(?? 1,?? 1) Assume Markovian states CS@UVA RL2022-Fall 3

A typical illustration State in breakout game State transition CS@UVA RL2022-Fall 4

A toy example of MDP State transition ? ??+1= ? ??= ? ,??= 1 = 1.0 ? ??+1= ? ??= ? ,??= 2 = 0.2 CS@UVA RL2022-Fall 5

A typical illustration Expected reward for state-action pair CS@UVA RL2022-Fall 6

A toy example of MDP Expected reward for state-action pair ?(??= ? ,??= 2) = 2.0 CS@UVA RL2022-Fall 7

Agent vs., environment Depends on the definition of action sets, i.e., the problem we are looking at Where to draw the boundary? User interface is part of agent or environment in a recommender system? Motor is part of agent or environment in a self-driving car? CS@UVA RL2022-Fall 8

Bellman equation for value function ???? 1 ? Value function ???? = ?? ?=? Compute it in a recursive way Can we compute a bound of it? ?? ??? ?+1 Unique solution of this equation w.r.t a particular policy ? CS@UVA RL2022-Fall 9

Bellman equation for value function Action-value function ????,?? = ?? ?? ?=? Compute it in a recursive way ?? ??? ?+1 ? ?? ? ?,? ? ?? ? ? ??(? ,? ) ???,? = ? + ? CS@UVA RL2022-Fall 10

The lecture will start at 9:30am EDT Sli.do event code: 3851721

Recap: Bellman equation for value function ???? 1 ? Value function ???? = ?? ?=? Compute it in a recursive way Can we compute a bound of it? ?? ??? ?+1 Unique solution of this equation w.r.t a particular policy ? CS@UVA RL2022-Fall 12

Bellman equation for value function Gridworld example (?=0.9) Q: how do we get the values at the first place? A: solving a system of equations with |?| variables and |?| equations 0.25 2.3 + 0.7 0.4 + 0.4 0.9 = 0.675 A B ? = 0.25 [ 1.2 0.9 1.4 0.9 + 1 + 0.9? 2] CS@UVA RL2022-Fall 13

Bellman equation for value function A closer look with a matrix form + ?? ? ?,? ? ??(? ) ??? = ? ?,? ? ??= ??+ ????? ?? Complexity: ?( ?3) (? ????? )??= ?? State occupancy matrix ??= ? ????? 1?? ?? = ? ?? 1 ??= ? |?1= ?,? ?? ?=1 Proof of the solution s existence and uniqueness? Each row depicts the discounted probability of visiting state ? starting from state ? CS@UVA RL2022-Fall 14

Bellman equation for value function A closer look with a matrix form For any non-zero vector ? ?|?| ? ????? ? = ? ????? ? ? ? ???? ? ? ? ? > 0 ? is invertible if for any non-zero vector ?, ?? > 0 CS@UVA RL2022-Fall 15

Optimal policy How about Bellman s equation? Value function defines partial ordering of policies ? ? if and only if ??? ?? (?) for ? ? Finding optimal policy is easy when knowing the optimal value function ???? ? ? If we increase the reward of all state action pairs by a constant c, how would it affect an agent s behavior? RL2022-Fall 17.8 = 19.8 0.9 16.0 = 17.8 0.9 0.5 2 CS@UVA 16

Bellman equation for optimal value function It still holds (and of course!), but in a special form Optimal action-value function A.k.a Bellman optimality equation Obtained by optimal policy: optimal value is achieved by the best action under each state CS@UVA RL2022-Fall 17

Bellman equation for optimal action-value function It is also in a similar and special form No closed form solution! Q: How do we really find it? A: Solving a set of system equations, one equation for each state-action pair! Requirements: 1) Known environment dynamics 2) Markovian states 3) Abundant computational power Otherwise, we will approximate it! CS@UVA RL2022-Fall 18

Extensions of MDP Partially Observable Markov Decision Process (POMDP) Markov process vs., hidden Markov process? Now the agent needs to infer the posterior of states based on history, the so- called belief state Observations: ??= ?(??= ?|??= ?,??= ?) CS@UVA RL2022-Fall 19

Extensions of MDP Fixed horizon MDP Predefined length of interactions Continuous state/action space Make it functional, e.g., a linear quadratic model Continuous time Partial differential equations Ergodic MDP The underlying Markov process is ergodic Stationary distribution exists, which makes the calculation of value function easy CS@UVA RL2022-Fall 20

Takeaways Markovian states lead to Bellman expectation equation and optimality equation for MDPs Both of them result in a system of equations which has a unique solution CS@UVA RL2022-Fall 21

Suggested readings Chapter 3, Finite Markov Decision Processes CS@UVA RL2022-Fall 22