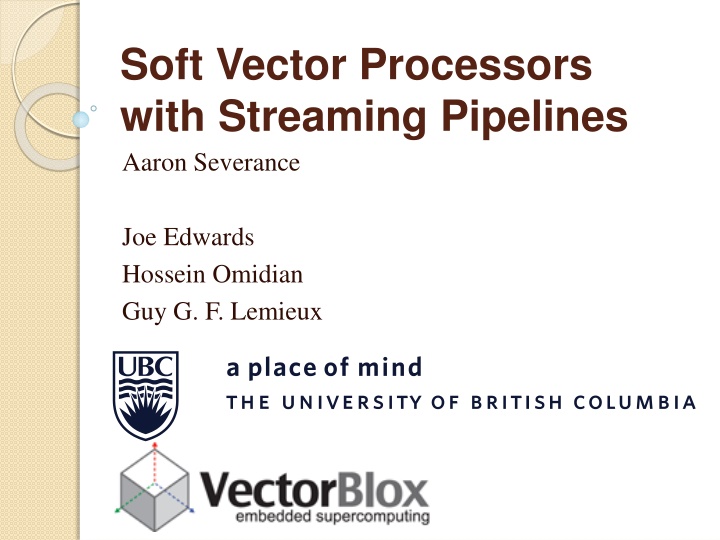

Soft Vector Processors and Streaming Pipelines in Action

"Explore the implementation of soft vector processors with streaming pipelines, addressing data parallel problems on FPGAs, overlays, processors, and examples like the N-Body Problem. Dive into custom vector instructions, VectorBlox MXP, CVI complications, and heterogeneous lanes for efficient processing."


Presentation Transcript

Soft Vector Processors with Streaming Pipelines. Aaron Severance, Joe Edwards, Hossein Omidian, Guy G. F. Lemieux

Motivation: Data parallel problems on FPGAs. ESL? Overlays? Processors?

Example: N-Body Problem. O(N^2) force calculation: streaming pipeline (custom vector instruction). O(N) housekeeping: overlay (soft vector processor). O(1) control: processor (ARM or soft-core).

Soft Vector Processor (SVP). [Block diagram of the VectorBlox MXP Matrix Processor. Labeled blocks: Scalar Processor with I$ and D$, Vector Instructions, ALU A, ALU B, Custom Instr. A, Custom Instr. B, Scratchpad, DMA, SoC Fabric with M and S ports, DDR2/DDR3. Labeled signals: SrcA, SrcB, Dst, InA, InB, Out.]

VectorBlox MXP: 1 to 128 parallel vector lanes (4 shown).

Custom Vector Instructions (CVIs). Simple CVI: parallel scalar custom instructions (CIs). Example: a difference-squared CVI within lanes. [Diagram: four lanes; lane i takes A_i and B_i through SUB and x² blocks to produce C_i, with write enable wr_i. Other labeled signals: clock, start, valid, opsize, opcode.]
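The "parallel scalar CIs" idea can be sketched in software as one scalar operation replicated across lanes. This is only an illustrative model, not the MXP hardware interface: `NUM_LANES`, `diff_squared`, and `cvi_diff_squared` are hypothetical names, and unsigned 32-bit wraparound stands in for whatever word size `opsize` selects.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_LANES 4  /* assumption: matches the four lanes drawn on the slide */

/* One lane's scalar custom instruction: (a - b)^2, modulo 2^32. */
static uint32_t diff_squared(uint32_t a, uint32_t b) {
    uint32_t d = a - b;  /* wraps like a 32-bit hardware subtractor */
    return d * d;
}

/* Apply the CVI to a whole vector, NUM_LANES elements per beat.
   Returns the number of lane-wide beats that were needed. */
static size_t cvi_diff_squared(const uint32_t *a, const uint32_t *b,
                               uint32_t *c, size_t n) {
    assert(a != NULL && b != NULL && c != NULL);
    size_t beats = 0;
    for (size_t base = 0; base < n; base += NUM_LANES) {
        /* Each lane handles one element; lanes past the end stay idle. */
        for (size_t lane = 0; lane < NUM_LANES && base + lane < n; lane++) {
            c[base + lane] = diff_squared(a[base + lane], b[base + lane]);
        }
        beats++;
    }
    return beats;
}
```

In this model a five-element vector takes two beats on four lanes, with three lanes idle in the second beat.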

CVI Complications (1): CVIs can be big, e.g. square root, floating point; bigger than the entire integer ALU. Make them cheaper: don't replicate for every lane; reuse existing alignment networks; no additional costs, buffering.

CVI Complications (2): CVIs can be deep, e.g. FP addition; depth >> MXP pipeline depth. Execute stage is 3 cycles, stall-free. CVI pipeline must warm up: don't write back until valid data appears. Best if vector length >> CVI depth.
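The warm-up behaviour can be illustrated with a small cycle-level model: a valid bit travels down the pipeline alongside the data, and writeback happens only when a valid bit reaches the output. This is a sketch under simplifying assumptions (one beat issued per cycle, no stalls); `simulate_cvi`, `cvi_run_t`, and `MAX_DEPTH` are hypothetical names, not part of the MXP toolchain.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_DEPTH 128  /* assumed upper bound on modelled pipeline depth */

typedef struct {
    unsigned cycles;      /* cycles until the last writeback */
    unsigned writebacks;  /* lane-wide beats written back */
} cvi_run_t;

/* Model a CVI pipeline of `depth` stages fed one beat per cycle. */
static cvi_run_t simulate_cvi(unsigned vector_length, unsigned lanes,
                              unsigned depth) {
    assert(lanes > 0 && depth > 0 && depth <= MAX_DEPTH);
    bool valid[MAX_DEPTH] = { false };  /* valid bit per pipeline stage */
    unsigned beats = (vector_length + lanes - 1) / lanes;
    unsigned issued = 0;
    cvi_run_t run = { 0, 0 };

    while (run.writebacks < beats) {
        bool out_valid = valid[depth - 1];  /* what leaves the pipeline now */
        for (unsigned s = depth - 1; s > 0; s--) {
            valid[s] = valid[s - 1];        /* advance every stage */
        }
        valid[0] = issued < beats;          /* issue a new beat if any remain */
        if (valid[0]) {
            issued++;
        }
        if (out_valid) {
            run.writebacks++;               /* write back only valid data */
        }
        run.cycles++;
    }
    return run;
}
```

In this model the total is `depth + beats` cycles. With 16 lanes and a depth of 64, a 64-element vector needs 4 beats but 68 cycles, while a 4096-element vector needs 256 beats in 320 cycles, which is why long vectors amortize the warm-up.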

Multiple Operand CVIs. 2D N-body problem: 3 inputs, 2 outputs. [Pipeline diagram: streaming vector data in (x_j, y_j, m_j), scalar data in (x_i, y_i, Gm_i), data out (dF_x, dF_y). Internal labels include dx, dy, sqrt, and 1.0/r, with pipeline stages numbered up to 74.]
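For reference, the arithmetic this pipeline performs can be written out from the C listing on the "Synthesis From C" slide, which computes the same quantities step by step. The symbol names follow the diagram labels; treating Gm_i as the product of the gravitational constant and the reference mass is an assumption based on the `ref_gm` variable in that listing.

```latex
\begin{aligned}
dx &= x_i - x_j, \qquad dy = y_i - y_j, \\
r  &= \sqrt{dx^2 + dy^2}, \\
dF_x &= \frac{(G m_i)\, m_j}{r^3}\, dx, \qquad
dF_y = \frac{(G m_i)\, m_j}{r^3}\, dy .
\end{aligned}
```

The three streaming inputs are x_j, y_j, and m_j; the scalars x_i, y_i, and Gm_i stay fixed for a whole vector; the two outputs are dF_x and dF_y.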

4 Input, 2 Output CVI, Option 1: Spatially Interleaved. Easy for interleaved (Array-of-Struct) data, but vector data is normally contiguous (SoA).
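The layout mismatch behind Option 1 can be made concrete with a small sketch. With contiguous (SoA) vectors, the four operands of one element live in four separate arrays, so something has to shuffle them side by side before a spatially interleaved CVI can consume them. The `operands_t` record and `soa_to_aos` function below are hypothetical and only illustrate that reshuffling cost.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical operand record for a 4-input CVI (one array-of-structs element). */
typedef struct {
    int32_t a, b, c, d;
} operands_t;

/* Gather four contiguous (SoA) vectors into one interleaved (AoS) stream. */
static void soa_to_aos(const int32_t *a, const int32_t *b,
                       const int32_t *c, const int32_t *d,
                       operands_t *out, size_t n) {
    assert(a != NULL && b != NULL && c != NULL && d != NULL && out != NULL);
    for (size_t i = 0; i < n; i++) {
        out[i].a = a[i];
        out[i].b = b[i];
        out[i].c = c[i];
        out[i].d = d[i];
    }
}
```

Every element is copied once per operand, which is the extra data movement that makes Option 1 unattractive when the data starts out contiguous.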

4 Input, 2 Output CVI, Option 2: Time Interleaved. Alternate operands every cycle; data is valid every 2 cycles.

4 Input, 2 Output CVI, Option 2 with Funnel Adapters. Multiplex 2 CVI lanes to one pipeline. Use existing 2D/3D instructions to dispatch.
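One plausible reading of time interleaving with funnel adapters is sketched below: because each lane delivers a complete 4-operand set only every second cycle, two lanes can share a single pipeline, which then accepts one element per cycle. The model abstracts away operand latching and pipeline depth; `LANES`, `pipeline_op`, and `run_funnelled` are hypothetical, and `pipeline_op` is a placeholder rather than the real N-body datapath.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LANES 4                /* assumed lane count; must be even */
#define PIPELINES (LANES / 2)  /* funnel: two lanes share one pipeline */

typedef struct {
    int32_t out0, out1;
} result_t;

/* Placeholder 4-input, 2-output operation (assumes no overflow). */
static result_t pipeline_op(int32_t a, int32_t b, int32_t c, int32_t d) {
    result_t r = { a + b, c - d };
    return r;
}

/* Process n elements; returns the number of issue cycles in this model. */
static size_t run_funnelled(const int32_t *a, const int32_t *b,
                            const int32_t *c, const int32_t *d,
                            result_t *out, size_t n) {
    assert(a != NULL && b != NULL && c != NULL && d != NULL && out != NULL);
    size_t cycles = 0;
    for (size_t base = 0; base < n; base += LANES) {
        /* A group of LANES elements occupies two cycles. In each of them,
           pipeline p serves one of its two lanes: lane 2p, then lane 2p + 1. */
        for (size_t phase = 0; phase < 2; phase++) {
            for (size_t p = 0; p < PIPELINES; p++) {
                size_t i = base + 2 * p + phase;
                if (i < n) {
                    out[i] = pipeline_op(a[i], b[i], c[i], d[i]);
                }
            }
            cycles++;
        }
    }
    return cycles;
}
```

The design point this illustrates is that the funnel halves the number of pipeline copies without lowering throughput, since a lane could not supply a full operand set more often than every two cycles anyway.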

Building CVIs. [Same N-body pipeline diagram as on the "Multiple Operand CVIs" slide.] We created CVIs via 3 methods: (1) RTL; (2) Altera's DSP Builder; (3) synthesis from C (custom LLVM solution).

Altera's DSP Builder: fixed- or floating-point pipelines; automatic pipelining given target; adapters provided to the MXP CVI interface.

Synthesis From C (using LLVM). CVI templates provided. Restricted C subset to Verilog. Can run on scalar core for easy debugging.

    #include <stdint.h>

    #define CVI_LANES 8  /* number of physical lanes */

    /* The f16_* helpers and F16() are not shown on the slide. */
    typedef int32_t f16_t;

    f16_t ref_px, ref_py, ref_gm;                       /* scalar inputs */
    f16_t px[CVI_LANES], py[CVI_LANES], m[CVI_LANES];   /* streaming inputs */
    f16_t result_x[CVI_LANES], result_y[CVI_LANES];     /* accumulated outputs */

    void force_calc(void) {
        for( int glane = 0 ; glane < CVI_LANES ; glane++ ) {
            f16_t gmm = f16_mul( ref_gm, m[glane] );
            f16_t dx  = f16_sub( ref_px, px[glane] );
            f16_t dy  = f16_sub( ref_py, py[glane] );
            f16_t dx2 = f16_mul(dx,dx);
            f16_t dy2 = f16_mul(dy,dy);
            f16_t r2  = f16_add(dx2,dy2);
            f16_t r   = f16_sqrt(r2);
            f16_t rr  = f16_div(F16(1.0),r);       /* 1/r */
            f16_t gmm_rr  = f16_mul(rr,gmm);
            f16_t gmm_rr2 = f16_mul(rr,gmm_rr);
            f16_t gmm_rr3 = f16_mul(rr,gmm_rr2);   /* G*mi*mj / r^3 */
            f16_t dfx = f16_mul(dx,gmm_rr3);
            f16_t dfy = f16_mul(dy,gmm_rr3);
            result_x[glane] = f16_add(result_x[glane],dfx);
            result_y[glane] = f16_add(result_y[glane],dfy);
        }
    }
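The slide does not show the `f16_*` helpers or the `F16()` macro. Since `f16_t` is a typedef of `int32_t`, one guess is a signed Q16.16 fixed-point format; the sketch below implements the helpers under that assumption so the loop body can be run on an ordinary scalar core. The format and every implementation detail here are assumptions, not the authors' code.

```c
#include <assert.h>
#include <stdint.h>

typedef int32_t f16_t;  /* assumed: signed Q16.16 fixed point */

/* Convert a floating-point constant to Q16.16, rounding to nearest. */
#define F16(x) ((f16_t)((x) * 65536.0 + ((x) >= 0 ? 0.5 : -0.5)))

/* Overflow is not handled in this sketch; operands are assumed small. */
static f16_t f16_add(f16_t a, f16_t b) { return a + b; }
static f16_t f16_sub(f16_t a, f16_t b) { return a - b; }

/* Multiply in 64 bits, then drop the extra 16 fractional bits. */
static f16_t f16_mul(f16_t a, f16_t b) {
    return (f16_t)(((int64_t)a * b) / 65536);
}

/* Pre-scale the dividend so the quotient keeps 16 fractional bits. */
static f16_t f16_div(f16_t a, f16_t b) {
    assert(b != 0);
    return (f16_t)(((int64_t)a * 65536) / b);
}

/* Bitwise integer square root of (a << 16), which is sqrt(a) in Q16.16. */
static f16_t f16_sqrt(f16_t a) {
    assert(a >= 0);
    uint64_t n = (uint64_t)a << 16;
    uint64_t root = 0;
    uint64_t bit = (uint64_t)1 << 46;  /* largest power of four below 2^47 */
    while (bit > n) {
        bit >>= 2;
    }
    while (bit != 0) {
        if (n >= root + bit) {
            n -= root + bit;
            root = (root >> 1) + bit;
        } else {
            root >>= 1;
        }
        bit >>= 2;
    }
    return (f16_t)root;
}
```

With helpers like these, the same source file can be compiled and stepped through on a scalar core before synthesis, which appears to be the debugging flow the slide has in mind.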

Performance/Area. V32, 16 physical pipelines.

| SVP Configuration | Speedup/ALM relative to Nios II/f |
|---|---|
| MXP | 1.1 |
| MXP + DIV/SQRT | 19.7 |
| MXP + N-Body (floating-point) | 68.7 |
| MXP + N-Body (fixed-point) | 116.0 |

Conclusions: CVIs can incorporate streaming pipelines. The SVP handles control and light data processing; deep pipelines exploit FPGA strengths. Efficient, lightweight interfaces, including multiple input & output operands. Multiple ways to build and integrate.

Thank You