Software and Computing



Presentation Transcript

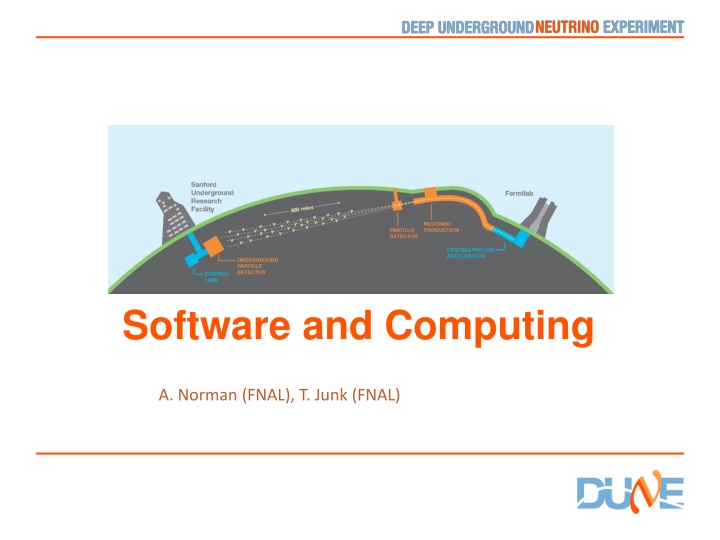

Software and Computing A. Norman (FNAL), T. Junk (FNAL)

Major Milestones
Q4/2016: Revivification of Interface Committee (complete)
Q4/2016: Restructure DUNE computing (complete) (Dec 2016)
Q1/2017: DUNE Offline Software Tutorials (complete)
Q1/2017: MCC 8 Generation (complete)
Q1/2017: SCPMT Request @FNAL (complete)
Q1/2017: First data transfers at scale, CERN to FNAL (complete)
Q1/2017: First data transfers at scale, FNAL to CERN (complete)
Q1/2017: End-to-end data transfer from EHN1 (delayed; est. Q2/2017)
Q2/2017: Establish hardware database (complete)
Q2/2017: Populate S&C working groups with personnel (in progress)
Q3/2017: MCC 9 Generation (on track/early)
Q2/2017: Offline Software Spring Tutorials (with video) (complete)
6/21/17 A. Norman | DUNE 2

Major Milestones
Q3/2017: Establish calib./cond. DBs (FNAL/CERN) (pending DB effort)
Q3/2017: Integrate DAQ access libraries for data unpack (in progress)
Q3/2017: Offline Software Summer Tutorials/Workshop (planned)
Q3/2017: MCC 10 Generation (in planning)
Q1/2017: Establish beam inst. DB replication for offline merge (in planning)
Q2/2018: Freeze production/reco workflow
Q3/2018: Store ProtoDUNE-SP/DP raw dataset @FNAL/CERN
Q4/2018: ProtoDUNE Offline Reco/Analysis Campaign

Milestone Notes
Initial implementation of the DAQ pack/unpack chain allows simulation files to be fed back to the processing chain (i.e. convert reco hits back to raw digits). This is an Artdaq/Art feature similar to the model used by NOvA and MicroBooNE. It does not require a full implementation of the hardware data format (only an analog of the raw digit is needed).
Key milestones are dependent on external groups' deliverables, e.g. the DAQ unpacking libraries required for data processing workflows.
Initial enumerations of databases have been made and hosting plans developed. The details still require effort.
Leveraging the experience of personnel on NA61 & NA62 to handle the beam instrumentation database. Most of the work is at CERN within PD. The baseline plan is to replicate/dump to DBs hosted at FNAL and use existing DB interface modules in art/LarSoft.
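A minimal sketch of the pack/unpack round trip described above, assuming a made-up byte layout: a header holding a channel number and a sample count, followed by 16-bit ADC samples. This is not the ProtoDUNE hardware format or the artdaq fragment API, and `pack_rawdigit` / `unpack_rawdigit` are hypothetical names. It only shows why a raw-digit analog is enough: simulated samples can be packed, pushed through the unpacking stage, and checked for a lossless round trip before the real DAQ libraries are delivered.

```python
import struct

# Hypothetical fragment layout (NOT the ProtoDUNE hardware format):
# little-endian uint32 channel id, uint32 sample count, then uint16 ADC samples.
HEADER = struct.Struct("<II")


def pack_rawdigit(channel: int, adcs: list[int]) -> bytes:
    """Pack one channel's ADC samples into a byte fragment."""
    header = HEADER.pack(channel, len(adcs))
    body = struct.pack(f"<{len(adcs)}H", *adcs)
    return header + body


def unpack_rawdigit(fragment: bytes) -> tuple[int, list[int]]:
    """Recover (channel, ADC samples) from a fragment built by pack_rawdigit."""
    channel, n_samples = HEADER.unpack_from(fragment, 0)
    adcs = list(struct.unpack_from(f"<{n_samples}H", fragment, HEADER.size))
    return channel, adcs


if __name__ == "__main__":
    # Simulated samples for one channel, fed through the pack/unpack chain.
    samples = [2048, 2050, 2101, 2300, 2120, 2049]
    fragment = pack_rawdigit(42, samples)
    assert unpack_rawdigit(fragment) == (42, samples)
    print(len(fragment))  # 8-byte header + 6 samples * 2 bytes = 20
```

Keeping the layout this simple is the design point: only the unpack interface has to match the eventual DAQ library, not the full hardware format.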

Response to LBNC Recommendations
From March 2017 Review: Ramp up the Fermilab (SCD or elsewhere) scientific computing effort for protoDUNE/TDR as proposed, with the reminder that these efforts are 18 months out.
Response: This is not a recommendation that the computing coordination team can address directly, because the coordination team has no authority over personnel allocations made outside the DUNE collaboration structure at national laboratories or universities.
However, the DUNE computing coordination team agrees that increasing the human capital directed towards scientific computing is valuable and has the potential to advance both the DUNE TDR and ProtoDUNE efforts. The computing coordination team has worked with the FNAL Scientific Computing Division senior management to understand the current allocations of scientific staff towards the DUNE project. Based on this information, SCD senior management is committed to redirecting the technical activities of the scientific personnel currently working on DUNE/ProtoDUNE towards scientific projects on DUNE/ProtoDUNE. This planning and reallocation of effort will start during June as part of the budget and tactical planning for FY18. The computing coordination team will be involved in this process and will monitor its progress.
In conjunction with the reallocation of FNAL SCD efforts, the computing coordination team is aggressively pursuing new sources of human capital outside of the laboratory. The team has been in contact with the P.I.s of multiple university groups looking to join DUNE during the summer/fall of CY17 who have experience in neutrino or LHC computing. The coordination team is also working with the DUNE collaboration and management to encourage additional participation from within the collaboration in the computing activities.
For the first check-in, we would like to have the information on assigned FTEs from the FNAL SCD to better understand the scope of work that is currently being undertaken.

Response in detail
From March 2017 Review: Ramp up the Fermilab (SCD or elsewhere) scientific computing effort for protoDUNE/TDR as proposed, with the reminder that these efforts are 18 months out.
Response: The DUNE computing coordination team agrees that increasing the human capital directed towards scientific computing is valuable and has the potential to advance both the DUNE TDR and ProtoDUNE efforts.
Translation: Yes, of course we want more people!
The current allocations of personnel have been made from SCD:
Group: Co-Leads
Central Production: Anna Mazzacane (FNAL-SCD), TBD (University)
Data Management: Steve Timm (FNAL-SCD), TBD (University)
Software Mgt.: Tingjun Yang (FNAL-ND), TBD
Databases (ProtoDUNE): Jon Paley (FNAL-ND), TBD
Collaborative Tools: Eileen Berman, TBD

Response in detail
From March 2017 Review: Ramp up the Fermilab (SCD or elsewhere) scientific computing effort for protoDUNE/TDR as proposed, with the reminder that these efforts are 18 months out.
Response: The computing coordination team has worked with the FNAL Scientific Computing Division senior management to understand the current allocations of scientific staff towards the DUNE project.
Estimate: 11.87 FTE of effort (technical and scientific) across FNAL-SCD through both direct and indirect allocations (i.e. service model). Approximately 2.33 FTE are classed as scientific (postdocs, associate scientists and higher) and are currently budgeted directly to DUNE or LarSoft (for comparison, NOvA: 2.1 FTE).
Based on this information, SCD senior management is committed to redirecting the technical activities of the scientific personnel currently working on DUNE/ProtoDUNE towards scientific projects on DUNE/ProtoDUNE. This planning and reallocation of effort will start during June as part of the budget and tactical planning for FY18. The computing coordination team will be involved in this process and will monitor its progress.
Rebalance scientific personnel towards more scientific projects on DUNE/PD (compared to technical projects). Tactical planning and budgeting for FY18 will reflect this. A. Norman is involved directly in tactical planning from both sides.

Response in detail
From March 2017 Review: Ramp up the Fermilab (SCD or elsewhere) scientific computing effort for protoDUNE/TDR as proposed, with the reminder that these efforts are 18 months out.
Response: In conjunction with the reallocation of FNAL SCD efforts, the computing coordination team is aggressively pursuing new sources of human capital outside of the laboratory. The team has been in contact with the P.I.s of multiple university groups looking to join DUNE during the summer/fall of CY17 who have experience in neutrino or LHC computing. The coordination team is also working with the DUNE collaboration and management to encourage additional participation from within the collaboration in the computing activities.
Translation: There are a number of university groups who are looking to join DUNE, or have added junior faculty, that have not yet been scooped up by other groups. In particular:
There are two university groups with neutrino experience (NOvA) which have been approached directly. (We are actively trying to poach them. One is a definite no until September or later.)
There are also key people from Lariat who have been approached (specifically for databases).
Outside of neutrinos, there is one group looking to transition from CMS. (We are actively trying to poach them. There may be base grant issues.)

Follow up on earlier LBNC Recs.
From January check-in meeting: ProtoDUNE SP & DP computing plans, can some work be in common?
In progress: Last time, draft version 8.5 of the computing plan was made available. Since then the plan has been passed to PD-SP and PD-DP. PD-SP broke out specific sections into separate deliverables documents to mirror the structure of what the computing plan was trying to achieve. In addition, an executive summary document was generated covering the higher-level computing plan and interactions, policies and planning.
Currently the following documents are nearing final form:
DUNE Computing Plan, In Support of ProtoDUNE Program (Executive Summary) (https://goo.gl/ks4fbS)
ProtoDUNE-SP Software and Computing Plan (version 10.4) (https://goo.gl/vzt9Qz)
Data Reconstruction and Analysis Scope & Deliverables (DRA) (DUNE DocDB 3450)
ProtoDUNE SP DAQ Overview (DUNE DocDB 3450) (https://goo.gl/XZHmKL)
Computing Deliverables Timelines (https://goo.gl/cXZsaQ)

Risk Updates
Evaluated in the context of work being done for the PDs. Primary risks are still schedule, not technical.
Leading ProtoDUNE Risks (impact to data taking):
Personnel/effort availability (elevated)
Budget for storage media in '18 (data taking) and additional allocation of media for analysis in '19 [FNAL/CERN]
Detector noise & compression ratios
Data inflation rates are higher than predicted (elevated)
Data reduction proposal (reduced risk): modified plan can be part of mainstream data processing
Reco/Analysis memory footprint in LarSoft (> 2/4 GB)
CERN/FNAL interfaces and interactions (reduced risk)
Availability of Tier 0 compute resources at CERN (reduced risk)
Availability of grid compute resources in USA (reduced risk)
Computing Risks for ProtoDUNE continue to be tracked and

Leading Risks with Mitigation
CERN/FNAL interfaces and interactions (reduced): revivification of the interface group; CERN IT technical meetings; successful demonstrator projects (CERN-FNAL).
Personnel/effort availability (elevated): engaging the EOI process (DUNE) and tactical planning/budgeting with FNAL-SCD to ramp and align personnel with estimated needs. Little to no interest from the collaboration.
Budget for storage media in '18 (data taking) and additional allocation of media for analysis in '19 [FNAL & CERN]: engaged with CERN to monitor and assess storage and data retention; both FNAL/CERN on equal [technical] parity w.r.t. DUNE; engaged in DoE planning for compute/storage; engaged in process with FNAL to plan for the '19 request; engaging with the ProtoDUNEs to refine estimates of analysis flows and their effects on storage.
Detector noise & compression ratios (active work toward risk mitigation): reviewing current requests by ProtoDUNE and performing worst-case planning with CERN. This will allow reallocation of resources based on triggers (i.e. noise rates higher than expected).

Leading Risks with Mitigation
Data inflation rates greater than expected (> 2x): data reduction proposal by SP; MCC9 providing a measurement of the supporting MC inflation under the current workflow. Workflows were presented at the May collaboration meeting, and computing plans are being examined to formalize the checkpoints in the flows.
Data reduction proposal (reduced): the presentation (and discussion) at the DRA workshop made clear that modified data reduction can be performed early in standard data processing. Minor impact on resources now.
Availability of Tier 0 compute resources at CERN (reduced): formal commitment of 1500 Tier 0 slots for DUNE/ProtoDUNE for the next 5 years.
Active work toward risk mitigation
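Inflation and noise-driven compression ratios compound multiplicatively, which a back-of-the-envelope storage estimate makes visible. The definitions below are assumptions for illustration (the inflation factor is taken as total stored volume over compressed raw volume), not the ProtoDUNE planning model, and the 1000 TB input is hypothetical.

```python
def stored_volume_tb(raw_uncompressed_tb: float,
                     compression_ratio: float,
                     inflation_factor: float) -> float:
    """Back-of-the-envelope storage estimate in TB.

    Assumed definitions (illustrative only):
      compression_ratio = uncompressed raw size / compressed raw size
      inflation_factor  = total stored volume (raw + derived) / compressed raw size
    """
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    if inflation_factor < 1:
        raise ValueError("inflation_factor must be at least 1")
    compressed_raw_tb = raw_uncompressed_tb / compression_ratio
    return compressed_raw_tb * inflation_factor


# Hypothetical inputs: 1000 TB of uncompressed raw data.
print(stored_volume_tb(1000, 4, 2))  # 500.0
print(stored_volume_tb(1000, 4, 4))  # 1000.0 (inflation doubles)
print(stored_volume_tb(1000, 2, 2))  # 1000.0 (noisier data compresses less)
```

Under these assumptions, doubling the inflation factor and halving the compression ratio have the same effect on the storage request, which is why both appear among the leading risks.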

ProtoDUNE Computing Flow
Reminder: hierarchical flow of data out of the detector pit.
Passes through Tier 0.
Archival copies of data at CERN & FNAL.
Active copies at CERN & FNAL for data processing.
Common interface to computing at both sites: want users/analysis to work seamlessly at both labs.
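The replica layout in this flow can be sketched as a small data structure: every raw file leaving Tier 0 gets an archival and an active copy at both CERN and FNAL, and one common lookup returns the active copy at whichever lab the user works from. The site names come from the slide; the path scheme, file name, and function names are hypothetical.

```python
from dataclasses import dataclass

SITES = ("CERN", "FNAL")          # from the slide
ROLES = ("archival", "active")    # archival copy + active copy for processing


@dataclass(frozen=True)
class Replica:
    site: str
    role: str
    path: str  # hypothetical path scheme, not a real storage endpoint


def plan_replicas(raw_file: str) -> list[Replica]:
    """Replicas a raw file should have once it has passed through Tier 0."""
    return [
        Replica(site, role, f"{site.lower()}/{role}/{raw_file}")
        for site in SITES
        for role in ROLES
    ]


def active_copy(raw_file: str, user_site: str) -> Replica:
    """Common lookup: return the active copy at the lab the user works from."""
    for replica in plan_replicas(raw_file):
        if replica.site == user_site and replica.role == "active":
            return replica
    raise KeyError(f"no active copy at {user_site!r}")


print(active_copy("run001.root", "FNAL").path)  # fnal/active/run001.root
```

Because the lookup takes the user's site as a parameter, analysis code written against it runs unchanged at either lab.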

Interface Committee Updates
Addressing mainly communication lines and resource allocations. Many positive outcomes:
Meeting regularly (bi-weekly)
Improved handling of CERN accounts for DUNE members
Establishment of a dedicated IT for ProtoDUNE meeting (run by Xavier Espinal)
Tier 0 resources and access
Networking across CERN and FNAL boundaries
Database support models
Ensuring both SP and DP needs are represented
Technical experts have been actively invited to participate on an individual basis, depending on the meeting topic (e.g. database experts from FNAL/CERN for discussion of databases).
[Figure: S&C organization chart (meetings are biweekly): S&C Coordinator; Fermilab/CERN/DUNE Interface Committee; Software Management; Central Production; Collaborative Tools; Data Management; Databases]

Software Management revamp (happening now)
Identified a major issue with interdependencies between duneTPC, LarSoft and non-DUNE experiments.
Continues in lock step with LarSoft and will follow the LarSoft build system.
Affects the continuous integration environment; mainly impacts anyone working on duneTPC code.
Trying to rationalize the build system & failure notification schemes.
Placing code development @CERN on parity with FNAL:
All CERN platforms currently have access to official releases via CVMFS.
Adding development support through build nodes at CERN.
[Figure: dependency diagram with labels duneTPC, uBooNE, Lariat, LarSoft, "Interface Boundary", "No Cross Exp. Deps"]

Data Management
Baseline applications complete and integrated with CERN infrastructure.
Focusing on data movement between CERN and FNAL:
F-FTS (deployed), FTS-Light (ready for deployment in EHN1)
F-FTS/SAM have EOS support via xroot
Most of the CERN/FNAL storage and data management interfaces/auth worked out.
Tools are in production use for MCC9 and provide automated simulation distribution to CERN storage. This will continue for the fall data challenges.
Major focus is testing bandwidth between sites. There are issues with asymmetric routing out of CERN: non-CMS storage needs to be included within the LHCONE network (in progress).

Bandwidth Rate tests (CERN to FNAL)
Testing CERN EOS --> Fermilab dCache transfers.
Tests are run outside of the automated F-FTS environment to determine scaling behavior.
Running 10/20/40 simultaneous transfer processes.
The test copies a representative 5 GB file from EOS to dCache repeatedly.
Observed 6 Gbit/s (20 processes) to 8 Gbit/s (40 processes).
[Plots: 20 files, 2 hours; 20 files, 1 hour; 40 files, 1 hour]
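The observed aggregate rates translate into file counts with simple arithmetic. This sketch assumes the test file is 5 GB in decimal units (1 GB = 8 Gbit) and ignores per-transfer startup overhead; the helper names are illustrative.

```python
BITS_PER_BYTE = 8  # decimal units: 1 GB = 8 Gbit


def aggregate_gbps(n_copies: int, file_size_gb: float, elapsed_s: float) -> float:
    """Aggregate rate when n_copies of one file finish within elapsed_s seconds."""
    return n_copies * file_size_gb * BITS_PER_BYTE / elapsed_s


def files_per_hour(rate_gbps: float, file_size_gb: float) -> float:
    """How many copies of the test file a sustained aggregate rate moves per hour."""
    return rate_gbps * 3600 / (file_size_gb * BITS_PER_BYTE)


print(files_per_hour(6, 5))  # 540.0  (20 processes)
print(files_per_hour(8, 5))  # 720.0  (40 processes)
```

At 8 Gbit/s the aggregate corresponds to 720 copies of the 5 GB file (3.6 TB) per hour, and at 6 Gbit/s to 540 copies (2.7 TB), so doubling the process count from 20 to 40 buys roughly a third more throughput rather than twice as much.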

Central Production
MCC9 was advanced from fall to summer to work out the transition to a centralized model.
Focused on the MCC8 and MCC9 production campaigns.
Established a formal DUNE Request Portal for tracking MC and other production requests: a full ticketing system with a customized DUNE interface (went live June 21). It allows coordination across groups (i.e. software, data mgt., production).
MCC9 campaigns run by a combination of A. Mazzacane and ProtoDUNE-SP people (Dorota in particular).
Need more actual people to babysit these activities. Frustration expressed by both sides at the initial effort needed to migrate to/use the tool suites. The work is boring. Actively seeking participation of university groups that may have students who can man production shifts.
Many snags, most self-inflicted (i.e. not vetting sim jobs, filling up disk space with cruft). Competition for resources [DUNE on DUNE competition] (but avoids the Sinclair Submission phenomenon).
But: huge success in actually running and getting resources at scale.

MCC9 Resources
DUNE/ProtoDUNE software is now portable. Monte Carlo campaigns run against Open Science Grid sites.

CERN Batch Integration
MCC9 demonstrated routing of jobs to CERN Tier 0.
The issue is copy-out of data and asymmetric routing. Temporary fix: skip the automatic copy-back stages and land data on EOS. This will be fixed with LHCONE integration.
Production monitoring is being used to debug the CERN integration.

MCC9 Jobs by Site

Databases
Extensive experience from NOvA; J. Paley heading up efforts relating to PD.
Initial enumeration of needed DBs by PD-SP (no surprises).
Instrumentation DBs from CERN: may steal from NA62 for access methods. Plans for replication being developed.
Hardware database in place (N. Buchanan (Colorado State) and FNAL database group).
Still needs expanded involvement to address the other databases needed for ProtoDUNE. This presents a risk to ProtoDUNE efforts if understaffed (or ignored until late in the ProtoDUNE timelines).
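The replicate/dump approach planned for the CERN instrumentation DBs can be illustrated with Python's built-in `sqlite3` module, whose `Connection.backup` copies a whole database into another connection. SQLite here only stands in for the real CERN-hosted and FNAL-hosted database servers. The `beam_inst` table, its columns, and the values are hypothetical.

```python
import sqlite3


def replicate(source: sqlite3.Connection, replica: sqlite3.Connection) -> None:
    """Dump-and-replace replication: copy the entire source DB into the replica."""
    source.backup(replica)


if __name__ == "__main__":
    # Stand-in for the CERN-hosted beam instrumentation DB (hypothetical schema).
    cern = sqlite3.connect(":memory:")
    cern.execute("CREATE TABLE beam_inst (run INTEGER, spill INTEGER, momentum_gev REAL)")
    cern.executemany("INSERT INTO beam_inst VALUES (?, ?, ?)",
                     [(1, 1, 7.0), (1, 2, 7.0), (2, 1, 3.0)])
    cern.commit()

    # Stand-in for the FNAL-hosted replica read by offline jobs.
    fnal = sqlite3.connect(":memory:")
    replicate(cern, fnal)
    print(fnal.execute("SELECT COUNT(*) FROM beam_inst").fetchone()[0])  # 3
```

A full dump is the simplest scheme to reason about; an incremental scheme (e.g. copying only new runs) would reduce transfer volume at the cost of tracking what the replica already holds.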

Collaborative Tools
Service portal is live (https://goo.gl/1eGAub).
Collaboration DB is under development.
Reorg of documentation and jump points from DUNE pages.

DUNE Portal Project
Modeled after the LPC portal.
Allows requests directed to DUNE S&C to be routed/tracked. Based on the need for tracking/ticketing of requests and interaction between computing groups (i.e. production, software release, data management).
Allows for a common interface to access resources (i.e. DUNE/ProtoDUNE), e.g. Monte Carlo sample generation requests and data staging requests.
General interface for DUNE collaborators to get help and make requests. Does not rely on the FNAL account scheme.
Initial version in production; expanded version in early Q3.
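The routing idea behind the portal (each request is ticketed and handed to the S&C group responsible for it) can be sketched as a lookup table plus a ticket record. The request types, the group mapping, and the field names are hypothetical and do not describe the actual portal, which is a full ticketing system.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical routing table: request type -> S&C group that owns it.
ROUTES = {
    "mc_generation": "Central Production",
    "data_staging": "Data Management",
    "software_release": "Software Management",
}

_ticket_ids = count(1)


@dataclass
class Ticket:
    kind: str
    requester: str      # free-form identity; no FNAL account required
    summary: str
    group: str
    status: str = "open"
    ticket_id: int = field(default_factory=lambda: next(_ticket_ids))


def submit(kind: str, requester: str, summary: str) -> Ticket:
    """Open a ticket and route it to the responsible group."""
    try:
        group = ROUTES[kind]
    except KeyError:
        raise ValueError(f"unknown request type: {kind!r}") from None
    return Ticket(kind, requester, summary, group)


t = submit("mc_generation", "a.collaborator", "MCC sample request")
print(t.ticket_id, t.group, t.status)  # 1 Central Production open
```

Keeping the routing table separate from the ticket record means a new request type only needs a new table entry, not a change to how tickets are tracked.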

Follow up on Resource Requests in FY17/18/19
Presented resource requests to FNAL based on:
Current usage models, stated data event sizes, trigger rates, and run durations provided by ProtoDUNE-SP and ProtoDUNE-DP
Current event processing times (as of Jan '17)
Then came May and the numbers from MCC9:
Event processing times (measured directly from MCC9) were 48 min/evt, driven by the use of a new deep learning technique. This means a 2-4x increase in processing if it persists.
Also re-evaluated storage needs based on the PD-SP and DP numbers. Reconciled most numbers from SP/DP in terms of triggers & evts to analyze. DP stands out with a 2x factor that wasn't previously accounted for in data processing (increases the processing needed).
Inflation factors being re-evaluated in terms of a realistic processing chain and checkpoints.
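The impact of the measured 48 min/evt can be estimated with simple arithmetic. This sketch assumes a hypothetical sample of one million events, one event per slot at a time, and no scheduling overhead; the 1500-slot figure is the Tier 0 commitment quoted in the risk mitigation slides, and the 12 min/evt case is a hypothetical 4x faster reconstruction.

```python
def cpu_hours(n_events: int, minutes_per_event: float) -> float:
    """Total single-core CPU time for one processing pass."""
    return n_events * minutes_per_event / 60


def wall_days(n_events: int, minutes_per_event: float, slots: int) -> float:
    """Wall-clock days if every slot processes one event at a time, no overhead."""
    return cpu_hours(n_events, minutes_per_event) / slots / 24


n_events = 1_000_000  # hypothetical sample size
for minutes in (48, 12):  # measured MCC9 time vs. a hypothetical 4x faster reco
    print(minutes, cpu_hours(n_events, minutes), round(wall_days(n_events, minutes, 1500), 1))
# 48 800000.0 22.2
# 12 200000.0 5.6
```

For one million events at 48 min/evt this gives 800,000 CPU-hours, or about 22 days of wall time on 1500 slots per processing pass, which is why the reco time drives the CPU request.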

Follow up on Resource Requests in FY17/18/19
Basing assumptions on other neutrino experiments' experience. It is still hard to see how anything less than a 2x factor can be achieved with the current processing. Tradeoffs of time vs. storage.

Long Range Planning
Recent exercise in planning for 2017+: all IF experiments evaluated, including DUNE and PD-SP/DP.
Very conservative assumptions on evt size & processing.
Initial data inflation dominates storage needs.
Realistically, analysis code doesn't get faster; it gets more complicated.
[Figure: ProtoDUNE-SP long range planning exercise]

Resource Bottom Line
Most likely scenario is a higher storage footprint and higher CPU usage for new analysis techniques. Affects both SP and DP due to LarSoft commonality (i.e. evt reco through a deep CNN is just going to be slow; if one experiment does it, the other is sure to follow).
Pushes a need to identify additional resources. Need to examine alternate data reduction schemes and schemes to sparsify the events.
Still on track for data taking: deficits won't be realized until AFTER the first production passes.

Summary/Outlook
Computing plan is well developed for ProtoDUNE-SP. Multiple documents now enumerate everything, helping to frame the direction in which effort is directed.
Most CERN/FNAL initiatives (e.g. data movement) are demonstrated, on track, or pending external factors (EHN1 availability). The exception is bandwidth out of CERN (pursuing LHCONE membership).
Need additional human resources across the board; most critical is database development and support.
Resource estimates are still in place and still scary. They have been re-evaluated, and SP and DP are more consistent than previously. CPU requests may not fit in the available resources in light of reco times.