Solving Data Classification Challenges with Support Vector Machines

Explore the limitations of perceptrons in classifying non-linearly separable data and how Support Vector Machines offer a solution through effective algorithms. Learn about transforming data spaces, avoiding overfitting, and addressing the issue of multiple hyperplanes in classification tasks.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

AI for Medicine Lecture 09: Support Vector Machines Part I February 09, 2022 Mohammad Hammoud Carnegie Mellon University in Qatar

Today Last Wednesday s Session: Perceptrons for Molecular Genetics Today s Session: SVMs- Part I Announcements: A2 is out. It is due on Feb 15 by midnight Quiz I will be on Feb 21

Outline SVM Motivation Background Objective Algorithm

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data The biggest problem is when data is not linearly separable (problem 1) In this case, any line through the examples will yield examples of both classes on at least one of the sides!

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data The biggest problem is when data is not linearly separable (problem 1) In principle, it is possible to transform the examples into another space, which makes them linearly separable

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data The biggest problem is when data is not linearly separable (problem 1) However, doing so could lead to overfitting (the situation where the classifier works well on training data but not on new data)

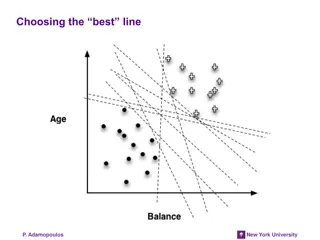

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data And even if the space is (or is made) linearly separable, there could be many hyperplanes, which are not all equally good (problem 2) An acceptable hyperplane and the new example indicated by ? will be classified as a square ?

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data And even if the space is (or is made) linearly separable, there could be many hyperplanes, which are not all equally good (problem 2) Another acceptable hyperplane, but the new example will now be classified as a circle (although it seems closer to squares!) ?

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data Yet, another problem is that perceptrons usually stop as soon as there are no misclassified examples (problem 3) This hyperplane just managed to accommodate the two squares it touches before stopped

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data Yet, another problem is that perceptrons usually stop as soon as there are no misclassified examples (problem 3) This hyperplane also just managed to accommodate the two circles it touches before stopped

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data Yet, another problem is that perceptrons usually stop as soon as there are no misclassified examples (problem 3) If either of these hyperplanes represents the final weight vector, the weights will be biased toward one of the classes

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data Yet, another problem is that perceptrons usually stop as soon as there are no misclassified examples (problem 3) For example, if this hyperplane is the one that the perceptron chooses, the example indicated by ? will be classified as a circle ?

Problems of Perceptron Perceptrons exhibit various limitations in their ability to classify data Yet, another problem is that perceptrons usually stop as soon as there are no misclassified examples (problem 3) However, if this hyperplane is the one that the perceptron selects, the example indicated by ? will be classified as a square! ?

Support Vector Machines A Support Vector Machine (SVM) is an improvement over a perceptron, whereby it addresses the three aforementioned problems An SVM selects one particular hyperplane (the green line in the figure) that not only separates the examples into two classes, but does so in a way that maximizes the margin (? ? in the figure), which is the distance between the hyperplane and the closest examples of the training set ? ? ? ? Support Vectors

Outline SVM Motivation Background Objective Algorithm

Vectors A vector can be visually represented as an arrow Head = 4 ? 3 ? 3 ? = (4, 3) Or: Tail 4 A vector can also be represented as an ordered list or a tuple by starting from its tail and asking how far away is its head in the: Horizontal direction Vertical direction

Unit Vectors Any vector can also be represented as a sum of scaled up versions of unit vectors It goes in the horizontal direction only and has length 1 = 1 0 ? ? = 4 3 ? 3 ? = 0 It goes in the vertical direction only and has length 1 ? 1 4 ? = 41 =4 0+0 =4 0+ 30 ?= 4 ? + 3 ? 3 3 1

Vector Magnitude How can we calculate the length (or magnitude) of a vector? Pythagorean theorem: ??+ ??= ?? ? c = ? = ??+ ?? b = 3 a = 4 Magnitude of the vector = ? = 32+ 42 = 25 = 5 How can we keep vector, ?, pointing to the same direction, but change its magnitude to 1 (i.e., turn it into a unit vector)?

Vector Normalization How can we construct a unit vector out of a given vector of any length? Input: Output: Vector of any length The vector with the same direction, but with length 1 Normalization

Vector Normalization How can we construct a unit vector out of a given vector of any length? Input: Output: ? ? ? = ? ? Normalization: ? ?

Vector Normalization How can we construct a unit vector out of a given vector of any length? ? ? ? = 4/5 3/5 3 = 4 ? ? = ? 3 4 Let us verify that its length is 1 ? = 32+ 42 = 25 = 5

Vector Normalization How can we construct a unit vector out of a given vector of any length? ? ? ? = 4/5 3/5 3 = 4 ? ? = ? 3 4 ? = (3 5)2+(4 5)2 ? = 32+ 42 = 25 = 5 = (9 + 16)/25 = 1

Vector Inner Product Assume the following two vectors: Project ? onto ? ? = ?1 ? = ?1 ? ?? ?? ?2 ?2 ? p What is the inner product of ? and ?? ?? ?? ?? ? = ? p is the length of the projection of ? onto ?

Vector Inner Product Assume the following two vectors: Project ? onto ? ? = ?1 ? = ?1 ? ?? ?? ?2 ?2 ? p What is the inner product of ? and ?? ?? ?? ?? ? = ? ? ?1 ?2 ?? ? = ?1 ?2 = ?1 ?1+?2 ?2 p is the length of the projection of ? onto ? Can be signed

Vector Inner Product Assume the following two vectors: ? = ?1 ? = ?1 ?2 ?2 ? Project ? onto ? ? What is the inner product of ? and ?? ?? p ?? ? = ? ? ?1 ?2 ?? ? = ?1 ?2 = ?1 ?1+?2 ?2 p is negative here

Outline SVM Motivation Background Objective Algorithm

What is the Objective of SVM? The objective of an SVM is to select a hyperplane w.x + b = 0 that maximizes the distance, ? ?, between the hyperplane and any example in the training set Intuitively, we are more certain of the class of examples that are far from the separating hyperplane than we are of examples near to that hyperplane ? ? ? ? w.x + b = 0

What is the Objective of SVM? The objective of an SVM is to select a hyperplane w.x + b = 0 that maximizes the distance, ? ?, between the hyperplane and any example in the training set Thus, it is desirable that all the training examples be as far from the hyperplane as possible (but on the correct side of that hyperplane, of course!) ? ? ? ? w.x + b = 0

What is the Objective of SVM? More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), maximize ? ?(by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, yi(w.xi+ b) ? ? Notice that yi, which must be +1 or 1, determines which side of the hyperplane the point ximust be on, so the relationship to ? ? is always correct!

What is the Objective of SVM? More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), maximize ? ?(by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, w.xi + b ? ?if yi = +1 yi(w.xi+ b) ? ? w.xi+ b -? ?if yi= 1 But, by increasing w and b, we can always allow a larger value of ? ?(e.g., If we replace w by 2w and b by 2b, then for all i, yi((2w).xi+ 2b) 2? ?

What is the Objective of SVM? More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), maximize ? ?(by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, w.xi + b ? ?if yi = +1 yi(w.xi+ b) ? ? w.xi+ b -? ?if yi= 1 Hence, 2w and 2b are always better than w and b, so there is no best choice and no maximum ? ?!

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector Consequently, the parallel hyperplanes that just touch the support vectors can be described by the equations w.x+b = +1 and w.x+b = 1

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector x1 Consider one of the support vectors, (say, x2, in the figure) and let x1 be the projection of x2 to the upper hyperplane x2 ? ? w.x + b = +1 ? ? w.x + b = -1 w.x + b = 0

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector x1 The distance from x2 to x1 in units of ? is 2? ?. That is, ? x2 ? x1 = x2 + 2? ? ? ? ? w.x + b = +1 ? ? w.x + b = -1 w.x + b = 0

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector x1 Since x1 is on the hyperplane defined by w.x + b = +1, we know that w.x1 + b = 1. If we substitute for x1: x2 ? x1 = x2 + 2? ? ? ? ? ? w.(x2 + 2? ? ? ) + b = 1 w.x + b = +1 ? ? w.x + b = -1 w.x + b = 0

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector Regrouping terms, we see: x1 = x2 + 2? ? x1 ? ? ? x2 w.(x2 + 2? ? ? ) + b = 1 ?.? w.x2 + b + 2? ? ? = 1 ? ? -1 w.x + b = +1 ? ? w.x + b = -1 w.x + b = 0

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector Regrouping terms, we see: x1 = x2 + 2? ? x1 ? ? ? x2 w.(x2 + 2? ? ? ) + b = 1 ?.? w.x2 + b + 2? ? ? = 1 ? ? ? w.x + b = +1 ? ? ? ? ? = 1 ? ? w.x + b = -1 w.x + b = 0 ? ? = ?

The Objective of SVM How can we solve this problem? By normalizing the weight vector w (i.e., ? ? ), thus making it a unit vector Regrouping terms, we see: x1 ? ? ? = ? x2 Hence, maximizing ? ? is the same as minimizing ? ? ? w.x + b = +1 ? ? w.x + b = -1 w.x + b = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), maximize ? ?(by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, w.xi+b ? ?if yi = +1 w.xi+ b ? ?if yi= 1

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, w.xi+b 1if yi = +1 But, why would this constraint serve in materializing large margin classification? w.xi+ b -1if yi= 1

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, For illustrative purposes, let us assume only two features (i.e., xi = [?? and b = 0 w.xi+b 1if yi = +1 ?, ?? ?] and w = [w1, w2]) w.xi+ b -1if yi= 1

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, What is the inner product of w and xi (i.e., w.xi)? ?? ? w.xi 1if yi = +1 ?? w2 w w.xi -1if yi= 1 ? w1 ??

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, What is the inner product of w and xi (i.e., w.xi)? ?? w.xi 1if yi = +1 Project xi onto w w pi. ? w.xi -1if yi= 1 ? + w2.?? ? = w1.??

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, What is the inner product of w and xi (i.e., w.xi)? ?? pi. ? 1if yi = +1 Project xi onto w w pi. ? pi. ? -1if yi= 1 ? + w2.?? ? = w1.??

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w pi. ? -1if yi= 1 ?? Project x1 onto w w.x = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w ?? p1 is positive and very small, hence, for p1. ? to be 1, ? has to be very large! pi. ? -1if yi= 1 ?? w.x = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w ?? But, the optimization objective is to minimize ? , hence, SMV will not prefer this decision boundary pi. ? -1if yi= 1 ?? w.x = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w ?? p2 is negative and very small, hence, for p2. ? to be -1, ? has to be very large! pi. ? -1if yi= 1 ?? w.x = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w ?? But, the optimization objective is to minimize ? , hence, again, SMV will not prefer this decision boundary pi. ? -1if yi= 1 ?? w.x = 0

The Objective of SVM More formally, the goal of any SVM can be stated as follows: Given a training set (x1, y1), (x2, y2), . . . , (xn, yn), minimize ? (by varying w and b) subject to the constraint that for all i = 1, 2, . . . , n, If SVM encounters this green decision boundary, will it choose it? pi. ? 1if yi = +1 w NO ?? pi. ? -1if yi= 1 ?? w.x = 0