Spiking Neural Networks: From Modeling to Computational Challenges

Explore the world of spiking neural networks through various aspects such as modeling spiking neurons, neural network structure, computational problems, and the role of randomness in breaking symmetry. Dive into topics like neuro- RAM units, analysis of neural networks, and the implications of randomness on neural leader elections. Discover the complexity measures and computational tradeoffs involved in studying biologically-inspired neural networks from a distributed, algorithmic perspective.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

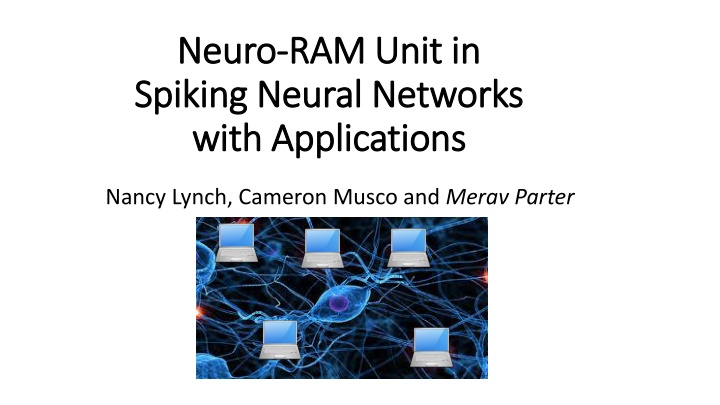

Neuro Neuro- -RAM Unit in RAM Unit in Spiking Neural Networks Spiking Neural Networks with Applications with Applications Nancy Lynch, Cameron Musco and Merav Parter

High Level Goal High Level Goal Study biologically neural networks from a distributed, algorithmic perspective.

Neural Networks Neural Networks Nodes (neurons), Edges (synapses). Directed weighted graph, weight indicates synaptic strength. Two types of neurons: Excitatory (all positive) and Inhibitory (all negative). ? ?

Modeling Spiking Neurons Modeling Spiking Neurons Node (e.g. neuron) is a probabilistic threshold gate 1 Neuron s threshold (bias): ? ? =: weighted sum of neighbors Det. Neuron Firing probability ? ? ? 0 1 3 2 1 ? Spiking Neuron Fires with probability 1 1+? (? ?) 1/2 weight ?

Modeling Spiking Neural Networks Modeling Spiking Neural Networks 0 2 Given: Weighted directed graph. Threshold for each neuron Initial states (0/1 to all nodes). 0 2 5 3 1 2 1 2 4 1 1 Dynamics: Synchronous discrete rounds. Firing in round ? depends on firing in round ? 1.

Computational Problems in SNN Computational Problems in SNN Target function ?: 0,1? 0,1? ? 0 1 1 0 0 Complexity measures: Size (number of aux. neurons) Auxiliary Neurons Time (number of rounds to convergence) ? 0 0 1

The Main Theme The Main Theme Computational tradeoffs Role of randomness Previous work [Lynch, Musco, P 17]: Neural leader election Randomness was crucial to break symmetry

Randomness Helps for Breaking Symmetry Randomness Helps for Breaking Symmetry ``Neural Leader Election : Given a subset of firing neurons, design a circuit that converges fast to one firing neuron 0 1 1 1 0 1 Input Theorem [Lynch-Musco-P 17]: : Circuit with (loglog?/log?) auxiliary neurons converging in ?-rounds. Auxiliary Neurons 0 0 0 1 0 0 Output

This Work: Similarity Testing This Work: Similarity Testing ?2 ?1 000010001000100 001010101000010 Auxiliary Neurons 1 ?? ?1= ?2 0 ?? ?1 ?2

Similarity Testing Similarity Testing - - Equality Equality ?1 ?2 ? ? Solution with two auxiliary threshold gates 000010001000100 001010101000010 2? 2? 2? 2? Not biologically plausible Not clear how to obtain sublinear size even with spiking neurons. ?1 ?2 ?2 ?1 1 1 ?1 ?2 ??? ?2 ?1

Approximation Similarity Testing Approximation Similarity Testing Distinguish between: ?1= ?2 vs. ??? ?1,?2 ?? ?2 ?1 000010001000100 001010101000010 Auxiliary Neurons Goal: sublinear size

Approximate Equality Approximate Equality Distinguish between: ?1= ?2 vs. ??? ?1,?2 ?? ?2 ?1 ? ? 000010001000100 001010101000010 Idea: Implementation: (I) Select log?/? indices at random Encoding random index log n spiking neurons (II) Check if ?1,?2match in these indices Random access memory

Key Building Block: Neuro Key Building Block: Neuro- -RAM RAM ? ? ?????: 0,1?+log ? 0,1 0 1 0 1 0 1 Input: ? bit vector ?, index vector ? Auxiliary Neurons Output: ?? ? 1 The Neuro-RAM construction is deterministic But the module is used when Y is random

Solving Approximate Testing with Neuro Solving Approximate Testing with Neuro- -RAM RAM ?2 ?1 1 0 1 0 1 0 1 1 log? random neurons ??2,1 ??1,1 = 0 ?1 1 ??2,? ??1,? = 1 ?? 1

Main Results (Neuro Main Results (Neuro- -RAM) RAM) Theorem 1 (Upper Bound): Deterministic circuit with ? ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds (? ?). Theorem 2 (Lower Bound): Every ?-round randomized circuit requires ? ? ?log?? auxiliary neurons.

Main Results (Applications) Main Results (Applications) Theorem 3 (Approximate Similarity Testing): There is SNN with ?( ???? ?/?) auxiliary neurons that solves ? approx. equality in ? ? rounds. (if ??= ??, w.h.p. fires 1, if ??? ??,?? ?? does not fire w.h.p.) Theorem 4 (Compression): Implementing JL random projection from dimension ? to ? < ? using ?(? ?)Neuro-RAM modules each with ? Beating the na ve solution when ? > ?. ? neurons.

High Level Idea of Neuro High Level Idea of Neuro- -RAM Module RAM Module Here: ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds. Divide ? input neurons ? into ? buckets. Divide log? index neurons ? into two. Step 1: select bucket ??using first half of ? ?2 ?0 ?3 ?1 ?2 ?1 [11 10] [10 01] [11 10] [10 10] [00 10] ?2 ?0 ?3 ? ?1

High Level Idea of Neuro High Level Idea of Neuro- -RAM Module RAM Module Here: ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds. ?2 ?0 ?3 ?1 ?2 ?1 [11 10] [10 01] [11 10] [1? 10] [00 10] ?2 ?0 ?3 ? ?1 Selecting ??with ? = ??? ?1 The non-selected ??are depressed and will not fire.

High Level Idea of Neuro High Level Idea of Neuro- -RAM Module RAM Module Here: ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds. ?2 ?0 ?3 ?1 ?2 ?1 [11 10] [10 01] [11 10] [1? 10] 2? ? [00 10] 2? ?2 ?0 ?3 ? ?1 Next: use ? ? decoding neurons to decode the value of the bit ??? ??

High Level Idea of Neuro High Level Idea of Neuro- -RAM Module RAM Module Here: ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds. ?2 ?0 ?3 ?1 ?2 ?1 [11 10] [10 01] [11 10] [10 ?0] [00 10] 2? ? 2? ?3 ? ? Successive decoding: In the beginning ?3 fires only if first bit in ?3 fires. In step ?, ?3fires only if ?? bit in ?3 fires. This continues until getting a stopping signal from selected ?? ?3 ?1 ?2 ?0

Lower Bound for Neuro Lower Bound for Neuro- -RAM with Spiking Neurons RAM with Spiking Neurons Theorem 2 (Lower Bound): Every randomized SNN that solves the INDEX function in ? rounds, requires ? ?log?? auxiliary neurons. ? Roadmap: Step 1: Step 2: Reduction from SNN to Deterministic Circuit Lower Bound for Det. Circuit via VC dimension

Step 0: Reduction from SNN to Feed Step 0: Reduction from SNN to Feed- -Forward SNN ?-round SNN with ? aux. neurons solving INDEX with high probability Forward SNN Feedforward SNN with O(??) neurons 0 1 0 1 0 1 0 1 0 1 0 1 1 2 ? 1 1 ? 1

Step 1: Reduction to Distribution of Deterministic Circuits Step 1: Reduction to Distribution of Deterministic Circuits Probabilistic Circuit ?? ? Distribution over deterministic circuits ? Goal: find , s.t. for every input ? ??????? = 1 ? = ???? ? = 1 | ?

Step 1: Reduction to Distribution of Deterministic Circuits Step 1: Reduction to Distribution of Deterministic Circuits Probabilistic Circuit ?? Distribution over deterministic circuits ? ? ? aux. neurons ? Det. threshold neuron ? whose threshold is sampled from a logistic distribution with mean ?(?) Spiking Neuron u with threshold b(u): 1 Fires with probability 1 + ? (? ?(?))

Step Step 2 2: Reducing to Deterministic Circuits : Reducing to Deterministic Circuits for Subset of Inputs for Subset of Inputs ? ? most X values A deterministic circuit that solves INDEX for This circuits solves 2? 1 functions: ??: ?,???? ? ?,? , ??(?) = ?? ? ?? ?? ?( ????) 2?possible X values Circuit is correct But a FF circuit with ? gates has VC at most ?/log? We have ? ? = ?(?/?????)

Summing Up: Summing Up: FF deterministic circuits for INDEX FF SNN for INDEX SNN for INDEX Exists det. circuit with ? ? ? gates that solves INDEX for half of X-space ??has large VC as solves many functions. Hence must have many gates. ??

Main Results (Neuro Main Results (Neuro- -RAM) RAM) Theorem 1 (Upper Bound): Deterministic circuit with ? ? ? auxiliary neurons that implements Neuro-RAM in ? ? rounds (? ?). Theorem 2 (Lower Bound): Every ?-round randomized circuit requires ? ? ?log?? auxiliary neurons.

Take Home Message Take Home Message Biologically inspired model for SNN Main questions: computational tradeoffs, role of randomness. Randomness not needed for neuro-RAN But does help for similarity testing and compression Remaining problems: exact equality? simpler circuits?