Submodular Maximization and Combinatorial Optimization Insights

Discover the realm of submodular maximization and combinatorial optimization through concepts such as maximum matching, Max-SAT, and interesting special cases. Understand the significance of submodularity in various fields, from economics to sensor covering. Dive into why submodular functions matter and how they are prevalent in different domains like economics, sensor covering, and combinatorics.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

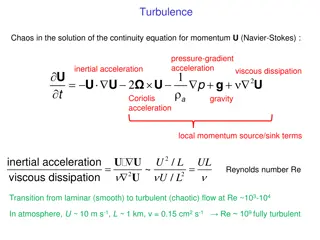

Parameterization in Submodular Maximization Moran Feldman University of Haifa

Combinatorial Optimization max f(S) s.t. S N S obeys a constraint C Given a ground set N. N is the set of edges of a graph. The constraint C allows only legal matchings. f(S) = |S| is the size of the set S. Maximum Matching 2

Combinatorial Optimization (x y z) (x z w) max f(S) s.t. S N S obeys a constraint C S = {y, z} f(S) = 1 Given a CNF formula. An element in N for every variable. f(S) is the number of clauses satisfied by assigning 1 to the variables of S (and 0 to the other variables). Max-SAT 3

An Interesting Special Case max f(S) s.t. S N S obeys a constraint C Generalizes Maximum Independent Set Interesting to study special cases. Should have the right amount of structure. Cannot be approximated 4

An Interesting Special Case max f(S) s.t. S N S obeys a constraint C Well Known Example The function f is linear. C is defined by a totally unimodular matrix (a bit inaccurate). Every element in N has a weight. f(S) is the sum of the weights of S s elements. A matrix is totally unimodular if the determinant of every square submatrix of it is either -1, 0 or 1. Can be solved (exactly) efficiently. Every extreme point solution is integral. 5

An Interesting Special Case max f(S) s.t. S N S obeys a constraint C Another Well-Studied Example Problems in which the objective function f is submodular. What is a submodular function? A class of functions capturing diminishing returns 6

Why Should We Care? Diminishing returns (and thus, submodularity) appear everywhere. Economics Buying 100 apples is cheaper than buying them separately. Sensor Covering The more sensors there are, the less each one covers alone. 7

Why Should We Care? Diminishing returns (and thus, submodularity) appear everywhere. Combinatorics For example, the cut function of a graph. Summarization Given a text, extract a small set of sentences capturing most of the information. Extract a small set of representative pictures from a video. 8

Brief History of Submodular Max. 1970s First results for submodular maximization Combinatorial algorithms: greedy and local search Around 2010 Introduction of continuous tools (multilinear extension, symmetry gap) A new wave of theoretical results A few years later Since submodularity captures summarization, increasing interest in the ML community 9

Introducing Parameterization What about problems with submodular objective functions that are nearly linear? Traditional View Submodularity and linearity are Boolean properties. A nearly linear submodular function is no better than a Newer View Define a parameter called curvature to measure distance from linearity. Approximation ratios in terms of the curvature. Submodular Maximization Problems Allow for better approximation ratios. general submodular function. Linear Maximization Problems 10

Introducing Parameterization we get improved results for submodular maximization problems. By relaxing linearity to be a continuous property, If we relax the property of submodularity, we can get results for non-submodular functions. Similar improvements can be obtained by relaxing other Boolean properties. Maximization Problems Submodular The properties can be of the objective, or the constraint. Linear Extends the usefulness of submodular maximization techniques. Maximization Problems 11

Formal Setting Objective function 5 4 3 Given a ground set N, a set function f: 2N R assigns a number to every subset of the ground set. A set function is submodular if: o f(A + u) f(A) f(B + u) f(B) 7 N A B N, u B. 11 0 6 8 10 11 5 12

Basic Problem Another Definition A set function is monotone if: o f(A) f(B) A B N Problem of Interest Input: a non-negative monotone submodular function f: 2N . a positive integer k. Objective: output a set S N of size at most k maximizing f. Applications The summarization applications mentioned above. k-Cover 13

k-Cover Elements E = {e1, e2, , en} and sets s1, s2, , sm E. Problem: find k sets that cover together as many elements as possible. ( ) S f Elements E = {e1, e2, , en} and sets s1, s2, , sm E. Formally, for every S = {si1, si2, , sik} the objective is = s i s S i Observation: f(S) is a non-negative monotone submodular function. s2 Standard monotone submodular function. Good to keep in mind as intuition. s3 s1 s4 s5 14

Approximability S f(S) 0 Algorithm {a} 1 {b} 2 A simple greedy algorithm guarantees (1 1/e)-approximation. [NWF78] {a, b} 2 Inapproximability No polynomial time algorithm can guarantee (1 1/e + )-approximation. [NW78] 15

Curvature: Relaxing Linearity Applies only to monotone submodular functions. General Monotone Submodular Function Linear Function Marginal Value Marginal Value Adding elements to the set Adding elements to the set Curvature [CC84] Marginal Value Always a number between 0 and 1. A lower number = more linearity. Curvature is 0 if and only if the function is linear. c 1-c Adding elements to the set 16

Optimizing with Curvature Some works tried to reanalyze existing algorithms in terms of the curvature. This leads to suboptimal results. f(OPT) = g(OPT) + (OPT) (OPT) (1 c) f(OPT) Sviridenko, Vondr k and Ward (2017) suggested instead to decompose the objective. Submodular Component g Original Function Linear Component Marginal Value Marginal Value c c 1-c 1-c Adding elements to the set Adding elements to the set 17

New Goal New Problem of Interest Input: a non-negative monotone submodular function g: 2N . a linear function : 2N . a positive integer k. Objective: output S N of size at most k maximizing g + . Theorem [SVW17] There is an algorithm outputting a set S obeying: f(S) (1 1/e) g(OPT) + 1 (OPT) The greedy algorithm finds a set S obeying: f(S) (1 1/e) g(OPT) + (1 1/e) (OPT) 18

Implications When g and are obtained by the curvature based decomposition: f(S) (1 1/e) g(OPT) + (OPT) = (1 1/e) f(OPT) + (1/e) (OPT) (1 c/e) f(OPT) . Smoothly interpolates between the linear and general submodular cases. Tight for every value of c. [SVW17] Maximization of g + sums has found implications also beyond curvature optimization: soft constraints regularizes 19

Submodularity Ratio: Relaxing Submoduarlity Applies only to monotone functions. For a monotone submodular function f : 2N Given two sets S T N, Originally defined by Das and Kempe (2011) under the name -weakly submodular function . R, T ? ? ? ? ? ? ? ? ? . S ? ? ? is always between 0 and 1. Higher value = more submodular. = 1 if and only if f is submodular. For a general monotone function f : 2N The submodularity ratio is the minimal value such that, for any two sets S T N, R, [? ? ? ? ]. ? ? ? ? ? ? ? ? 20

The Greedy Algorithm The Greedy Algorithm 1. While there are more elements that can be added to the solution: 2. Pick among them the element increasing the value by the most. 3. Add it to the solution. Analysis [DK11] Value f(S) OPT S f(S OPT) f(OPT) The Current Solution 21

Analysis (cont.) Conclusion Some element increases the value by at least: [? ??? ? ? ] ? Observation The elements of OPT \ S (together) increase the value by at least: ( ) OPT f Submodularity Ratio . ( ) S f /k /k(1- /k) 1- /k (1- /k)2 (1- /k)k e- Tight for any value of . [HFWK19] f(S0) f(S1) f(S2) f(Sk) f(OPT) ? ?? 1 ? ?(???) +? ?(?0) ? ??? ? ?? ? [? ??? ? ?0] 22

Alternative Parameters -DR Submodularity Ratio [ZQT18] The marginal contribution can grow when elements are added, but at most by a factor of -1. d-Supermodularity Degree [FI14] For each element v, only small set of d elements can make the marginal contribution of v grow. -Meta Submodularity [GSS24] Marginal values with respect to S can increase, but at a rate roughly proportional to /|S| times their current values. 23

Monotonicity Ratio: Relaxing Monotonicity A non-negative function f: 2N is monotone if, for any two sets S T N, f(T) f(S). The monotonicity ratio of f is the maximum m such that f(T) m f(S) for any such S and T. The monotonicity ratio is between 0 and 1, and it is 1 for a monotone function. Maybe indicates that, to get tight results for non-monotone objectives, we need algorithms that do not yield such linear guarantees. T S For many problems, it is not difficult to get a guarantee of the form: m for monotone case + (1 m) The same definition previously appeared in a Ph.D. thesis of [I15], but was minimally studied. known guarnatee Defined and studied in our work. [MF22] known guarnatee for non monotone case. For non-monotone problems we usually do not have tight results. The only tight result known does not have the above form. 24

A Non-monotone Model Problem The Problem Input: a non-negative submodular function f: 2N . Objective: output a set S N of maximizing f. Application: Max-DiCut Instance: a directed graph G = (V, E) with arc capacities ce 0. Objective: find a set S V of nodes maximizing ( ) S f ( ) S = f uc v , u S v S = uc v , u S v S (the total capacity of the arcs crossing the cut). Capacity: 2 Marginal gain: 0 Marginal gain: -1 25

Approximability Theorem There is a polynomial time 1 / (2 m)-approximation algorithm for unconstrained submodular maximization. No polynomial time algorithm can improve the above approximation ratio by a positive constant. The symmetry gap translates into hardness [V13]. Hardness [MF22] Best solution value: 1 Symmetrical fractional solution value: m [1 (1 p)2] + (1 m) 2p(1 p) Best symmetrical fractional solution value: 1 / (2 m) [for p = 1 / (2 m)] m p p 1 - m 26

Relaxing Down-Closeness A constraint is down-closed if removing elements from a feasible set keeps it feasible. Non-monotone submodular optimization subject to non- down-closed constraints was considered a lost cause (except for very simple constraints such as cardinality). n Best solution value: 1 Symmetrical solution value: 1/n Pick all but one No constant approximation [V13] Pick one 27

Parameterizing by Even for min = 0, this only gives - approximation. The state-of-the-art approximation for down-closed constraints is 0.401. [BF24] Durr et al. (2021) suggested bypassing the hardness of non- down-closed constrained by parameterizing with min . min = minimum -norm of any fractional solution The parameter min does not interpolate between general and down- closed constraints. For example, if the size of the ground set is n, and we allow only solutions containing at least k elements, then min = k/n. Theorem There is a polynomial time algorithm guaranteeing (1/4) (1 min ). [D22] This is the best possible approximation in terms of min . [MF23] 28

Interpolating State-of-the-art for down-closed constraints is 0.401. In a recent work (IJCAI 2024), we consider a decomposition of the constraint into a down-closed part, and a general part. Our guarantee depends on: min , and the fraction of the optimal solution value that can be attributed to the down-closed part of the constraint. A basic technique gives 1/e 0.367, and is enhanced to get the better results. In this proof of concept work, we only aimed to utilize the basic technique. Guarantees (1/e) (1 min ) . Recovers the optimal guarantee of (1/4) (1 min ) . Graph for the case of min = 0 All of the value attributed to the down-closed part. None of the value attributed to the down-closed part. 29

? ? Questions ? ? ?