Submodular Maximization within Matroid Constraints

"Explore the application of submodular maximization subject to matroid constraints in various fields such as machine learning, image processing, and algorithmic game theory. Understand the challenges posed by diminishing returns and the unified continuous greedy algorithm. Discover how this approach generalizes classical problems and its impact on sensor coverage, social networks, and image summarization."

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

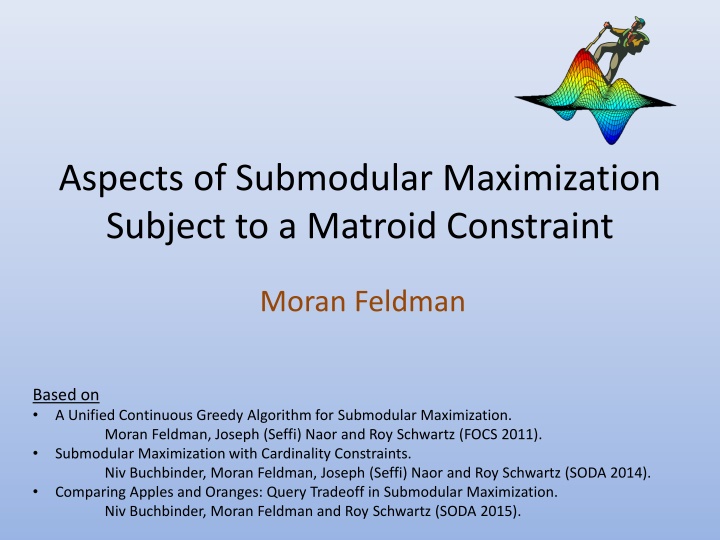

Aspects of Submodular Maximization Subject to a Matroid Constraint Moran Feldman Based on A Unified Continuous Greedy Algorithm for Submodular Maximization. Moran Feldman, Joseph (Seffi) Naor and Roy Schwartz (FOCS 2011). Submodular Maximization with Cardinality Constraints. Niv Buchbinder, Moran Feldman, Joseph (Seffi) Naor and Roy Schwartz (SODA 2014). Comparing Apples and Oranges: Query Tradeoff in Submodular Maximization. Niv Buchbinder, Moran Feldman and Roy Schwartz (SODA 2015).

Submodular Maximization Subject to a Matroid Constraint What? Generalizes Classical Problems Max-SAT, Max-Cut, k-cover, GAP Applications Machine Learning Image Processing Algorithmic Game Theory Why? 2

Sensor Coverage Sensors kL Large sensors Region kS Small sensors Objective Cover as much as possible of the region with sensors. Observation Coverage exhibits a diminishing returns. 3

Influence in Social Networks Objective: Sell SuperPhones. Means: Give k phones for free. 0.6 S S 0.8 0.2 0.4 S 0.3 0.2 Again, we have diminishing returns S 0.9 S 4

Image Summarization Objective Select k pictures representing as much as possible of the trip. Once more, diminishing returns. Can be quantified by image processing techniques. [Tschiatschek et al., NIPS14] 5

The 3 Components of the Problem 98 1. A ground set N of elements Possible positions for large/small sensors Social network users Images 2. A valuation function f Assigns numerical values to subsets Exhibits diminishing returns Such a function is called submodular 6

Example and Formal Definition 7 11 0 6 8 10 11 5 5 4 3 A B N, u B uf(A+u) f(A) f(B+u) f(B) N A B 7

The Third Component Cardinality Constraint Partition Matroid Constraint k1 k2 k3 k Matroid Constraint Graphical Matroid Linear Matroid z More fancy constraints: y x 8

The Problem Instance A ground set N A submodular function f A matroid constraint Objective Find a subset S N obeying the constraint and maximizing f(S). Generalizes NP-hard problems. Exact algorithm is unlikely. Approximation algorithms. S is feasible S is feasible f(S) f(OPT) E[f(S)] f(OPT) Randomized S -Approx. Algorithm 9

What can be done in polynomial time? How fast can it be done? Aspects of Submodular Maximization Subject to a Matroid Constraint Which additional properties of f can help us? Changing the model: online, streaming, secretary 10

Remarks Non-negativity Assumption 0 If negative values are allowed, f(OPT) can be assumed to be 0. Non-zero multiplicative approximation implies an exact algorithm. Value Oracle S f(S) If f is represented by a table, the problem becomes trivial. Assume access to a value oracle. Polynomial time complexity in |N|. 0 {a} 1 {b} 2 {a, b} 2 11

The field of Submodular Maximization Our problem is an important representative problem. Demonstrating: Techniques Aspects Has many applications and a long history: As early as the 1970 s [Fisher et al., Math. Program. Stud. 1978]. Very active field in recent years. 12

First Case Cardinality Constraint Constraint Objective Function Submodular Non-negative Cardinality Some results assume monotone objective. k A B N A B f(A) f(B) 13

Summary of Results The greedy algorithm Natural algorithm Approximation ratio of 1 1/e for monotone functions [Fisher et al., Math. Program. Stud. 1978] The best possible [Nemhauser and Wolsey, Math. Oper. Res. 1978] Random Greedy [Buchbinder et al., SODA 2014] A simple variant of greedy achieving an approximation ratio of 1/e for non-monotone Hardness results in this presentation are unconditional. They are based on information theoretic arguments. functions. In the same work we achieve also the state of the art approximation ratio of 1/e + 0.004. The corresponding hardness is 0.491. [Oveis Gharan and Vondrak, SODA 2011] 14

Faster Algorithms Monotone Functions Non-monotone Functions Oracle Queries as a Complexity Measure Queries are nontrivial in many applications. Independent of the model. Typically, the oracle queries State of the art Ratio: 1 - 1/e - 1/e - Previous result: n k log O O(nk) [Badanidiyurua and Vondrak, SODA 2014] (Random Greedy) represent the time complexity up to polylogarithmic factors. Our result: [Buchbinder et al., SODA 2015] n n k n k 1 2ln + O O(n ln -1) ln ln O k 15

The Greedy Algorithm 1. Start with the empty solution. 2. Do k times: 3. Add to the solution the element contributing the most. Analysis Value f(S) OPT S f(S OPT) f(OPT) The Current Solution 16

Analysis (cont.) Conclusion Some element increases the value by at least: ( ) k Observation The elements of OPT \ S (together) increase the value by at least: ( ) OPT f Submodularity ( ) S f OPT f ( ) S f (1-1/k)k 1/e (1-1/k)2 1/k 1/k(1-1/k) 1-1/k f(S0) f(S1) f(S2) f(Sk) f(OPT) ( ) S ( ) e ( ) S 0 f 1 f OPT ) f e ( ( ) S ( ) ( ) S e + 1 f f f OPT 0 OPT k f k 17

The Average Observation Recall Conclusion Some element increases the value by at least: ( k k Conclusion A random element of M increases the value, in expectation, by at least: ( Observation The elements of OPT \ S (together) increase the value by at least: ( OPT S f Submodularity ) ) ( ) S ( ) S f f S S OPT OPT f f ) ( ) S f Let M be the set of the k elements with the largest marginal contributions to S. Non-monotone functions. Fast algorithms. This simple observation has applications for: 18

The Random Greedy Algorithm 1. Start with the empty solution. 2. Do k times: 3. Let M be the set of the k elements with the largest marginal contributions to the solution. 4. Add a random element of M to the solution. Analysis For monotone functions, approximation ratio of 1 1/e, in expectation, by the Average Observation and the above analysis. For non-monotone functions, the analysis fails only because we can no longer bound: f(S OPT) f(OPT) 19

Intuition - Why is Randomness Important There might be an element u which looks good has a large marginal contribution. However, this element might be evil any solution containing it is poor. The (deterministic) greedy might be tempted to take u. A randomized algorithm has a chance to avoid u. 20

How Bad Can f(S OPT) Be? All the elements of (together) can only decrease the value to 0. ( OPT OPT f OPT ) 0 What happens if S contains every element with probability at most p? u OPT Concave by Submodularity Theorem: If S contains every element u with probability at most p, then: ( ) ( S OPT f E E[f(OPT S)] ) ( ) 1 p f OPT p 21

Random Greedy and Non-monotone Functions An element is selected with probability at most 1/k in every iteration. Each element belongs to Si with probability at most: i 1 1 k 1 Thus, i 1 1 ( ) ( ) E f OPT S f OPT i k Plugging into the above analysis gives an approximation ratio of: 1/e 0.367. 22

Making Random Greedy Fast The elements of M in decreasing marginal contribution order: p1 p2 M: u1 u2 uk pk Probability to be added For Monotone Functions For Non-Monotone Functions p1 + p2+ + pk= 1 p1 p2 pk p1 = p2= = pk = 1/k 23

Fast Algorithm for Monotone Functions 1. 2. 3. 4. Start with the empty solution. Do k times: Randomly choose a subset A containing elements. Add to the solution the element of A contributing the most. 1 ln n k Approximation Ratio How Fast is this Algorithm Each iteration requires O(n/k log -1) oracle queries. k iterations. In total: O(n log -1) oracle queries. A contains in expectation ln -1 elements of M. With probability at least 1- : A M . Hence, p1 + p2+ + pk 1- A S Approximation ratio of 1 - 1/e - By symmetry: p1 p2 pk 24

Fast Algorithm for Non-monotone Functions Why not use the same algorithm? If |A M| > 1, then we always select the best element. Consequently, p1 >> p2>> >> pk, although we need them to be (roughly) equal. Desired Solution Select an element u A M uniformly at random. Unfortunately, determining |A M| requires us to look at all the elements too costly. Solution 25

Fast Algorithm for Non-monotone Functions (cont.) To make |A M| concentrate, we need A to be larger. Algorithm 1. Start with the empty solution. 2. Do k times: 3. Randomly choose a subset A containing elements. 4. Let B be the set of the best elements in A. 5. Add a uniformly random element of B to the solution. ( ) n 8 ln k 2 / 2 ( ) 8 ln 2 / 2 |A M| (|B| = E[|A M|]) B A Marginal Gain 26

What did We Get? Approximation Ratio The algorithm almost mimics the random greedy, and it approximation ratio is e-1 - . Oracle Queries Each iteration requires O(n -2/k log -1) oracle queries. k iterations. In total: O(n -2 log -1) oracle queries. 27

General Matroid Constraint General Algorithmic Scheme Solve a fractional relaxation In the next slides we will: Define the relaxation. Explain how to approximately solve it. Round the solution Rounding can be done without loss. Pipage rounding [Calinescu et al., SIAM J. Comp. 2011] Swap rounding [Chekuri et al., FOCS 2010] 28

Summary of Results Continuous Greedy [Calinescu et al., SIAM J. Comp. 2011] An (1-1/e)-approximation for monotone functions. Optimal even for cardinality constraints [Nemhauser and Wolsey, Math. Oper. Res. 1978]. Measured Continuous Greedy [Feldman et al., FOCS 2011] An 1/e-approximation for non-monotone functions. State of the art previous result was a 0.325 approximation [Chekuri et al., STOC 2011]. Hardness 0.478 [Oveis Gharan and Vondrak, SODA 2011] Improved results for special cases when the function is monotone: Submodular Max-SAT Submodular Welfare 29

Relaxation Ground set: N = {a, b, c} set {a, b} Optimize over [0, 1]N characteristic vector (1, 1, 0) Matroid constraint Objective function Multilinear extension For a vector x,R(x) is a random set containing every element u N with probability xu. The extension is: F(x) = E[f(R(x))]. For integral points: f and F agree. Matroid polytope Convex hull of the feasible solutions 30

Before Presenting the Algorithms The algorithms we describe are continuous processes. An implementation has to discretize the process by working in small steps. The multilinear extension F: Cannot be evaluated exactly (in general). Can be approximated arbitrary well by sampling. 31

The Continuous Greedy For every time point t [0, 1]: Consider the directions corresponding to the feasible sets. Move in the best (locally) direction at a speed of 1. Feasibility: the output is a convex combination of feasible sets. Approximation Ratio OPT is a good direction Monotonicity: real is better than imaginary . y + OPT Real direction F(y OPT) y y OPT Concave by Submodularity y F(y) Imaginary direction 32

Approximation Ratio - Analysis By the above discussion: ( ) dt dF y ( ) ( ) (y ) (y ) F y OPT F f OPT F Monotonicity By non-negativity, f(y) 0 at time 0. Hence, ( 1 ) ( ) t ( ) F y e f OPT At time t = 1, for monotone functions: 1 1 ( ) ( ) F y f OPT e 33

Mesured Continuous Greedy Main Idea Find the best imaginary direction. Walk in the found imaginary direction. Feasibility The matroid polytope is down-monotone. Reducing the step cannot get us outside of the polytope. Gain In some cases, allows running for more time. Recall: ( y F 1 ) ( ) ( ) t e f OPT Allows optimal approximation ratios for: Submodular Max-SAT Submodular Welfare Approximation Ratio The previous analysis still works. x y y P x P y x 34

Non-monotone Functions Uses of Monotonicity in the Analysis Bounding F(y OPT). The real direction is better than the imaginary one. An Old Trick At time t, for every element u, yu 1-e-t(yu t in the continuous greedy). Every element of appears with probability at most 1-e-t in R(y). F(y OPT) e-t f(OPT) u OPT 35

Non-monotone Functions (cont.) By the above discussion: ( ) dt dF y ( ) ( ) = t (y ) F y OPT F (y ) e f OPT F By non-negativity, f(y) 0 at time 0. Hence, ( ) t ( ) F y t e f OPT At time t = 1: 1 ( ) ( ) ( ) . 0 376 F y f OPT f OPT e 36

Future Work Monotone Functions The basic question is answered (what can be done in polynomial time). Many open problems in other aspects: Fast algorithms almost linear time algorithms for more general constraints. Online and streaming algorithms getting tight bounds. Important for big data applications. ? 37

Future Work (cont.) Non-monotone Functions Largely terra-incognita. Optimal approximation ratio For cardinality constraint? For general matroids? Properties that can help Symmetry? Others? Other Aspects Fast algorithms, online, Here be dragons 38

Other Main Fields of Interest Online and Secretary algorithms State of the art result for the Matroid Secretary Problem. [Feldman et al., SODA 2015] Algorithmic Game Theory Mechanism Design Analysis of game models inspired by combinatorial problems. 39

Questions ? Additional Results on Submodular Maximization Nonmonotone Submodular Maximization via a Structural Continuous Greedy Algorithm. Moran Feldman, Joseph (Seffi) Naor and Roy Schwartz (ICALP 2011). Improved Competitive Ratios for Submodular Secretary Problems. Moran Feldman, Joseph (Seffi) Naor and Roy Schwartz (APPROX 2011). Improved Approximations for k-Exchange Systems. Moran Feldman, Joseph (Seffi) Naor, Roy Schwartz and Justin Ward (ESA 2011). A Tight Linear Time (1/2)-Approximation for Unconstrained Submodular Maximization. Niv Buchbinder, Moran Feldman, Joseph (Seffi) Naor and Roy Schwartz (FOCS 2012). Online Submodular Maximization with Preemption. Niv Buchbinder, Moran Feldman and Roy Schwartz (SODA 2015).