Supervised Distributional Methods for Lexical Inference Relations

This talk asks whether current supervised distributional methods actually learn lexical inference relations. It compares supervised and unsupervised methods across nine word representations and five lexical-inference datasets, and argues that the supervised methods mostly learn whether a word is a prototypical hypernym rather than a relation between the two words.


Presentation Transcript

Do Supervised Distributional Methods Really Learn Lexical Inference Relations? Omer Levy, Ido Dagan (Bar-Ilan University, Israel); Steffen Remus, Chris Biemann (Technische Universität Darmstadt, Germany)

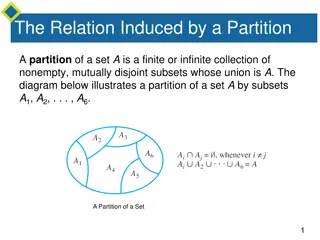

Lexical Inference: Task Definition. Given 2 words (x, y): does x infer y? In the talk, x → y refers to hypernymy ("x is a y"). Dataset: positive examples: dolphin → mammal, Jon Stewart → comedian. Negative examples: shark ↛ mammal, Jon Stewart ↛ politician.

Unsupervised Distributional Methods. Represent x and y as vectors $\vec{x}$ and $\vec{y}$: word embeddings or traditional (sparse) distributional vectors. Measure the similarity of $\vec{x}$ and $\vec{y}$: cosine similarity, $\cos(\vec{x}, \vec{y}) = \frac{\vec{x} \cdot \vec{y}}{\|\vec{x}\| \, \|\vec{y}\|}$, or distributional inclusion (Weeds & Weir, 2003; Kotlerman et al., 2010). Tune a threshold over the similarity of $\vec{x}$ and $\vec{y}$, i.e. train a classifier over a single feature.
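The unsupervised pipeline on this slide (one similarity feature plus a tuned threshold) can be sketched roughly as follows. This is a minimal illustration in plain Python, not the authors' code: the toy vectors, the `tune_threshold` helper, and the choice of training accuracy as the tuning criterion are all assumptions made for the example.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention for zero vectors (assumption)
    return dot / (norm_u * norm_v)

def tune_threshold(scores, labels):
    """Return the threshold t maximizing training accuracy of 'score >= t'."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(scores)):
        acc = sum((s >= t) == y for s, y in zip(scores, labels)) / len(labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t

# Toy 3-dimensional vectors, invented purely for illustration.
vectors = {
    "dolphin": [0.9, 0.1, 0.3],
    "shark": [0.1, 0.9, 0.2],
    "mammal": [0.8, 0.2, 0.4],
}
train = [("dolphin", "mammal", True), ("shark", "mammal", False)]

scores = [cosine(vectors[x], vectors[y]) for x, y, _ in train]
labels = [label for _, _, label in train]
threshold = tune_threshold(scores, labels)

def predict(x, y):
    """Classify a pair using the single similarity feature."""
    return cosine(vectors[x], vectors[y]) >= threshold
```

The classifier here has exactly one parameter, which is what makes the method effectively unsupervised compared with the multi-feature classifiers discussed next.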

Supervised Distributional Methods. Represent x and y as vectors $\vec{x}$ and $\vec{y}$: word embeddings or traditional (sparse) distributional vectors. Represent the pair (x, y) as a combination of $\vec{x}$ and $\vec{y}$. Concat: $\vec{x} \oplus \vec{y}$ (Baroni et al., 2012). Diff: $\vec{y} - \vec{x}$ (Roller et al., 2014; Weeds et al., 2014; Fu et al., 2014). Train a classifier over the representation of (x, y): a multi-feature representation.

Main Questions. Are current supervised DMs better than unsupervised DMs? Are current supervised DMs learning a relation between x and y? (No.) If not, what are they learning?

Experiment Setup. 9 word representations: 3 representation methods (PPMI, SVD over PPMI, word2vec SGNS) × 3 context types: bag-of-words (5 words to each side), positional (2 words to each side + position), dependency (all syntactically-connected words + dependency). Trained on English Wikipedia. 5 lexical-inference datasets: Kotlerman et al., 2010; Baroni and Lenci, 2011 (BLESS); Baroni et al., 2012; Turney and Mohammad, 2014; Levy et al., 2014.

Supervised Methods. Concat: $\vec{x} \oplus \vec{y}$ (Baroni et al., 2012). Diff: $\vec{y} - \vec{x}$ (Roller et al., 2014; Weeds et al., 2014; Fu et al., 2014). Only x: $\vec{x}$. Only y: $\vec{y}$.
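The four pair representations listed on this slide are simple enough to write down directly. The sketch below uses plain Python lists as stand-ins for word vectors; the function names are hypothetical and chosen only to mirror the slide's labels.

```python
def concat(x_vec, y_vec):
    """Concat: x followed by y, doubling the dimensionality."""
    return list(x_vec) + list(y_vec)

def diff(x_vec, y_vec):
    """Diff: element-wise y - x, same dimensionality as the inputs."""
    return [b - a for a, b in zip(x_vec, y_vec)]

def only_x(x_vec, y_vec):
    """Only x: ignore y entirely."""
    return list(x_vec)

def only_y(x_vec, y_vec):
    """Only y: ignore x entirely."""
    return list(y_vec)

# Toy vectors, invented for illustration.
x_vec, y_vec = [1.0, 2.0], [3.0, 5.0]
features = {f.__name__: f(x_vec, y_vec) for f in (concat, diff, only_x, only_y)}
```

Any of these feature vectors can then be fed to an off-the-shelf classifier. Note that `only_x` and `only_y` carry no information about the other word, which is why they serve as diagnostic baselines rather than serious methods.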

Are current supervised DMs better than unsupervised DMs?

Previously Reported Success. Prior art: supervised DMs are better than unsupervised DMs, with accuracy >95% (in some datasets). Our findings: the high accuracy of supervised DMs stems from lexical memorization.

Lexical Memorization. Learning that a specific word is a strong indicator of the label. Example: many positive training examples look like (*, animal); the classifier memorizes that y = animal is a good indicator; test examples like (*, animal) are then correctly classified for free. In other words: overfitting. This raises questions about dataset construction.
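To make the memorization effect concrete, here is a deliberately naive classifier that looks only at y and remembers whether that word was mostly positive in training. It is an illustrative stand-in, not one of the classifiers evaluated in the talk; the training pairs and the 0.5 majority rule are assumptions.

```python
from collections import Counter

def fit_y_memorizer(train):
    """Remember, for each y seen in training, whether most of its pairs were positive."""
    positives, totals = Counter(), Counter()
    for _, y, label in train:
        totals[y] += 1
        positives[y] += int(label)
    return {y: positives[y] / totals[y] >= 0.5 for y in totals}

def predict(memory, x, y):
    """Ignore x; answer from memory, defaulting to False for unseen y."""
    return memory.get(y, False)

# Toy training set, invented for illustration.
train = [
    ("dog", "animal", True),
    ("cat", "animal", True),
    ("horse", "animal", True),
    ("table", "animal", False),
    ("dog", "poodle", False),
]
memory = fit_y_memorizer(train)

# A new (*, animal) pair is classified positive "for free" ...
zebra_is_animal = predict(memory, "zebra", "animal")
# ... and so is a pair that should be negative.
chair_is_animal = predict(memory, "chair", "animal")
```

If the test set contains many (*, animal) positives, this classifier scores well without knowing anything about x, which is the overfitting pattern the slide describes.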

Lexical Memorization. Avoid lexical memorization with lexical train/test splits: if animal appears in train, it cannot appear in test. Lexical splits are applied to all our experiments.
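One way to build such a split is to partition the vocabulary first and then keep only pairs whose two words fall on the same side. The sketch below follows that idea; the random word assignment, the `test_fraction` parameter, and the decision to drop mixed pairs are assumptions of this example, and the slide does not specify the exact procedure.

```python
import random

def lexical_split(pairs, test_fraction=0.3, seed=0):
    """Split (x, y, label) pairs so that train and test share no words."""
    rng = random.Random(seed)
    vocab = sorted({w for x, y, _ in pairs for w in (x, y)})
    rng.shuffle(vocab)
    test_words = set(vocab[: int(len(vocab) * test_fraction)])

    train, test = [], []
    for x, y, label in pairs:
        if x in test_words and y in test_words:
            test.append((x, y, label))
        elif x not in test_words and y not in test_words:
            train.append((x, y, label))
        # Pairs with one word on each side are dropped.
    return train, test

# Toy data, invented for illustration.
pairs = [
    ("dolphin", "mammal", True),
    ("shark", "mammal", False),
    ("banana", "fruit", True),
    ("carrot", "fruit", False),
    ("cat", "animal", True),
    ("table", "animal", False),
]
train, test = lexical_split(pairs)
```

The cost of this design is lost data: every mixed pair is discarded, so the usable dataset can shrink considerably.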

Experiments without Lexical Memorization. 4 supervised methods vs. 1 unsupervised method (cosine similarity). [Bar chart: performance (F1) of the best supervised method vs. the unsupervised method on Kotlerman2010, Bless2011, Baroni2012, Turney2014, Levy2014.] Cosine similarity outperforms all supervised DMs in 2/5 datasets. Conclusion: supervised DMs are not necessarily better.

Are current supervised DMs learning a relation between x and y?

Learning a Relation between x and y. Requires information about the compatibility of x and y. What happens when we use Only y (ignore x)? Intuitively, it should fail: x could be anything!

Learning a Relation between x and y. In practice: Only y performs almost as well as Concat & Diff, and is the best method in 1/5 datasets. [Bar chart: performance (F1) of the best supervised method vs. Only y on Kotlerman2010, Bless2011, Baroni2012, Turney2014, Levy2014.] How can the classifier know that x → y if it does not observe x?

If these methods are not learning a relation between x and y, what exactly are they learning?

Prototypical Hypernyms. Hypothesis: the methods learn whether y is a prototypical hypernym. Prototypical hypernyms: animal, mammal, fruit, drug, country. Categories, supersenses, etc.

Prototypical Hypernyms. Hypothesis: the methods learn whether y is a prototypical hypernym. Experiment: given 2 positive examples (x1, y1) and (x2, y2), create artificial negative examples (x1, y2) and (x2, y1). These artificial examples contain prototypical hypernyms as y. How easily is the classifier fooled by these artificial examples?
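The construction of artificial negatives can be sketched as a swap over every pair of positive examples. The example pairs below are invented for illustration, and skipping any swapped pair that is itself a known positive is an assumption of this sketch rather than something the slide states.

```python
def make_artificial_negatives(positives):
    """For positives (x1, y1), (x2, y2), emit the swapped pairs (x1, y2) and (x2, y1)."""
    known_positive = set(positives)
    seen, artificial = set(), []
    for i, (x1, y1) in enumerate(positives):
        for x2, y2 in positives[i + 1:]:
            for pair in ((x1, y2), (x2, y1)):
                # Assumption: never label a known positive as negative.
                if pair in known_positive or pair in seen:
                    continue
                seen.add(pair)
                artificial.append(pair)
    return artificial

# Toy positives, invented for illustration.
positives = [("dolphin", "mammal"), ("banana", "fruit")]
artificial = make_artificial_negatives(positives)
```

Every artificial pair keeps a y that was a hypernym of something, so a classifier that only checks whether y looks like a category word has no way to reject it.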

Prototypical Hypernyms. Recall: portion of real positive examples classified true. Match Error: portion of artificial examples classified true. Bottom-right: prefer real over artificial (good classifiers). Top-left: prefer artificial over real (worse than random). Diagonal: cannot distinguish real from artificial (predicted by hypothesis).

Prototypical Hypernyms. Recall: portion of real positive examples classified true. Match Error: portion of artificial examples classified true. Regression slope: 0.935. Result: classifiers cannot distinguish between artificial and real examples. Conclusion: classifiers return true when y is a prototypical hypernym.
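Both quantities are the same computation applied to different example sets. The sketch below evaluates a hypothetical classifier that only checks whether y is in a fixed set of category words; the classifier, the word set, and the example pairs are all assumptions made for illustration.

```python
def positive_rate(classify, pairs):
    """Portion of the given (x, y) pairs that the classifier labels true."""
    return sum(1 for x, y in pairs if classify(x, y)) / len(pairs)

def recall(classify, real_positives):
    """Recall: portion of real positive examples classified true."""
    return positive_rate(classify, real_positives)

def match_error(classify, artificial_negatives):
    """Match error: portion of artificial examples classified true."""
    return positive_rate(classify, artificial_negatives)

# Hypothetical classifier that ignores x and only asks
# whether y looks like a prototypical hypernym.
PROTOTYPICAL = {"mammal", "fruit", "animal", "country"}

def only_y_classifier(x, y):
    return y in PROTOTYPICAL

real = [("dolphin", "mammal"), ("banana", "fruit")]
artificial = [("dolphin", "fruit"), ("banana", "mammal")]

r = recall(only_y_classifier, real)
m = match_error(only_y_classifier, artificial)
```

For this classifier recall and match error are equal, which places it on the diagonal: exactly the behavior the hypothesis predicts and that a regression slope near 1 indicates.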

Prototypical Hypernyms: Analysis. What are the classifiers' most indicative features? Indicators that y is a category word, and partial Hearst (1992) patterns.

Conclusions. Are current supervised DMs better than unsupervised DMs? Not necessarily: previously reported success stems from lexical memorization. Are current supervised DMs learning a relation between x and y? No, they are not: Only y yields similar results to Concat and Diff. If not, what are they learning? Whether y is a prototypical hypernym (mammal, fruit, country, ...).

What if the necessary relational information does not exist in contextual features?

The Limitations of Contextual Features Example: ? ??? ?? ? Contextual features cannot capture ? ??? ?? ? jointly What can they capture? ? ??? ??? ? (separately)