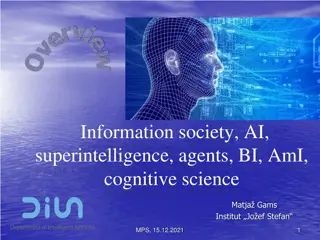

Technical Problems in Long-Term AI Safety by Andrew Critch

Explore the technical and non-technical challenges in ensuring long-term AI safety as outlined by Andrew Critch. Delve into the plausibility of human-level AI and superintelligence, and consider the potential risks associated with the emergence of superintelligent AI. Stay informed and engaged in the conversation on the safety implications of advanced artificial intelligence.

Presentation Transcript

Technical (and Non-Technical) Problems in Long-Term AI Safety. Andrew Critch, critch@intelligence.org. Machine Intelligence Research Institute, http://intelligence.org/

Motivation, Part 1: Is human-level AI plausible? There are powerful short-term economic incentives to create human-level AI (HLAI) if possible. Natural selection was able to produce human-level intelligence. Thus, HLAI seems plausible in the long term. Recent surveys of experts give median arrival dates between 2040 and 2050.

[Figure from http://aiimpacts.org/]

[Figure from http://aiimpacts.org/: Cumulative probability of AI being predicted over time, by group]

Motivation, Part 2: Is superintelligence plausible? In many domains, once computers matched human performance, they far surpassed it soon afterward. Thus, not long after HLAI, it's not implausible that AI will far exceed human performance in most domains, resulting in what Bostrom calls "superintelligence".

(optional pause for discussion / comparisons)

Motivation, Part 3: Is superintelligence safe? Thought experiment: Imagine it's 2060, and the leading tech giant announces it will roll out the world's first superintelligent AI sometime in the next year. Is there anything you're worried about? Are there any questions you wish there had been decades of research on, dating back to 2015?

Some big questions: Is it feasible to build a useful superintelligence that, e.g., shares our values and will not take them to extremes? That will not compete with us for resources? That will not resist us modifying its goals or shutting it down? That can understand itself without deriving contradictions, as in Gödel's theorems?

Goal: Develop these big questions past the stage of philosophical conversation and into the domain of mathematics and computer science. [Diagram labels: Big Questions, Philosophy, Mathematics/CS, Technical Understanding]

Motivation, Part 4: Examples of technical understanding. Vickrey second-price auctions (1961): well-understood optimality results (truthful bidding is optimal); real-world applications (network routing); decades of peer review.
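The claim that truthful bidding is optimal can be checked numerically on a small grid. The following is a minimal sketch, assuming a single-item sealed-bid auction where the highest bidder wins and pays the highest competing bid; the function names and the tie-breaking rule (ties go against the bidder) are illustrative assumptions, not part of the talk.

```python
from typing import Iterable, Sequence


def second_price_utility(my_bid: float, my_value: float,
                         other_bids: Sequence[float]) -> float:
    """Payoff to one bidder in a sealed-bid second-price auction.

    Assumption: ties are resolved against this bidder, so winning
    requires a strictly higher bid than every rival.
    """
    highest_other = max(other_bids)
    if my_bid > highest_other:
        # The winner pays the highest competing bid, not their own bid.
        return my_value - highest_other
    return 0.0


def truthful_is_weakly_dominant(my_value: float,
                                candidate_bids: Iterable[float],
                                rival_profiles: Iterable[Sequence[float]]) -> bool:
    """Check that bidding my_value is never beaten by any candidate bid."""
    candidate_bids = list(candidate_bids)
    for other_bids in rival_profiles:
        truthful = second_price_utility(my_value, my_value, other_bids)
        for bid in candidate_bids:
            if second_price_utility(bid, my_value, other_bids) > truthful + 1e-12:
                return False
    return True


if __name__ == "__main__":
    bids = [float(b) for b in range(11)]
    rivals = [[float(h)] for h in range(11)]
    print(truthful_is_weakly_dominant(6.0, bids, rivals))
```

A grid check like this is only evidence, not a proof; the general result follows because a bidder's own bid affects whether they win but never the price they pay.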

Nash equilibria (1951)

Classical game theory (1953): [Figure: an extensive-form game]

Key Problem: Counterfactuals for Self-Reflective Agents. What does it mean for a program A to improve some feature of a larger program E in which A is running, and which A can understand?

    def Environment():
        def Agent(senseData):
        def Utility(globalVariables):
        do Agent(senseData1)
        do Agent(senseData2)
    end

(optional pause for discussion of IndignationBot)

Example: maximizing π. "What would happen if I changed the first digit of π to 9?" This seems absurd because π is logically determined. However, the result of running a computer program (e.g. the evolution of the Schrödinger equation) is likewise logically determined by its source code and inputs.

So when an agent reasons to do X "because X is better than Y", considering what would happen if it did Y instead means considering a mathematical impossibility. (If the agent has access to its own source code, it can derive a contradiction from the hypothesis "I do Y", from which anything follows.) This is clearly not how we want our AI to reason. How do we?
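The step "from which anything follows" is the principle of explosion. As a minimal sketch in Lean 4, read `P` as the hypothesis "I do Y" and `hnp` as the refutation of it that the agent derives from its own source code; this reading and the theorem name are illustrative assumptions, not notation from the talk.

```lean
-- Principle of explosion: once both P and ¬P are available,
-- any proposition Q whatsoever can be proved.
theorem anything_follows (P Q : Prop) (hp : P) (hnp : ¬P) : Q :=
  absurd hp hnp
```

Under that reading, `Q` could be "doing Y yields maximal utility" or its opposite, so a naive proof-based evaluation of the counterfactual tells the agent nothing useful.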

Current formalisms are "Cartesian" in that they separate an agent's source code and cognitive machinery from its environment. This is a type error, and in combination with other subtleties, it has some serious consequences.

Examples (page 1): "Robust Cooperation in the Prisoner's Dilemma" (LaVictoire et al., 2014) demonstrates non-classical cooperative behavior in agents with open source code; "Memory Issues of Intelligent Agents" (Orseau and Ring, AGI 2012) notes that Cartesian agents are oblivious to damage to their cognitive machinery.

Examples (page 2): "Space-Time Embedded Intelligence" (Orseau and Ring, AGI 2012) provides a more naturalized framework for agents inside environments; "Problems of self-reference in self-improving space-time embedded intelligence" (Fallenstein and Soares, AGI 2014) identifies problems persisting in the Orseau-Ring framework, including procrastination and issues with self-trust arising from Löb's theorem.

Examples (page 3): "Vingean Reflection: Reliable Reasoning for Self-Improving Agents" (Fallenstein and Soares, 2015) provides some approaches to resolving some of these issues. There is much more; see intelligence.org/research for additional reading.
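To see how open source code can change the one-shot Prisoner's Dilemma, consider a toy bot that cooperates only with exact copies of itself. This is a deliberately simplified sketch and not the construction from the cited papers, which rely on provability logic and Löb's theorem; the `Program` type, the source strings, and the bot names are all hypothetical.

```python
from typing import Callable, NamedTuple, Tuple


class Program(NamedTuple):
    """An agent whose source is visible to its opponent.

    Assumption: `source` is a stand-in string for the published code;
    `decide` takes (self, opponent) and returns "C" or "D".
    """
    source: str
    decide: Callable[["Program", "Program"], str]


def clique_decide(me: Program, opponent: Program) -> str:
    # Cooperate only when the opponent's source is identical to mine.
    return "C" if opponent.source == me.source else "D"


def defect_decide(me: Program, opponent: Program) -> str:
    # Always defect, whatever the opponent's source says.
    return "D"


CLIQUE_BOT = Program("clique_bot_v1", clique_decide)
DEFECT_BOT = Program("defect_bot_v1", defect_decide)


def play(a: Program, b: Program) -> Tuple[str, str]:
    """One-shot game: each program reads the other's source, then moves."""
    return a.decide(a, b), b.decide(b, a)


if __name__ == "__main__":
    print(play(CLIQUE_BOT, CLIQUE_BOT))
    print(play(CLIQUE_BOT, DEFECT_BOT))
```

Two copies of the source-matching bot cooperate with each other while it cannot be exploited by a defector, which is already outside classical analysis where mutual defection is the only equilibrium. Exact source matching is brittle, though: a functionally identical bot with different text is treated as a stranger, which is part of what motivates the more robust proof-based approaches.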

Summary: There are serious problems with superintelligence that need formalizing in the way that fields like probability theory, statistics, and game theory have been formalized. Superintelligence poses a plausible existential risk to human civilization. Some of these problems can be explored now via examples in theoretical computer science and logic. So, let's do it!

Thanks to: Owen Cotton-Barratt for the invitation to speak; Patrick LaVictoire for reviewing my slides; and Laurent Orseau, Mark Ring, Mihaly Barasz, Paul Christiano, Benja Fallenstein, Marcello Herreshoff, Patrick LaVictoire, and Eliezer Yudkowsky for doing all the research I cited.