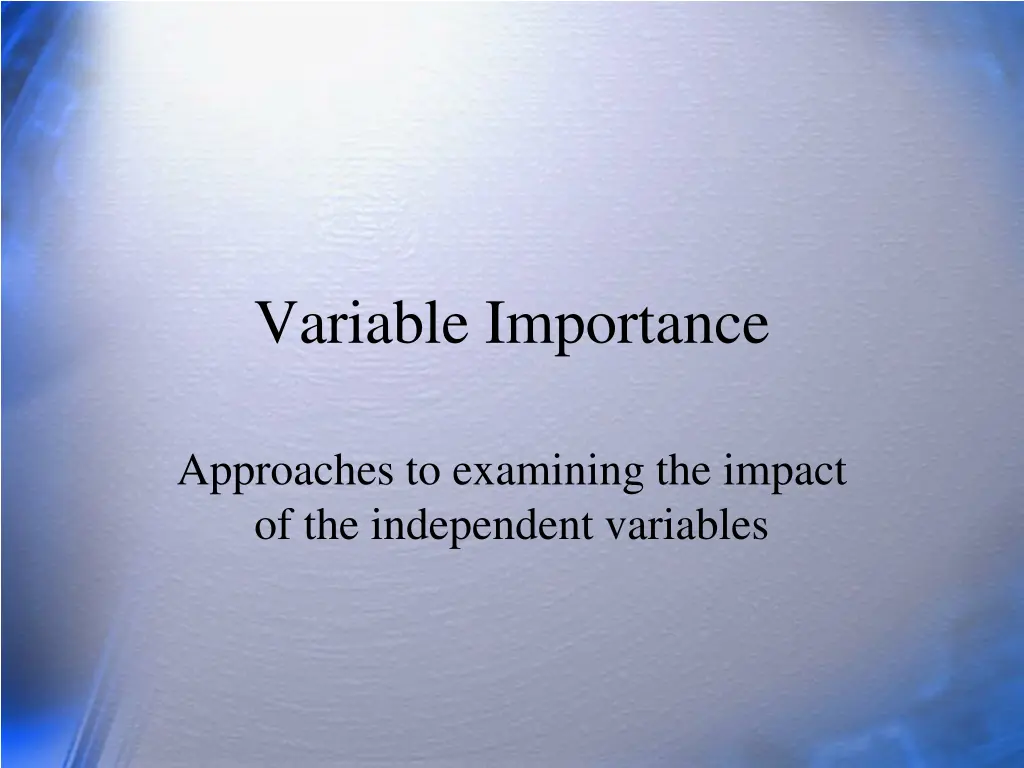

Understanding Approaches to Variable Importance in Regression Analysis

Explore the impact of independent variables in regression, the challenges with correlated variables, and the diverse approaches to measuring variable importance with practical examples.

Presentation Transcript

Variable Importance: Approaches to examining the impact of the independent variables

Questions

- What happens to b weights if we add new variables to the regression equation that are highly correlated with ones already in the equation?
- What are the four main approaches to describing the importance of an independent variable in regression?
- Why might we prefer one approach to another?

The Problem of Variable Importance

With one IV, the correlation provides a simple index of the importance of that variable. Both r and r² are good indices of importance with one IV. The value r is the slope in z scores, which helps with applied interpretations.

With multiple IVs, total R² equals the sum of the individual r² values when the IVs are mutually uncorrelated, that is, when they correlate to some degree with Y but not with each other.

When multiple IVs are correlated, there are many different statistical indices of the importance of the IVs, and they do not agree with one another. There is no simple answer to questions about the importance of correlated IVs. Rather, there are many reasonable answers, depending on what you mean by importance.
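As a rough check on the additivity claim, the following sketch computes R² directly from correlations. It assumes the two-predictor example used in this presentation (r = .5 and .6 with Y, and r12 of either 0 or .4); the function name `r_squared` is only illustrative.

```python
import numpy as np

def r_squared(r_xy, R_xx):
    """Squared multiple correlation from the predictor-criterion
    correlations r_xy and the predictor intercorrelation matrix R_xx."""
    r_xy = np.asarray(r_xy, dtype=float)
    R_xx = np.asarray(R_xx, dtype=float)
    beta = np.linalg.solve(R_xx, r_xy)  # standardized slopes
    return float(r_xy @ beta)           # R^2 = sum of beta_j * r_j

r_xy = [0.5, 0.6]
uncorrelated = [[1.0, 0.0], [0.0, 1.0]]
correlated = [[1.0, 0.4], [0.4, 1.0]]

r2_uncorrelated = r_squared(r_xy, uncorrelated)
r2_correlated = r_squared(r_xy, correlated)

print(round(r2_uncorrelated, 2))  # 0.61, the sum .25 + .36
print(round(r2_correlated, 2))    # 0.44, less than .25 + .36
```

With r12 = 0 the squared correlations simply add; with r12 = .4 part of the predicted variance is shared, so R² falls below the sum.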

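Venn Diagrams (easy, but not always right)

Fig 1. IVs uncorrelated. The overlap of each X circle with Y is the r² for that X with Y. R² = .5² + .6² = .61.

| R | Y | X1 | X2 |
|---|---|---|---|
| Y | 1 | | |
| X1 | .50 | 1 | |
| X2 | .60 | .00 | 1 |

Fig 2. IVs correlated. The diagram has regions labeled UY:X1 (Y unique to X1), UY:X2 (Y unique to X2), Shared Y, and Shared X. R² = .44, so R² ≠ .25 + .36 = .61. What to do with shared Y?

| R | Y | X1 | X2 |
|---|---|---|---|
| Y | 1 | | |
| X1 | .50 | 1 | |
| X2 | .60 | .40 | 1 |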

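More Venn Diagrams

[Figure: two Venn diagrams of Y with X1, X2, and X3, labeled "Desired state" and "Typical state".]

In a regression problem, we want to predict Y from the X variables as well as possible (maximize R²). To do so, we want X variables that are correlated with Y but not with each other. Such variables are hard to find; for example, cognitive ability tests typically correlate with one another.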

Measures of Importance

(a) the zero-order correlation and its square: r and r²
(b) the standardized slope (often called beta) and the associated variance in Y that is unique to the independent variable (the last-in increment): β and ΔR²
(c) the standardized slope multiplied by the correlation: β × r
(d) the average increment (the statistic used for general dominance; requires multiple models): M(ΔR²)

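Venn Diagrams

Fig 1. IVs uncorrelated. X1 overlaps Y by .25 and X2 overlaps Y by .36. R² = .5² + .6² = .61.

| R | Y | X1 | X2 |
|---|---|---|---|
| Y | 1 | | |
| X1 | .50 | 1 | |
| X2 | .60 | .00 | 1 |

Fig 2. IVs correlated. Unique to X1: .08; shared by X1 and X2: .17; unique to X2: .19. R² = .44 = .08 + .17 + .19.

| R | Y | X1 | X2 |
|---|---|---|---|
| Y | 1 | | |
| X1 | .50 | 1 | |
| X2 | .60 | .40 | 1 |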

Measure 1: r and r²

[Figure: the two Venn diagrams and correlation matrices, one with r12 = .00 and one with r12 = .40.]

For both models, r and r² will be the same: r(Y, X1) = .5 and r(Y, X2) = .6, so r² will be .25 and .36. This is fine if we just want to pick the best single IV, but it ignores any correlation between the IVs. Importance here ignores the other variables.

Measure 2: Beta and Increment

| Statistic | X1 (r12 = 0) | X2 (r12 = 0) | X1 (r12 = .4) | X2 (r12 = .4) |
|---|---|---|---|---|
| r | .5 | .6 | .5 | .6 |
| β | .5 | .6 | .31 | .48 |
| ΔR² | .25 | .36 | .08 | .19 |

Importance is the unique contribution of each X above and beyond all other X variables. Beta and the incremental R² tell the same story, except that one is a slope and the other a variance.
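The beta weights and last-in increments for the correlated case can be reproduced from the correlations alone. This is a minimal sketch under the assumption that only the correlation matrix is available; `betas_and_last_in` is a hypothetical helper name, not part of any library.

```python
import numpy as np

def betas_and_last_in(r_xy, R_xx):
    """Return standardized slopes and last-in R^2 increments.

    The last-in increment for predictor j is the full-model R^2 minus
    the R^2 of the model that omits predictor j.
    """
    r_xy = np.asarray(r_xy, dtype=float)
    R_xx = np.asarray(R_xx, dtype=float)
    beta = np.linalg.solve(R_xx, r_xy)
    r2_full = float(r_xy @ beta)

    k = len(r_xy)
    increments = np.zeros(k)
    for j in range(k):
        keep = [i for i in range(k) if i != j]
        if keep:
            sub_r = r_xy[keep]
            sub_R = R_xx[np.ix_(keep, keep)]
            r2_reduced = float(sub_r @ np.linalg.solve(sub_R, sub_r))
        else:
            r2_reduced = 0.0  # no other predictors in the model
        increments[j] = r2_full - r2_reduced
    return beta, increments

beta, increments = betas_and_last_in([0.5, 0.6], [[1.0, 0.4], [0.4, 1.0]])
print(np.round(beta, 2))        # about .31 and .48
print(np.round(increments, 2))  # about .08 and .19
```

Both statistics rank X2 above X1 here, which is the sense in which they tell the same story.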

Measure 3: Beta × r

| Statistic | X1 (r12 = 0) | X2 (r12 = 0) | X1 (r12 = .4) | X2 (r12 = .4) |
|---|---|---|---|---|
| r | .5 | .6 | .5 | .6 |
| β | .5 | .6 | .31 | .48 |
| β × r | .25 | .36 | .15 | .29 |

These products add to R² for the model. When IVs are correlated, the product can be zero or negative.
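A short sketch of the beta-times-r products follows. The first case uses the correlations from this presentation; the second case, with r = .1 and .6 and r12 = .5, is a made-up illustration (not from the slides) of how a product can turn negative.

```python
import numpy as np

def beta_times_r(r_xy, R_xx):
    """Product of each standardized slope and its zero-order correlation."""
    r_xy = np.asarray(r_xy, dtype=float)
    R_xx = np.asarray(R_xx, dtype=float)
    beta = np.linalg.solve(R_xx, r_xy)
    return beta * r_xy

# Example from the presentation: r = .5, .6 and r12 = .4
products = beta_times_r([0.5, 0.6], [[1.0, 0.4], [0.4, 1.0]])
print(np.round(products, 2))     # about .15 and .29
print(round(products.sum(), 2))  # 0.44, the model R^2

# Hypothetical correlations chosen so that X1 gets a negative beta
negative_case = beta_times_r([0.1, 0.6], [[1.0, 0.5], [0.5, 1.0]])
print(negative_case[0] < 0)      # True
```

A negative share of R² is hard to interpret as importance, which is one reason this index is not always preferred.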

Measure 4: Average ΔR²

| Order of entry | X1 (r12 = 0) | X2 (r12 = 0) | X1 (r12 = .4) | X2 (r12 = .4) |
|---|---|---|---|---|
| 1st | .25 | .36 | .25 | .36 |
| 2nd | .25 | .36 | .08 | .19 |
| Average | .25 | .36 | .17 | .28 |

These averages add to R² for the model. When IVs are correlated, the average increment can be zero only if the variable never adds an increment in any model; it cannot be negative.
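One way to sketch the average increment is to enter the predictors in every possible order and average each predictor's R² gain. This is an illustrative implementation that assumes only a correlation matrix is available; with two predictors it reduces to the mean of the first-in and last-in increments. The function names are hypothetical, and enumerating all orders is practical only for a small number of predictors.

```python
from itertools import permutations

import numpy as np

def subset_r2(r_xy, R_xx, subset):
    """R^2 for the model containing only the predictors in `subset`."""
    if not subset:
        return 0.0
    idx = list(subset)
    sub_r = r_xy[idx]
    sub_R = R_xx[np.ix_(idx, idx)]
    return float(sub_r @ np.linalg.solve(sub_R, sub_r))

def average_increment(r_xy, R_xx):
    """Average R^2 increment of each predictor over all orders of entry."""
    r_xy = np.asarray(r_xy, dtype=float)
    R_xx = np.asarray(R_xx, dtype=float)
    k = len(r_xy)
    totals = np.zeros(k)
    orders = list(permutations(range(k)))
    for order in orders:
        entered = []
        r2_so_far = 0.0
        for j in order:
            entered.append(j)
            r2_now = subset_r2(r_xy, R_xx, entered)
            totals[j] += r2_now - r2_so_far  # gain from adding predictor j
            r2_so_far = r2_now
    return totals / len(orders)

avg = average_increment([0.5, 0.6], [[1.0, 0.4], [0.4, 1.0]])
print(np.round(avg, 2))     # about .17 and .28
print(round(avg.sum(), 2))  # 0.44, the model R^2
```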

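Comparison of Stats

[Figure: Venn diagram for the correlated case, with .08 unique to X1, .17 shared, and .19 unique to X2.]

| Measure | X1 | X2 | Ratio (X2/X1) |
|---|---|---|---|
| r (correlation) | .5 | .6 | 1.2 |
| r-squared | .25 | .36 | 1.44 |
| Beta | .31 | .48 | 1.55 |
| Last-in increment | .08 | .19 | 2.38 |
| Average increment | .17 | .28 | 1.65 |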

Another Look at Importance

In regression problems, the most commonly used indices of importance are the correlation, r, and the increment to R² when the variable of interest is entered last. The second is sometimes called the last-in R² change. The last-in increment corresponds to the Type III sums of squares and is closely related to the b weight.

The correlation tells about the importance of the variable ignoring all other predictors. The last-in increment tells about the importance of the variable as a unique contributor to the prediction of Y, above and beyond all other predictors in the model.

You can assign the shared variance in Y to a specific X by adding variables to the equation in a chosen order, but then the importance is somewhat arbitrary and under your influence.

The average increment is currently most in favor. But you should consider carefully what it is you really want to know. What is your research question? The best predictor alone? The best subset? A requirement to predict above and beyond all other predictors?

Variable Importance Summary

Importance is not well defined statistically when IVs are correlated. Variance accounted for says nothing about the theoretical connection among the variables. What is causal? Path analysis is one alternative to considering the importance of variables.

Review

- What is the problem with correlated independent variables if we want to maximize variance accounted for in the criterion?
- Why do we report beta weights (standardized b weights)?
- Describe R² in two different ways, that is, using two distinct formulas. Explain the formulas.

Review

- Find the Job Stressors data on the website (Spector, Dwyer & Jex, 1988).
- Compute the correlation matrix for all variables.
- Compute the regression with DV = job satisfaction and IVs = conflict and frustration.
- Find r, beta, and r × beta.
- Describe the importance of each IV.