Understanding Diagnostic and Standardised Assessment in Education

Learn about diagnostic assessment and standardised testing in junior secondary schooling, as discussed by Prof. Gavin T. L. Brown. Explore the development of assessment tools and the multiple functions of assessment, including improvement, accountability, and evaluation. Understand the different ways these functions can be fulfilled, from formal to informal methods. Gain insights into defining tests and the significance of standardised tests for comparison purposes.


Presentation Transcript

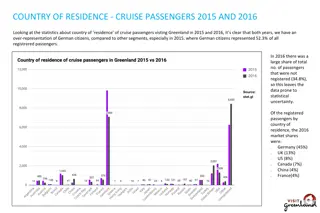

Diagnostic assessment in NZ junior secondary schooling. Prof Gavin T L Brown, PhD, Faculty of Education & Social Work. Lecture to PostGrad Teach First Program, December 2016.

Standardised tests that I have helped develop:
Brown, G. T. L. (2001-2003). Teachers' conceptions of assessment (TCoA) inventory (Versions 1-3). Unpublished test. Auckland, NZ: University of Auckland.
Brown, G. T. L. (2003-2008). Students' conceptions of assessment (SCoA) inventory (Versions 1-6). Unpublished test. Auckland, NZ: University of Auckland.
Brown, G. T. L., Hui, S. K. F., Yu, W. M., & Wang, P. (2010). Teachers' Conceptions of Assessment in Chinese Contexts (C-TCoA). Unpublished test. Hong Kong: Hong Kong Institute of Education.
Croft, C., Dunn, K., & Brown, G. T. L. (2001). Essential Skills Assessment: Information Skills. Manual. Wellington: NZCER.
Harris, L. R., & Brown, G. T. (2008). Teachers' Conceptions of Feedback (TCoF) inventory. Unpublished test. Auckland, NZ: University of Auckland, Measuring Teachers' Assessment Practices (MTAP) Project.
Hattie, J. A. C., Brown, G. T. L., Keegan, P. J., MacKay, A. J., Irving, S. E., Cutforth, S., Campbell, A., Patel, P., Sussex, K., Sutherland, T., McCall, S., Mooyman, D., & Yu, J. (2004, December). Assessment Tools for Teaching and Learning (asTTle) Version 4, 2005: Manual. Wellington, NZ: University of Auckland/Ministry of Education/Learning Media.
Yuen, S. T., & Brown, G. T. L. (2011). HK Primary Students' Conceptions of Assessment Inventory. Unpublished test. Hong Kong: Hong Kong Institute of Education.

Assessment: Multiple Functions
Improvement: better teaching; better learning.
Accountability: evaluate schools & teachers; evaluate students.

Assessment functions can be fulfilled in multiple ways, along a continuum from formal, teacher-centric to informal, student-centric assessment: standardised tests & exams; quizzes, homework, etc.; peer & self-assessment; classroom interactions.

Defining a test: a sample of tasks, questions, or items drawn from a domain of interest, intended to elicit information about a learner's skill, knowledge, and understanding of that domain. A test always has error, and it requires careful preparation, administration, and analysis to lead to the best interpretations.

Standardised tests: tests that are designed to be administered, scored, and interpreted in pre-specified, common ways. They are usually published by test development companies and contain information about the performance of a NORM group as the basis of interpretation: how did your students do compared to the average of the students already tested?

But what about difficulty? Don't just group content; also think about easy, medium, and hard items within an appropriate range for the teaching you are doing.

Cognitive demand matters too.
Surface (lower-order thinking skills): recall, remember, revise, rehearse; matching 1-to-1; making a list. Necessary but not sufficient.
Deep (higher-order thinking skills): relate, extend, abstract; connecting within given material; connecting to related principles, theories, or models beyond the given material. Highly desirable, but requires surface learning first.
See the Structure of the Observed Learning Outcome (SOLO) taxonomy (John Biggs & Kevin Collis).

A test template/blueprint: designing coverage systematically. What are the important content areas in the curriculum for reading comprehension, mathematics, or another subject? Pick no more than 4 areas to test in a 30-40 minute test. How many questions of each type do we want for each area? What question types shall we use to collect the data? This is the template for a formal test: rows for content areas 1-4, and columns for selected-response and constructed-response items, each split into surface and deep.
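The blueprint is simply a grid of item counts, so it can be checked before any items are written. The sketch below is a minimal illustration under stated assumptions: the area names, the number of items per cell, and the minutes needed per selected or constructed item are all hypothetical placeholders, not values from the lecture. It totals the items, shows the surface/deep balance, and estimates whether the test fits the 30-40 minute window.

```python
from itertools import product

# Hypothetical blueprint settings (NOT from the lecture): adjust to your own test.
AREAS = ["Area 1", "Area 2", "Area 3", "Area 4"]  # placeholders for content areas 1-4
FORMATS = ("selected", "constructed")
DEPTHS = ("surface", "deep")

# Items per content area in each (format, depth) cell (hypothetical).
ITEMS_PER_CELL = {
    ("selected", "surface"): 3,
    ("selected", "deep"): 1,
    ("constructed", "surface"): 1,
    ("constructed", "deep"): 1,
}
# Assumed minutes needed to answer one item of each format (hypothetical).
MINUTES_PER_ITEM = {"selected": 1, "constructed": 3}


def build_blueprint(areas, items_per_cell):
    """Return {(area, format, depth): item count} covering every cell of the template."""
    return {
        (area, fmt, depth): items_per_cell[(fmt, depth)]
        for area, fmt, depth in product(areas, FORMATS, DEPTHS)
    }


def summarise(blueprint):
    """Total items, items by cognitive depth, and estimated testing time in minutes."""
    by_depth = {depth: 0 for depth in DEPTHS}
    minutes = 0
    for (_, fmt, depth), n in blueprint.items():
        by_depth[depth] += n
        minutes += n * MINUTES_PER_ITEM[fmt]
    return sum(blueprint.values()), by_depth, minutes


blueprint = build_blueprint(AREAS, ITEMS_PER_CELL)
total, by_depth, minutes = summarise(blueprint)
print(total, by_depth, minutes)  # 24 {'surface': 16, 'deep': 8} 40
```

With these placeholder numbers the test has 24 items, two-thirds of them surface, and an estimated 40 minutes; changing a single cell count immediately shows its effect on balance and length.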

Score theories.
Classical Test Theory (CTT): Observed Score = True Score + error, i.e. $O = T + e$: what you get is made up of your TRUE ability, knowledge, or skill ($T$) plus random noise ($e$). The score is the sum of items correct. Important limitations: the true score may not be fixed (as we measure humans, they learn), and the true score can only be estimated from observed scores.
Item Response Theory (IRT): a probabilistic estimate of ability, located where you would be expected to get 50% of items of that difficulty correct. The score is adjusted by item difficulty, discrimination, and pseudo-chance parameters, so it is NOT equal to the sum of items answered correctly. Used in asTTle & PAT.
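The three IRT parameters named above (difficulty, discrimination, pseudo-chance) combine in the standard three-parameter logistic (3PL) item response function. The sketch below shows that function; it is a generic illustration, not the specific model or scaling used inside asTTle or PAT, and it omits the optional scaling constant (about 1.7) that some texts include.

```python
import math


def p_correct(theta, b, a=1.0, c=0.0):
    """Three-parameter logistic (3PL) item response function.

    theta: person ability; b: item difficulty; a: discrimination;
    c: pseudo-chance (the lower asymptote that allows for guessing).
    Setting c=0 gives the 2PL model; also fixing a=1 gives the Rasch/1PL model.
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


# Ability equal to the item's difficulty, no guessing: a 50% chance of success.
print(p_correct(theta=0.0, b=0.0))               # 0.5
# A guessing floor lifts that midpoint to c + (1 - c) / 2.
print(p_correct(theta=0.0, b=0.0, c=0.2))        # about 0.6
# Ability above the item's difficulty: better than an even chance.
print(p_correct(theta=1.0, b=0.0, a=1.5) > 0.5)  # True
```

The 50% point is where the "ability at which you would get 50% correct" idea comes from: an item sits on the same scale as people, at the ability level where success becomes more likely than failure.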

Test scores: the problem. Students A, B, and C all sit the same test. The test has ten items, all dichotomous (scored 0 or 1). All three students score 6 out of 10. What can we say about the ability of these three students?

CTT scores (items 1-10): A = 60% correct; B = 60% correct; C = 60% correct. Assumptions: each item is equally difficult and has equal weight towards the total score, and the total score based on the sum of items correct is a good estimate of true ability. Inference: these students are equally able. But what if the items aren't equal or of high quality?

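So in IRT, we calibrate the items according to how hard they are. What does that do to the total score? Item difficulties: item 1 = -3, item 2 = -2, item 3 = -1, item 4 = -1, item 5 = 0, item 6 = 0, item 7 = 1, item 8 = 1, item 9 = 2, item 10 = 3. Students A, B, and C each still have 60% correct.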

A more accurate portrayal of who knows more: with the same item difficulties (items 1-10: -3, -2, -1, -1, 0, 0, 1, 1, 2, 3), all three students still have 60% correct, but their asTTle v4 scores are A = 530, B = 545, and C = 593. Conclusions: C > A, B and B ≈ A, because C answered all the hardest items correctly. There is no penalty for skipping or getting easy items wrong.
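How can three students with the same 6/10 end up with different scale scores? The sketch below estimates ability by maximum likelihood under a two-parameter (2PL) model. Only the item difficulties come from the slide. The response patterns and the discrimination values are hypothetical (the per-item responses were not recoverable), and the estimates are on the logit (theta) metric, not the asTTle scale. The comparison column uses equal discriminations (the Rasch/1PL case): there the raw score is a sufficient statistic, so all three patterns get the same estimate. The pattern effect comes from items carrying different information.

```python
import math

# Item difficulties (b) for items 1-10, as calibrated on the slide.
DIFFICULTY = [-3, -2, -1, -1, 0, 0, 1, 1, 2, 3]
# Hypothetical discriminations (a): NOT from the slide; harder items made more discriminating.
DISCRIMINATION = [0.8, 0.8, 1.0, 1.0, 1.2, 1.2, 1.4, 1.4, 1.6, 1.6]


def p_2pl(theta, a, b):
    """2PL probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def mle_ability(responses, a_params, b_params, lo=-6.0, hi=6.0, tol=1e-8):
    """Maximum-likelihood ability under the 2PL model.

    Solves the score equation sum(a_i * (u_i - P_i(theta))) = 0 by bisection;
    the left-hand side falls as theta rises. Needs at least one right and one
    wrong answer, otherwise no finite estimate exists.
    """
    def score_eq(theta):
        return sum(a * (u - p_2pl(theta, a, b))
                   for u, a, b in zip(responses, a_params, b_params))

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if score_eq(mid) > 0:
            lo = mid  # observed (weighted) score above expected: ability is higher
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical response patterns, each 6/10 correct.
PATTERNS = {
    "A": [1, 1, 1, 1, 1, 1, 0, 0, 0, 0],  # the six easiest items
    "B": [1, 1, 1, 0, 1, 0, 1, 0, 1, 0],  # a mix
    "C": [0, 0, 0, 0, 1, 1, 1, 1, 1, 1],  # the six hardest items
}
RASCH = [1.0] * 10  # equal discriminations: the Rasch/1PL special case

theta_2pl = {k: mle_ability(u, DISCRIMINATION, DIFFICULTY) for k, u in PATTERNS.items()}
theta_rasch = {k: mle_ability(u, RASCH, DIFFICULTY) for k, u in PATTERNS.items()}
for name in PATTERNS:
    print(name, round(theta_2pl[name], 2), round(theta_rasch[name], 2))
```

With these made-up discriminations the ordering is A < B < C. Different parameter values could change the ordering, which is exactly why operational tests calibrate item parameters on large samples rather than assuming them.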

Assessment scores: weaknesses of raw scores. A raw score is NOT enough to interpret a measurement of achievement. Is 26/50 a good score? It depends on the age of the student, the time of year, prior teaching, prior achievement, test difficulty, and test error. Interpretation needs STANDARD SETTING.

Rank order: norm-referenced interpretation. It is well established that instructors can order people by competence, ability, or performance. Assumption: a person's position is relatively stable. Consequence: rank resists instruction, so why bother?

Norm-referenced interpretations ask: this score is better than how many others? Based on the normal distribution curve, they make comparisons between tests and between students possible, adjust scores for hard and easy tests, and show whether a weakness is serious or not. However, the mean and standard deviation are arbitrary, and fractions of an SD are difficult to understand.

Rank order score: percentile rank. A position in the test population, expressed as a number out of 100. Beware the illusion of accuracy and the confusion with percentage correct. Note that the distances between percentiles are not equal in terms of raw scores. Thus a person at the 60th percentile (about 11/20) scored equal to or better than 60% of test takers.
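Percentile rank is easy to compute from a norm sample, and doing so shows why percentiles are not equidistant in raw-score terms. This sketch follows the slide's "equal to or better than" reading (the share of the norm group at or below the score); some publishers instead count half of the tied scores, so treat that definition as an assumption. The norm group is hypothetical, bunched in the middle like most real distributions.

```python
from bisect import bisect_right


def percentile_rank(score, norm_scores):
    """Percent of the norm group scoring at or below `score`
    (the share of test takers this person equalled or beat)."""
    ordered = sorted(norm_scores)
    return 100 * bisect_right(ordered, score) / len(ordered)


# Hypothetical norm group of 20 students on a 20-item test.
NORM = [5, 7, 8, 9, 9, 10, 10, 10, 11, 11, 11, 11, 12, 12, 12, 13, 13, 14, 15, 17]

for raw in (9, 11, 13, 15):
    print(raw, percentile_rank(raw, NORM))
# 9 -> 25.0, 11 -> 60.0, 13 -> 85.0, 15 -> 95.0
```

In this made-up group, moving from 9 to 11 raw points jumps 35 percentile points, while moving from 13 to 15 gains only 10: the same raw gain looks very different depending on where in the distribution it happens.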

Rank order score: stanine. The raw score is converted to a standardised score on a 1-9 scale. Stanines are fuzzier than percentiles, which takes better account of error in measurement: stanines 1-3 mean bottom, 4-6 middle, and 7-9 top.
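Stanines (standard nines) have a mean of 5 and an SD of 2, and conventionally split the population 4-7-12-17-20-17-12-7-4 percent from stanine 1 to 9. The sketch below converts a percentile rank to a stanine using those cumulative cut points, then applies the slide's bottom/middle/top reading. Placing a percentile that falls exactly on a cut point into the lower stanine is an assumption; published tables may round differently.

```python
from bisect import bisect_left

# Upper cumulative-percentage bounds for stanines 1-8, from the conventional
# 4-7-12-17-20-17-12-7-4 percent split; anything above 96 is stanine 9.
STANINE_UPPER = [4, 11, 23, 40, 60, 77, 89, 96]


def stanine(percentile):
    """Map a percentile rank (0-100) to a stanine from 1 to 9."""
    return bisect_left(STANINE_UPPER, percentile) + 1


def stanine_band(s):
    """The slide's three-way reading of stanines."""
    if s <= 3:
        return "bottom"
    if s <= 6:
        return "middle"
    return "top"


for pr in (2, 25, 50, 60, 85, 97):
    s = stanine(pr)
    print(pr, s, stanine_band(s))
```

Percentiles 25 to 60 all land in stanines 4 or 5, which is the "fuzziness" the slide refers to: small, probably unreliable differences in rank are absorbed into one band.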

How different do scores have to be to show that a difference or change exists? Test scores contain error, so to know whether a difference is beyond chance we estimate the standard error of measurement: an indicator of the most likely score range a student would get without any learning or teaching and without any memory of the test.

Standard Error of Measurement (SEM): an estimate of the typical size of the error $e$ in $O = T + e$ (observed score = true score + error). The SEM defines a band around the observed score in which the true score is likely to fall, similar to an opinion poll value reported as +/- a certain margin. The size of the band indicates the degree of confidence that it contains the true score: +/- 1 SEM about 68% of the time; +/- 2 SEM about 95% of the time; +/- 3 SEM about 99.7% of the time. Formula: $\mathrm{SEM} = SD\sqrt{1 - r_{xx}}$, where $r_{xx}$ is the reliability of the test.
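The SEM formula and its confidence bands translate directly into code. In this sketch the test's SD (10) and reliability (0.91) are hypothetical, chosen so the SEM comes out at a round 3 points. The coverage figures assume normally distributed error, the usual classical test theory assumption.

```python
import math
from statistics import NormalDist


def standard_error_of_measurement(sd, reliability):
    """SEM = SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1.0 - reliability)


def score_band(observed, sem, k=1.0):
    """Observed score +/- k SEMs: the likely location of the true score."""
    return observed - k * sem, observed + k * sem


def coverage(k):
    """Share of a normal distribution lying within +/- k standard deviations."""
    z = NormalDist()
    return z.cdf(k) - z.cdf(-k)


sem = standard_error_of_measurement(sd=10, reliability=0.91)  # hypothetical test
print(round(sem, 2))              # 3.0
print(score_band(50, sem, k=2))   # about (44, 56)
for k in (1, 2, 3):
    print(k, round(coverage(k), 4))  # 0.6827, 0.9545, 0.9973
```

Note how quickly reliability drives the band: with the same SD, a reliability of 0.75 would double the SEM to 5 points.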

Implications of SEM: it shows the range of accuracy. A high value indicates low accuracy; a low value indicates high accuracy. High-stakes inferences and decisions should not be made without consulting the SEM. NB: standard errors tend to underestimate within-person variability.

Using norm-referenced scores to monitor learning. Standard deviation units: a useful scale, but difficult to understand and interpret because the values are fractional numbers. Percentiles: a large gain in percentile may represent a very small gain in score, especially in the middle of the distribution (false precision). Stanines: you need a gain of 2 stanines to be sure it is real (too coarse). Solution: more precise scale scores indexed to their degree of inaccuracy. Example: in asTTle, a 22-point gain is statistically significant.

Rank order scores: transformed. A transformation simplifies the distribution by moving the mean to an easier-to-understand value and changing the SD to an easy-to-understand value, which makes comparisons between scores easier. Examples: z-score, with mean = 0 and SD = 1, so about 2/3 of test takers score between -1 and +1 (but below-average scores are negative). asTTle v4 Reading/Maths: mean for Y5-7 = 500 and SD = 100, so about 2/3 of test takers in that age range score between 400 and 600. NB: recalibrated April 2009 (Level 2 Basic: 1250).

Problem with norm-referencing: we normalise on our own population, which implies that the best in my class must be good and the worst in my cohort must be really bad. BUT rank is independent of actual quality: it depends on who else is in the group, not on actual ability. A student who is bad in a strong group might be good on an absolute scale, and a student who is good in a weak group might be poor, on a scale running from Inadequate to Excellence.

asTTle + SEM. Person1 = 1500, SEM = 22; thus Person1's true ability range with 2/3 (68%) confidence is 1478 to 1522. Person2 = 1510, SEM = 22: Person2's score lies within 1 SEM of Person1's (a difference of 10), so their true abilities are most likely the same. Person3 = 1530, SEM = 22: Person3's score lies within 1 SEM of Person2's (a difference of 20), but NOT within 1 SEM of Person1's (a difference of 30). Thus, with 68% confidence, the most likely true abilities are Person1 = Person2; Person2 = Person3; Person1 < Person3.
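The slide's reasoning reduces to one rule of thumb: two scores are treated as different only when they are more than one SEM apart, so that neither score falls inside the other's band. This sketch encodes that rule with the slide's three scores; the function names are illustrative only.

```python
from itertools import combinations


def sem_band(score, sem, k=1.0):
    """Score +/- k SEMs."""
    return score - k * sem, score + k * sem


def likely_different(score1, score2, sem, k=1.0):
    """Rule of thumb from the slide: two scores differ beyond chance only if they
    are more than k SEMs apart, so neither lies inside the other's band."""
    return abs(score1 - score2) > k * sem


SEM = 22
people = {"Person1": 1500, "Person2": 1510, "Person3": 1530}
print(sem_band(people["Person1"], SEM))  # (1478.0, 1522.0)
for (n1, s1), (n2, s2) in combinations(people.items(), 2):
    print(n1, n2, likely_different(s1, s2, SEM))
# Person1 Person2 False / Person1 Person3 True / Person2 Person3 False
```

This reproduces the slide's non-transitive conclusion (1 = 2, 2 = 3, but 1 < 3). A stricter convention compares two scores using the standard error of their difference, SEM × √2 (about 31 points here); under that convention even Person1 and Person3 would not be distinguished.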

asTTle norms by year, quarter, and subject (NB: SEM = 22 points). Are differences beyond chance?
Year | Reading Q1, Q2, Q3, Q4 | Mathematics Q1, Q2, Q3, Q4
4 | 1301, 1306, 1317, 1333 | 1358, 1364, 1375, 1389
5 | 1346, 1360, 1372, 1390 | 1400, 1410, 1420, 1430
6 | 1403, 1416, 1425, 1426 | 1441, 1451, 1460, 1466
7 | 1430, 1436, 1447, 1453 | 1472, 1479, 1489, 1500
8 | 1462, 1474, 1489, 1494 | 1512, 1521, 1529, 1535
9 | 1497, 1499, 1507, 1519 | 1540, 1545, 1554, 1567
10 | 1529, 1539, 1545, 1567 | 1579, 1590, 1593, 1601
11 | 1590, 1612, 1621, 1628 | 1608, 1622, 1636, 1650
12 | 1636, 1643, 1652, 1657 | 1664, 1678, 1692, 1699

[Figure: asTTle norms, reading norm score (asTTle Reading Score, 1200 to 1700) by Year.Quarter.] NB: SEM = 22. Gains of more than 22 points are statistically significant.
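To answer "are differences beyond chance?" for typical growth, the reading norms can be compared directly with the 22-point SEM. This sketch transcribes the reading norms from the asTTle norms slide and counts how many expected gains over one quarter and over one year exceed the SEM. It applies the slide's more-than-22-points rule to norm (average) growth; it says nothing about any individual student.

```python
SEM = 22

# asTTle reading norms transcribed from the norms slide: year -> [Q1, Q2, Q3, Q4]
READING_NORMS = {
    4: [1301, 1306, 1317, 1333],
    5: [1346, 1360, 1372, 1390],
    6: [1403, 1416, 1425, 1426],
    7: [1430, 1436, 1447, 1453],
    8: [1462, 1474, 1489, 1494],
    9: [1497, 1499, 1507, 1519],
    10: [1529, 1539, 1545, 1567],
    11: [1590, 1612, 1621, 1628],
    12: [1636, 1643, 1652, 1657],
}


def as_sequence(norms):
    """Flatten {year: [Q1..Q4]} into one chronological list of quarterly norms."""
    return [score for year in sorted(norms) for score in norms[year]]


def expected_gains(sequence, quarters):
    """Expected norm gain over `quarters` quarters, for every possible start point."""
    return [later - earlier for earlier, later in zip(sequence, sequence[quarters:])]


seq = as_sequence(READING_NORMS)
for quarters in (1, 4):
    gains = expected_gains(seq, quarters)
    beyond = sum(g > SEM for g in gains)
    print(f"{quarters}-quarter gains: {beyond} of {len(gains)} exceed the SEM of {SEM}")
# 1-quarter gains: 1 of 35 exceed the SEM of 22
# 4-quarter gains: 29 of 32 exceed the SEM of 22
```

Typical quarter-to-quarter growth is smaller than the SEM, so a term's change for one student is usually indistinguishable from measurement noise, whereas most year-on-year norm growth is large enough to detect.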

[Figure: asTTle norms, actual vs. expected asTTle Reading curriculum levels (series: Mean Curriculum Level, Curriculum Expectation; y-axis: Curriculum Levels, 2.00 to 7.00).] What does the mismatch imply? Also consider whether the difference is bigger than the SEM.

Another way to index test scores: link the tests to the difficulty of learning expected by the curriculum.

[Figure: e-asTTle scores by curriculum level (NOT student grade or year), September 2010.]

Rank order scores are NOT enough: there is a temptation not to teach properly, because it is difficult to move a child's relative rank. What education needs are profile or criterion-related interpretations to guide decisions about what to teach next. These can be found in trait profiles and standards-based scores such as curriculum levels or asTTle scale scores.

Interpretation: absolute or criterion-referenced. Scores are compared to a fixed set of categories or criteria. Trait profile scale scores (sub-scores): each score is compared to the other sub-scores to develop a profile of strengths and weaknesses. Error classification: erroneous responses are studied and categorised according to type. Good tests provide both normative and criterion-referenced information.

Sub-test scales for a sample of NZ standardised tests (test: trait profile scale scores)
PAT Reading Comprehension & Vocabulary: meaning making with narrative, instructional, persuasive, and poetic texts; vocabulary in context.
PAT Mathematics: Number knowledge, Number strategies, Algebra, Geometry & Measurement, Statistics.
STAR Reading test: Word Recognition, Sentence Comprehension, Paragraph Comprehension, Vocabulary Range.
e-asTTle Reading: Processes & Strategies, Purposes & Audiences, Ideas, Language Features, Structure.
e-asTTle Mathematics: Number Knowledge, Number Sense & Operations, Algebra, Measurement, Position & Orientation, Probability, Statistics.
e-asTTle Writing (Revision 2012): purposes: Narrate, Recount, Describe, Explain, Persuade; scores: Ideas, Structure & Language, Organisation, Vocabulary, Sentence Structure, Punctuation, Spelling.

Using a standardised test requires understanding and following the standardised administration instructions if you want to compare results to the NORM population or to other groups. Check: aids? time? environment? prior teaching? age group? exclusion criteria?

NZ Assessment Toolbox: Secondary School (http://toolselector.tki.org.nz/Assessment-areas)
[Chart: assessment tools mapped against curriculum Levels 1 to 8 and school Years 1 to 13; Level 6 / Yr 11, Level 7 / Yr 12, Level 8 / Yr 13.]
SEA; Six Yr Net.
NZ Curriculum Exemplars (compulsory): English, math, HPE, science, social studies, technology, the arts; te reo Māori, pāngarau, pūtaiao, hangarau, tikanga ā-iwi, ngā toi, hauora.
STAR (Supplementary Test of Achievement in Reading).
PAT (Progressive Achievement Tests): Reading Comprehension, Vocabulary, Mathematics (& Listening Comprehension).
NEMP (1995-2010); NMSSA: in all ELA, Yr 4 and Yr 8.
ARBs (Assessment Resource Banks): English, Mathematics & Science.
asTTle (Assessment Tools for Teaching & Learning): Reading Comprehension, Writing, & Mathematics; Pānui, Tuhituhi, & Pāngarau.
Qualifications assessments: NCEA L1, NCEA L2, NCEA L3, Scholarship.

Exemplars (http://toolselector.tki.org.nz/Assessment-areas): assessed and graded samples of authentic student work, in English and Māori medium, levels 1-5. They include annotations on the samples, scoring rubrics, and clear links to NZ Curriculum objectives, supporting teachers to inform their judgments about student achievement against objectives.

PAT (Progressive Achievement Tests): Reading Comprehension and Vocabulary; Listening Comprehension; and Mathematics. Ages 7-14. Group administration, 30-55 minutes. All multiple-choice items. Parallel forms A and B for alternate years. Rank-order information only: norms as percentiles and stanines, but derived with item response theory scoring.

ARB (Assessment Resource Bank, http://arb.nzcer.org.nz/): assessment resources for teachers in English (levels 2-5), Mathematics (2-5), and Science (2-6) only. A broad range of assessment tasks, from simple objectives to extended responses in problem solving, including tasks from other sources (e.g. TIMSS), with a mixture of closed and open-ended items.

Sample ARB: this comprehension task assesses students' ability to evaluate the ideas and information in a transactional text about an environmental issue. Students are asked to read a text, then respond to four questions. Keywords: comprehension; evaluating; transactional; School Journal; persuasion. Publication date: 02/10/2006. Level 4, Making Meaning.

Key characteristics of asTTle: Curriculum, Choice, Calibration, Communication; NOT Central Control, Reporting, or Compulsory.
Read more:
Archer, E., & Brown, G. T. L. (2013). Beyond rhetoric: Leveraging learning from New Zealand's Assessment Tools for Teaching and Learning for South Africa. Education as Change: Journal of Curriculum Research, 17(1), 131-147. doi:10.1080/16823206.2013.773932
Brown, G. T. L. (2013). asTTle: A national testing system for formative assessment: How the national testing policy ended up helping schools and teachers. In M. Lai & S. Kushner (Eds.), A National Developmental and Negotiated Approach to School and Curriculum Evaluation (pp. 39-56). London: Emerald Group Publishing. doi:10.1108/S1474-7863(2013)0000014003

So what's a poor teacher to do? Use an IRT-developed test (asTTle, PAT) to get more accurate information. Check your own test questions before creating a total score and making decisions. Difficulty (% correct): remove items that 0% or 100% of students answer correctly. Discrimination (correlation with the total score): remove items with r < .10. Then calculate the total score and judge the cut scores: what % = pass, mastery, excellence, etc.?