Understanding Results in Modeling & Experiment Design

Delve into the challenging realm of understanding results in modeling, particularly in executing models multiple times and deriving statistical insights. Explore how experiment design plays a crucial role in configuring inputs for model runs and analyzing outcomes. Unravel the complexities of homogenous parallel servers and the implications for service configurations in modeling scenarios.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

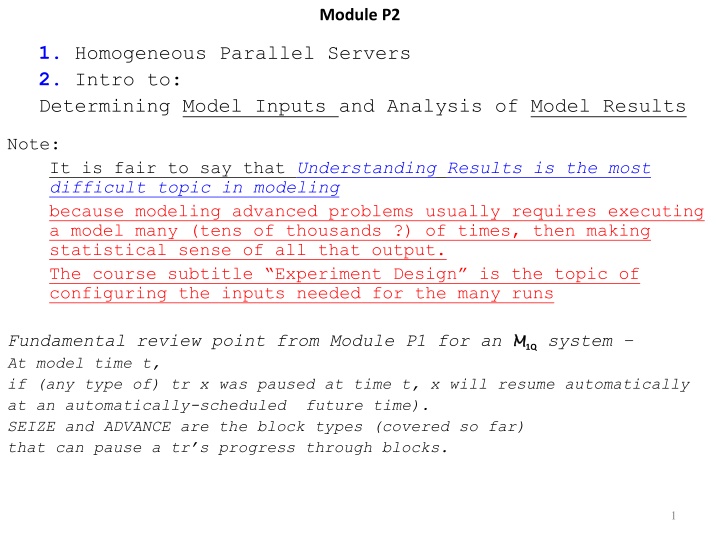

Module P2 1. Homogeneous Parallel Servers 2. Intro to: Determining Model Inputs and Analysis of Model Results Note: It is fair to say that Understanding Results is the most difficult topic in modeling because modeling advanced problems usually requires executing a model many (tens of thousands ?) of times, then making statistical sense of all that output. The course subtitle Experiment Design is the topic of configuring the inputs needed for the many runs Fundamental review point from Module P1 for an M At model time t, if (any type of) tr x was paused at time t, x will resume automatically at an automatically-scheduled future time). SEIZE and ADVANCE are the block types (covered so far) that can pause a tr s progress through blocks. M1Qsystem 1

Generalizing M M1Q to other service configurations We continue to assume Unless indicated otherwise, each customer service is assumed to be non-preemptive; also, conservation of customers holds; and, every server is 100% reliable; Server name scope The name of each server (gpss facility or other type) is global within a model, and that name is accessible to (can be referenced by) any tr type instance A more general server configuration than a single-server is a multiserver system, where a subset of the servers (not just one) could be allocated to each cj service. Multi-server configurations Def - n single servers combined as a homogeneous parallel server ; each server has identical service behavior, and each server is anonymous; each cj is allocated to >=1 free servers for its service Def -n single servers where the servers are not identical (some servers are faster than others or have special capability); such a configuration is a heterogeneous parallel server Def ss denotes a single server (such as, a gpss facility) Def a homogeneous parallel server is abbreviated as hps hps provides more general/flexible service scheduling hps simplifies IT center maintenance/troubleshooting (racks of networked pizza boxes ) Def hps degree, abbreviated nps = total number of identical servers (whether running or not) hps is quite common, both in human-made and natural systems: + any service counter with >1 clerk ( a Walmart multi-clerk RETURNS counter) + a pool of service connection listener processes that listen for internet connection requests + cooperative tasks in an ant colony Reference: https://www.nature.com/articles/s41598-018-30656-7 2

An hps example Parallelism is used in many S to do tasks where it is not immediately obvious Example Most relational databases SQL statements are inherently parallelizable - inherently meaning the system automatically parallelizes operations transparently to user Scanning (read all rows) of a 300 million row table Slow way: 1 process reads the whole table Faster way: 2 processes, each process runs on a different instruction execution core. Each process scans half of the table. Such a database system (in this case Oracle) can also cost-effectively determine an optimal number n of parallel processes for parallelizing each SQL statement. ---------------------------------------------------------------------------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Bytes |TempSpc| Cost (%CPU)| Time | Pstart| Pstop | TQ |IN-OUT| PQ Distrib | ------------------------------------------------------------------------------------------------------------------------------------------ | 0 | SELECT STATEMENT | | 17970 | 157K| | 494 (2)| 00:00:06 | | | | | | 1 | PX COORDINATOR | | | | | | | | | | | | 2 | PX SEND QC (ORDER) | :TQ10001| 17970 | 157K| | 494 (2)| 00:00:06 | | | Q1,01 | P->S | QC (ORDER) | |* 3 | FILTER | | | | | | | | | Q1,01 | PCWC | | 4 | SORT GROUP BY | | 17970 | 157K| 17M| 494 (2)| 00:00:06 | | | Q1,01 | PCWP | | 5 | PX RECEIVE | | 918K| 8075K| | 135 (1)| 00:00:02 | | | Q1,01 | PCWP | | 6 | PX SEND RANGE | :TQ10000| 918K| 8075K| | 135 (1)| 00:00:02 | | | Q1,00 | P->P | RANGE | | 7 | PX BLOCK ITERATOR | | 918K| 8075K| | 135 (1)| 00:00:02 | 1 | 28 | Q1,00 | PCWC | | 8 | TABLE ACCESS FULL| SALES | 918K| 8075K| | 135 (1)| 00:00:02 | 1 | 28 | Q1,00 | PCWP | | ------------------------------------------------------------------------------------------------------------------------------------------ In this example, a SQL statement is the customer and database parallel server processes and instruction execution cores are the (combined software + hardware) hps that processes the SQL statement. | | | | | | 3

The simplest Heterogeneous server ; valid modeling of system entities 2-servers (2-clerk service model), the servers having different service characteristics. Heterogeneous service can be more complex than homogeneous systems (even the 2-server case) becauseservers can vary in their service duration distribution, allocation might involve server preference by cj, etc. => scheduling servers has options & is more complex. (gpss for the 2-clerk model does require user-developed blocks logic to handle cj scheduling because a gpss scheduler does not implement the general heterogeneous 2-server case) Def a model s accuracy in representing a system is that model s validity Validity requires correctly representing S s 1) Structure and 2) Behavior 1 ) Structure - Consider service queue representation: Queue discipline many possible algorithms for selecting the next item waiting in the queue to start service: FIFO, LIFO, priority, etc. Also, might have Jockeying (repositioning), Skipping (leave queue, violating FIFO order), Jumping (from one wait queue to another) and Reneging (c leaves S before c starts service) Queue configuration a) common queue = one queue used by all cj, regardless of assigned server, OR b) >=2 distinct queues. Unless indicated otherwise in this course, each service queue is a common queue, regardless of the kind of service configuration, & no queue involves jockeying/skipping/reneging. 2 ) Behavior - Correctly specify not just mean/min/max Ai values, but also a mathematical distribution of Ai values (recall your study of distributions in Stat50 / Eng115) 4

More advanced GENERATE operands for first hps model More advanced GENERATE operands for first hps model From Chapter 7 of the gpss Reference Manual: GENERATE A GENERATE Block creates Transactions for future entry into the simulation. GENERATE A,B,C,D,E (there are 5 possible operands providing flexible entity creation patterns) GENERATEOperands A - Mean inter generation time. Optional. The operand must be Null, Name, Number, String, ParenthesizedExpression, or DirectSNA. You may not use Transaction Parameters. Note ! The used or missing A and B operands along with gpss documentation of their meanings, determines the specific Ai distribution B - Inter generation time half-range or Function Modifier. Optional. The operand must be Null, Name, Number, String, ParenthesizedExpression, or DirectSNA. You may not use Transaction Parameters. C - Start delay time. Time increment for the first Transaction. Optional. The operand must be Null, Name, Number, String, ParenthesizedExpression, or DirectSNA. You may not use Transaction Parameters. D - Creation limit. The default is no limit. Optional. The operand must be Null, Name, PosInteger, String, ParenthesizedExpression, or DirectSNA. You may not use Transaction Parameters. Note ! Default no limit allows for the possibility of a non-terminating simulation E - Priority level. Optional. Zero is the default (= lowest priority). The operand must be Null, Name, integer, String, ParenthesizedExpression, or DirectSNA. You may not use Transaction Parameters. (specifies the relative priority of different tr types) 5

Language elements for parallel server models Language elements for parallel server models Modulo expressions and Review - RN1 is used implicitly (i.e. internally) by gpss to produce random numbers, as needed by a model RNj@val is an expression based on RNj for some j, 1 <= j <= 8. RNj is an SNA (i.e., a built-in function) RNj returns a value in [0-999] ( vs. random functions that use [0-.999999] {Reference Manual, sec. 4.15} ) Example: Command - -> SHOW RN1 will display the value returned by an RN1 call in the .sim window: Modulo expressions and Savevalues Savevalues 09/16/20 07:50:35 SHOW RN1 < - - SHOW expression displays in x.sim file the value of expression 09/16/20 07:50:35 241.0000000 < -- int value in [0,999] RNj@val returns the remainder) of division of RNj by val, giving a random int value in [0,(val-1)] SAVEVALUE - entity type that implements the concept of a named variable in modern languages Each savevalue MUST be initialized (aka declared) using INITIAL statement; values can be stored into and retrieved from any savevalue ; each savevalue is a global entity, and is accessible to every tr Demo:RNjexpr_and_savevalue_Demo.gps < = Single-step the code (Copy is on homePage) ; cj service duration is random in range [2,3]. RNj based expressions can be subexpressions in arbitrary gpss expressions. clear arrMean equ 5 ; Ai mean is constant value 5 Seed2 equ 398759 ; Seed RN2 rmult ,Seed2 ; RN2 is seeded, but not RN1; the , skips the A operand corresponding to RN1 initial x$procDuration,0 ; Initializecj s service duration to value 0 (x$ prefix required on a savevalue name) cj generate arrMean ; A cj arrives every arrMean t.u. s (It is a constant Ai distribution) savevalue procDuration,(RN2@2+2) ; Temporarily store value (2 + rem(RN2 call)/2) in savevalue procDuration advance x$procDuration ; cj does its service (x$ is required for retrieving a savevalue s value) The SAVEVALUE block s B operand reviews the coding practice: parenthesize each block operand expression Note: advance (RN2@2+2) is a way of combining the above savevalue and advance blocks into one block terminate 1 ; cj leaves S 6

suspending a truntil a condition/state changes until a condition/state changes The TEST block The TEST block suspending a tr A GATE block, and the more flexible TEST block has 2 purposes: 1. Pausinga tr based on a comparison result (and Resuming when comparison result becomes true) (Rough analogy: Java suspend() a running thread placed in wait state OR simpy yield eventToWaitFor) or 2. Conditional branching TEST block - use only operands A and B in our first use of TEST block = => The gpss language designers divided the Pausing and Test-and-branch capabilities into several specialized block types. Each TEST block type has a specific combination of operands. The simplest form of TEST block is: TEST O A,B,C < - - 1 space between TEST and relational operator O, and Tab between O to operand columns O - Relational operator. Relationship of Operand A to Operand B for a successful test. Required. O must be E, G, GE, L, LE, or NE (for each of the 6 common numeric and string comparison operators) A - Test value. Required. The operand must be Name, Number, String, ParenthesizedExpression, SNA, or SNA*Parameter. B - Reference value. Required. The operand must be Name, Number, String, ParenthesizedExpression, SNA, or SNA*Parameter. That is, the O operator evaluates the relationship between the value of operands A and B C - Destination Block number. Optional. Operand must be Null, Name, PosInteger, ParenthesizedExpression, SNA, or SNA*Parameter. A TEST Block operates in either "Refuse Mode" or "Alternate Exit Mode". In either case, operands A and B are evaluated and compared. If Operand C is not used, the TEST Block operates in Refuse Mode. When a tr attempts to enter a Refuse Mode TEST Block, and the test result is false, the tr is paused, i.e. not allowed to enter the TEST block until the test condition becomes true. When the condition becomes true, the blocked tr automatically enters the TEST Block and proceeds to Next Sequential Block (NSB). As with SEIZE, gpss manages TEST entry automatically 7

How a TEST block is much more general than a SEIZE block How a TEST block is much more general than a SEIZE block SEIZE facilityName when a tr x encounters SEIZE, gpss automatically calls built-in function f$facilityName and returns value 0 or 1. If 0 is returned, then facilityName is idle, and x can enter the SEIZE block and take ownership of the facility. Otherwise returned value 1 indicates that facilityName is busy, and x is placed in the facility FIFO wait queue VS TEST o A,B,C in this discussion, assume that the C operand is not present. With missing C operand, TEST operates in Refuse Mode When the C operand is present, TEST can implement a branch (transfer control to another address) TEST is much more general because 1 ) any (arithmetic or string) comparison operator o can be applied to operands A and B; the comparison logic must be coded by the model developer 2 ) A and B can be arbitrary expressions 8

gpss implementation gpss implementationof a hps - 1 Part #1 Outline of hps Creation and Initialization For a gpss facility, the only source code needed is SEIZE, and no initializations of any kind. Implementing an hps in a gpss model is more involved than a facility: Need 2 steps: hps Creation 1 ) psName STORAGE some_int 2 ) hps simulates powering up the hps by an initializer tr Such an initializer tr (refer to as init_ps) runs 1 time (assumes server never fails, so no re-starts needed) In most models, init_ps would be scheduled to run at model time t = 0 (i.e., GENERATE s C operand) init_ps has only one action (in gpss, only one block other than GENERATE & TERMINATE x): ENTER psName,init_int ; power up init_int of the some_int servers hps Use by each arriving Cj Assuming init_ps has executed, z of the servers in psName are requested by Cj s tr X when X does: 1 ) the condition test: z <= (current number of free servers) 2 ) tr X is paused until z servers are free for use, and then X can use z anonymous servers 3 ) when 1) is satisfied, tr X proceeds to the next block (i.e. a LEAVE block) and acquires z servers LEAVE psName,z ; Allocate z free servers to Cj; z must be <= (current number of free servers) : : < -- blocks to process tr x s actions 4 ) When finished with service, tr X must do ENTER psName,z ; Give back the allocated z servers to psName If z does not satisfy the condition test in 1) , a runtime error results (z <= w is not tested by model translation) Correctness note Suppose Ck failed the TEST block condition; then Ck is paused and remains paused until other concurrent Cj finish their use of enough servers; then Ck automatically proceeds to LEAVE block. Similar to SEIZE, gpss does data structures and bookkeeping for implementing pause/resume for you ; Server config is hps, nps = some_int 9

gpss implementation of an gpss implementation of an hps hps 2 2 Part 2 Part 2 Model service parameters Model service parameters The source code is different for acquiring servers in the parallel service (ps), but specifying service distributions is also needed as for a single server (ss). (In some of what follows, the previous ps and ss abbreviations are used ) Note - Modelsource code below omits RNj seeding ps_ia_mean ps_service_mean EQU ; ps_request value = 1 = (number of requested servers) is the simplest service scenario, per cj ; In more complex services, ; In more complex services, ps_request ps_request is a random variable, that is, is a random variable, that is, ps_request ps_request EQU 1 ; Each cj requests 1 free server INITIAL x$ps_svrDuration,0; Initialize variable that temp. saves each cj service duration Symbol definitions and processing parameters 1 1 .67 .67 ; Ai mean; simplify stats calculations with mean = 1, thus rate = 1 ; Service duration mean, per server, thus service rate = (3/2) = 1.5 EQU ps_request >= 1 >= 1 ; gpss SNA (built-in function) named M1 returns a tr s (currently) accumulated residence time ps_ResidenceTime TABLE M1,0.5,0.5,20 ; cj residence duration distribution ps_serviceDuration TABLE x$ps_svrDuration,0.5,0.5,20 ; cj service duration distribution 10 4/8/2025

gpss implementation of an gpss implementation of an hps Part#3 Part#3 The service logic code The service logic code hps 3 3 storage storage 8 8 ps ps ; This tr runs exactly one time at elevated priority 1 (priority 0 is default); this forces init_ps tr to execute first init_ps init_ps generate generate 0,,0,1,1 0,,0,1,1 ; Define nps value (must be posInteger) ; Initially create nps servers, exactly one time, at t = 0 ps ps, , 8 8 enter enter < -- Must be numeric ; Initialize, i.e., power on all servers for cj use terminate terminate ; Do not decrement tc: init_ps is not a cj tr type cj cj generate generate (Exponential(1,0,ps_ia_mean)),,,10000 (Exponential(1,0,ps_ia_mean)),,,10000 ; exponential Ai distri., limit is 10000 cj arrivals ; If all servers are busy, arriving cj is suspended by TEST; as soon as >=1 server become(s) free, cj proceeds queue queue ps_res_time ps_res_time ; Start residence duration stats region for this cj TEST GE TEST GE s$ps,ps_request s$ps,ps_request ; cj waits if there is not at least one free server ; Here because >= 1 server is free; thus, cj is guaranteed to immediately get a free server queue queue ps_svr ps_svr ; Start cj service duration stats region leave ps,ps_request ; Allocate one server from the free ones savevalue ps_svrDuration,(Exponential(1,0,ps_service_mean)) ; Temp save cj s expon.-distributed service duration tabulate ps_serviceDuration ; Add cj s service duration to histogram cell advance x$ps_svrDuration ; Do cj service for x$ps_svrDuration t.u. enter ps,ps_request ; Finished cj service, so give back cj s server to hps depart depart ps_svr ps_svr ; Finish of cj service duration stats region depart depart ps_res_time ps_res_time ; Finish residence duration stats region for this cj tabulate ps_ResidenceTime ; Add cj residence duration to wj histogram terminate 1 ; cj leaves service center, and decrement tc Note It is possible in hps for THIS REGION of blocks to be executed by more than one tr a the same model time. More about this soon. 11

Part#4 Part#4 - - hps hps scheduling complexities scheduling complexities Assuming the following ideal properties are implemented for an hps as for a ss 1. No server in a hps ever fails 2. Each cj service is non-preemptive 3. Each service duration is > 0 t.u. 4. No simultaneous arrivals per tr type, but >= 2 different tr type entities COULD BE CREATED at same time 5. Conservation of customers, i.e., each cj that arrives is eventually served 6. Each cj arrival (aj), start service (sj), and finish service (fj) is a simple event taking 0 t.u. 7. To keep hps models simple in this course, assume that when cj requests m > 1 servers, all m servers are requested and later released at the same model times. hps scheduling cj for service is more complex than ss service - Examples A ) It is possible for more than one cj to start service at the same model time (for nps > 1) Let nps = 4 in separate examples A) and B) below. Notation: aj(n) means: cj arrives and will (eventually) request n servers; and sj stands for cj starting service, and fj stands for cj finishing service. ------------2------------4------5------6------------8------------------------------------------ t Both c2 and c3 must wait until c1 finishes because just after s1 there is only 1 free server. All 4 servers will be free at time 6, thus, both c2 and c3 can start service at time 6 a1(3) a2(2) a3(2) f1 s2 s1 s3 B ) Simultaneous finish times are also possible ------------2------3------4------------6------------8----- t a1(2) a2(1) f1 Assume c1 has service duration 2, and c2 has service duration 1; at t=3, c2 can start f2 Thus, c1 and c2 finish at the same time

gpss implementation of an gpss implementation of an hps hps 5 5 Part#5 Part#5 More discussion/explanation for some of the Demo More discussion/explanation for some of the Demo hps The init_ps tr is created with GENERATE 0,,0,1,1 ; Schedule exactly one init_ps tr at model time 0 operand D is 1; if Operand D is null, then indefinitely-many init_ps tr would be created at t=0 because (by default) there would be no creation limit for this type of tr -- clearly, not what we want. hps code code operand E is 1; this overrides the default tr priority value 0 (lowest priority level) Doing this ensures that the init_ps tr is the first tr that executes when the model starts running, and runs at t = 0 because 0 will be the time increment of the first (and only) execution of an init_ps tr We do not want any cj tr instances to execute before the servers have been powered up Code sequence : SAVEV -- > TAB - -> start of ADV in our hps demo ( start of ADV means the tr now scheduled on FEC ) is possibly a model correctness problem consider the question: If tr X is executing these blocks at model time t, what if tr Y is also executing these same blocks at time t ? ps_svrDuration is a global variable, so consider the following interleaved sequence of tr X and tr Y actions at the same model time t a) tr X executes the savevalue block and saves value 5 and starts its pause via ADVANCE b) tr Y executes the same savevalue block at same model time as X, and Y saves 3 and starts its pause via ADVANCE ------------------------------------------- Is the sequence: a) followed by b) a possible Problem ??? Answer: it might be, depending on other event timing logic in the model. What we do know is that the SAVEVALUE variable (refer to as svv) value 5, written by tr X, has been overwritten to 3 by tr Y. The meaning of svv s value is the service duration of an Cj tr. The problem is that after tr Y starts service, any other observer tr Z will not know which of tr X and tr Y has its service duration value stored in svv. The fact that svv is a global-in-the-model variable causes the ambiguity. 13

gpss Report comparing two hps runs of our hps code: only difference is hps degree Case 1: nps=2 (=STORAGE A operand) exp. mean 1 for Ai distr, & exp mean 0.67 for service distr; 100000 cj QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY PS_RES_TIME 15 0 100000 0 0.756 0.755 0.755 0 PS_SVR 2 0 100000 0 0.670 0.670 0.670 STORAGECAP. REM. MIN. MAX. ENTRIES AVL. AVE.C. UTIL. RETRY DELAY PS 2 0 0 2 100002 1 1.330 0.665 0 0 TABLE MEAN STD.DEV. RANGE RETRY FREQUENCY CUM.% STORAGE UTIL. display is SS_RESIDENCETIME 0.000 0.000 0 misleading! PS_RESIDENCETIME 0.755 0.734 Case 2: nps=8: : exp., mean 1, interarrivals distr, and exp, mean 0.67 service distr; 100000 cj QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY PS_RES_TIME 7 0 100000 0 0.668 0.668 0.668 0 PS_SVR 7 0 100000 0 0.668 0.668 0.668 0 STORAGECAP. REM. MIN. MAX. ENTRIES AVL. AVE.C. UTIL. RETRY DELAY PS 8 0 0 8 100008 1 7.332 0.917 0 0 TABLE MEAN STD.DEV. RANGE RETRY FREQUENCY CUM.% SS_RESIDENCETIME 0.000 0.000 0 PS_RESIDENCETIME 0.668 0.671 0 Unlike FACILITY stats, = (1-UTIL.) for STORAGE entities on Report. is 4 times less for nps=8 vs. nps=2 ( .08 vs. .32) This illustrates linearly reducing by adding servers (this example achieves theoretically ideal speedup ). This speedup relationship does NOT hold in a real S where hps servers must compete for resources as they serve each Cj There is some service waiting for ps=2 because AVE.TIME is slightly larger for residence duration vs. service duration (0.755 vs 0.670) VS. ps=8, no Cj waits as service & residence duration are both 0.668, and QUEUE PS_RES_TIME MAX=7 < 8=nps

Concurrent execution of sequences of gpss blocks Concurrent execution of sequences of gpss blocks The reason for pause/suspend during attempted block entry is fundamentally the same for both SEIZE and TEST blocks: a condition to permit block entry to SEIZE/TEST is not satisfied Given gpss blocks b1 bn, where each bj has Refuse Mode None . Also assume no bj is an ADVANCE. Recall that such blocks bj will NEVER pause/suspend an executing transaction. = > By default, all blocks bj execute at the same fixed model time t AND no bj can be paused. Then, in theory, at model time t, any number m>=1 of such block sequences B could be executed. Also, no two of such executing sequences B can interleave execution of their blocks. Generally, the order of execution of >=2 such block sequences is random (as determined internally by gpss). If priority is required in a model, transactions of different types can have different priorities. Some Q & A Q#1 For gpss in general, could tr X interrupt tr Y? A#1 YES, but we will not model interrupts in our gpss work in CSC148 Q#2 Will the second half of CSC148 involve models where process functions (simpy terminology corresponding to a gpss transaction) can interrupt other process functions? A#2 YES Q#3 In M service duration x for C1 (first customer). Could other trs and/or events start/happen before Z finishes? M1Qassume n(t)=0 when tr Z = GEN SEI ADV REL starts at time t. Also assume ADV determines A#3 YES. When the ADV block starts, the tr Z is suspended at time t, and Z will not resume until time t+x. == > For any y < x, at any time in the interval [t,t+y] other trs (such as C2 arrival) can occur 15

Some Obscure statement syntax in the Student version of gpss Some Obscure statement syntax in the Student version of gpss - - 1 of 2 1 of 2 Here are the quirky block/statement syntax examples that 1) a gpss beginner is most likely to encounter and 2) that can be difficult to diagnose. They are the result of source code parser limitations rather than gpss system flaws. 1. Do not put any whitespace (not even one space/blank character) between a comma and a next operand Example: GENERATE Aoperand, Boperand will NOT post a model translation error. Instead, operands following the , are ignored. In this block example the B operand is ignored and Boperand is treated as the C operand. Then operands 10, 7 produce a constant, mean 10 distribution instead of the intended uniform distribution. Moreover, 7 becomes the C operand. NOT THE INTENTION! 2. Surround operands that are gpss expressions with () Example: GENERATE exponential(1,0,ia_mean) should have the operand parenthesized (gpss docs use the term parenthesized expression ). This code results in the translation error 09/25/20 23:51:45 Model Translation Begun. 09/25/20 23:51:45 Line 25, Col 11. Invalid character in GPSS Statement. Operand A. 09/25/20 23:51:45 generate exponential(1,0,ia_mean) ; ia distr. is exp(ia_mean) 09/25/20 23:51:45 **** Model Translation Aborted **** Correct the error by coding the A operand as (exponential(1,0,ia_mean)) The third call argument is the distribution mean. This might conflict with your statistics background or some textbook: the exponential distribution parameter, , is the rate, not the mean. 16

Some Obscure statement syntax rules in the Student version of gpss Some Obscure statement syntax rules in the Student version of gpss 2 of 2 3. Each STORAGE statement operand must be a positive integer (cannot be a symbol or an expression) 2 of 2 Example: numServers EQU 64 my64 storage numServers generate 5 terminate 1 09/26/20 10:33:51 Model Translation Begun. 09/26/20 10:33:51 Line 2, Col 13. Invalid name in GPSS Statement. Operand A. 09/26/20 10:33:51 my64 storage numServers 09/26/20 10:33:51 **** Model Translation Aborted **** Unfortunately, this does violate the symbolic coding principle: avoid numeric values as block/statement operands (Fortunately, storage is one of the few violations of symbolic coding in the gpss language) Debugging techniques A) AC1 is the built-in function that returns current model time B) The SHOW Command is used to display the value of any model expression. It can be used at any time during a model run when the run is either terminated or suspended C) Setting a breakpoint A block of the form STOP ,label (A operand is not used in this block mode) will suspend a model run whenever the code is about to enter the block at location label . When execution suspends at this place, SHOW can be used, an .rpt file can be dumped, and various other kinds of analysis can be done. 17

Proof of formula for a ss Def x^ stands for a statistical value of random variable x observed in a real S or a simulation of S . Def - denotes the true, theoretical utilization in S. By Law of Large Numbers, for stable system S, ^ -- > as longer & longer simulations are done. For stable S, assume/use here: Little s Law (abbr. LL ): L = * w, where L = avg(number of cj in S) Utilization in a single-server system with arbitrary Ai and service duration distributions Def - E(s) = expected or mean value of avg(service duration) = 1/ = reciprocal of the service rate Now consider just the server itself (ignore the queue) as an entire system S . Apply LL as follows: Cj is in S when Cj is being served, otherwise Cj is not in S when Cj is not being served (instead, Cj would be waiting in the system containing Cj) So, in S, avg(w) = avg(service duration) = E(s) = 1/ (1) Holds because the only residence time component is the service duration, when, by definition, Cj is in S avg(number of Cj in S) = by definition = L^ = (T T0)/T , where T0= total time in [0, T] where there are no Cj is S. In n(t) vs t graph for S , n(t) can only have values 0 or 1. L = avg(number of cj is S ) = (total time for which n(t)=1) / T = , by utilization definition Thus, L = andby LL and by (1), L = w = = = so in any stable ss, = (1) ( ( ) ) n(t) Example: If A1,A2,A3 are 12, 9, and 5, and T=35, then L^ = 26/35 1 0_0_________A1________ A2_______A3 ___T - - > t Note - In an arbitrary S with service queuing, L is generally not equal to (as it was in restricted S above) Example: In M M1Q with Ai and services exp distr., we later show: L = ( ) thus, for L > . In h1 code, with both Ai and service duration exp. Distributed, with and shown, => L = 0.7/0.3 = 2.33 gpss runs: Start 100000 & Start 2000000 give L^ = 2.341 & 2.329 respectively => L^ slowly converges to L 18

S Server erver stability and stability and hps hps utilization formula proof utilization formula proof M1Q Service Utilization and System Stability in M Suppose in M1Q Service rate = departure rate from the server = Cj /t.u. Thus, by (0), ss LQ = avg(number of Cj in the queue) will increase indefinitely at a rate of ( ) Cj/t.u. = = >Therefore, stability requires (as stated in module P1) that or equivalently < 1. Terminology: and its distribution are often (in textbooks & published papers) referred to as the offered load on S. It quantifies the workload that must be processed by the server. A note on LL: it actually should be stated as: avg(L) = * avg(Wcj) where is assumed constant. Utilization formula for hps having degree nps; each cj requests 1 free server Re-using the argument for utilization in the 1-server case just proven, consider a system S as the homogeneous multiserver only (that is, the waiting queue is not included in S) It can be shown (and we will assume) thatLL also holds in hps (assume 1 server per cj) Then, by Little s Law, L = = ( ) ( ( ) ) But the expression ( ) must be adjusted for parallel servers, as follows: We know that 0 <= L(t) <= nps (because we are omitting the queue from the restricted scope of S). Also, measured as (the % of busy servers) = L / nps Substituting for L in (2) gives: In any stable hps, = = ( ( nps ) ) means avg(% of all nps serversthat are busy) A stability requirement for a hps is < 1, so for an hps with nps > 1, nps Note1 - it is remarkable that nether LL nor the derivations of depend on the Ai and service distributions. (However, recall that the above derivation assumes each cj is served by exactly 1 server) Note2 - h1 and our demo hps system both satisfy LL (for sufficiently-long simulation duration) (0) (2) ( ( ) ) 19

Erlangs formula Erlang s formula Little s Law (LL) was historically preceded by the work of Dutch telephony scientist Agner Erlang. Erlang is best known as an original contributor to networking traffic analysis (decades before the Internet). He developed the following equation that clearly is LL stated in telephone system context: (concurrent number of in-progress phone calls) = (call arrival rate)*(average call duration) Agner Krarup Erlang Born 1 January 1878 L nborg, Denmark Died 3 February 1929 (aged 51) Copenhagen, Denmark Occupation Mathematician, statistician, and engineer Demo that LL holds in our nps = 2 hps model we just finished covering: With exponential distributions and rates = 1 and = 1.5 , and RN1 seed = 255074, do: 100000 cj, L = .rpt value = AVE.CONT. = 0.753, and since = 1, and w = AVE.TIME = 0.753 => in agreement with Little s Law, correct to 3 places to right of decimal point Finally, S is stable (required by LL) since, substituting into the stability condition for ss: nps = 1/(2*1.5) = .33 < 1 Thus, S is stable. 20

Choosing gpss histogram operands Choosing gpss histogram operands - - 1 of 2 1 of 2 Correctly displaying an rv s distribution in histogram form must be done carefully. Otherwise, important properties of the distribution can be mis-represented / visually mis-leading. Illustration: h1, with exponentially distributed Ai and service duration, with same distribution means as h1, compare 2 histograms of cj residence duration, Wcj; 10000 cj; all else, including the seed is the same Run#1 histogram D operand = 5 FACILITY ENTRIES UTIL. AVE. TIME AVAIL. OWNER PEND INTER RETRY DELAY BARBER 10001 0.709 7.060 1 10001 0 0 0 1 QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY RES_TIME 19 2 10002 0 2.529 25.167 25.167 0 W_BARBER 18 2 10002 2857 1.820 18.108 25.349 0 The largest mass occurs for the largest range of Wcj values. This indicates that 1) more histogram cells are needed and 2) the horizontal axis must expand to the right so that the distribution of values > 17.5 is revealed. 21

Choosing gpss histogram operands Choosing gpss histogram operands - - 2 of 2 2 of 2 Run#2 histogram D operand = 60 FACILITY ENTRIES UTIL. AVE. TIME AVAIL. OWNER PEND INTER RETRY DELAY BARBER 10001 0.709 7.060 1 10001 0 0 0 1 QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY RES_TIME 19 2 10002 0 2.529 25.167 25.167 0 W_BARBER 18 2 10002 2857 1.820 18.108 25.349 0 A glance at the .rpt section that displays the above distribution in table format shows that 99% of the histo mass lies to the left of the cell at about 100 (translates into the left-most 20 cells; this illustrates how .rpt histogram data can help in choosing TABLE C and D operands): RANGE FREQUENCY CUM.% 97.500 - 102.500 50 98.60 102.500 - 107.500 24 98.84 The important point is that experimentation is needed for histo displays for initial model runs. Run#1 histogram s rightmost cell oontains most of the count mass. Run#2 reveals many w values greater than the mean, but each such value occurs with very low frequency. Notice that the x-axis length for Run#2 is 10 times larger than for Run#1. 22

Analyzing and modeling Simulation Inputs h3 will be a data collection in-the-field problem 23

Intro to: Data collection for Input Modeling & Model Output Analysis Intro to: Data collection for Input Modeling & Model Output Analysis h1 illustrated strong dependence of model results just on assumed Ai distribution A major part of model Analysis involves Input Modeling for any S Example: Assume a stable ss has = 70%, or expressed as a fraction, = 0.7. For exponentially distributed Ai and service durations, theoretically, L = / (1 ) = 0.7/0.3 = 2.33. However, if Ai and service duration distributions are replaced by uniform distributions, having the same means then (with variance = (1/2)*mean), L is approximately 0.80, => approx. 3 times less Determine Model inputs (Part of building appropriate model) The basic steps for simulating a stochastic (contains randomness) S are: 1. Gather data d (often referred to as the raw data ) during S operation or simulation. VS. in course assignments, the instructor provides Model inputs and statistical properties. Ex: h1 ss barber queuing model: Ai & service distributions and their parameters are given. When modeling a real S, random variable distributions are not known in advance. Significant expense & effort must be spent measuring S behavior and collecting d. 2. Determine suitable representationsof d in model (done using distribution fitting ) 3. After a model is implemented, acceptance by the project client includes: Validate that the simulation model represents S with sufficient accuracy = = > Quote by statistician George Box, 1976 - All models are wrong, but some are useful Of course, what Box means is: no model exactly emulates all details of complex S , but Box also means - when a model is sufficiently accurate, it can be very useful Interpret Model results model (Does the model produce acceptable results?) 24

M MnQ nQ model stability model stability Validating the first version(s) of a new model can be challenging. One form of sanity check uses the fact that Little s Law (LL) holds in any stable model for any Ai and service duration distributions. Look at some hps server examples If LL does not hold, the model design/logic may have errors, OR the model is actually unstable. Below are 2 examples, one where LL holds, and another where LL does not hold The hps examples below use: = 1 and service duration mean = 1/ = 4; Example 1 M8Q that is, an hps with nps = 8 and exponentially distributed Ai and service durations; each cj requests 1 server; load free scenario; 100000 cj processed. LL holds. QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY CJW 22 6 100006 0 4.056 4.063 4.063 0 Report UTIL.^ observed = 50.1%; theoretically = ( ) = 1/(8*(1/4)) = 1/2, agreeing with gpss Example 2 Same distributions as Example 1; each cj requests a uniformly-distributed number of servers in the range [1,5] load free scenario; 25000 cj processed QUEUE MAX CONT. ENTRY ENTRY(0) AVE.CONT. AVE.TIME AVE.(-0) RETRY CJW 9654 9647 34647 0 4612.745 4568.551 4568.551 0 STORAGE CAP. REM. MIN. MAX. ENTRIES AVL. AVE.C. UTIL. RETRY DELAY PS 8 4 0 8 68667 1 0.007 0.001 9644 0 LL does not hold in this unstable model. Theoretically, = /(nps* ) nps=8, but, cj can request multiple servers, on average 3. This has the effect of reducing nps to (nps/3). Thus is estimated as 25 1 / ((8/3)*(1/4)) = 12/8 > 1 There are 9647 cj waiting when the model finished !

Choice of model process distributions Case study Choice of model process distributions Case study - - 1 of 4 1 of 4 Suppose you were hired to design a DES simulation of customer flow through an existing service Suppose you were hired to design a DES simulation of customer flow through an existing service center such as storeReturnsDesk or a web site s call Center, etc.). center such as storeReturnsDesk or a web site s call Center, etc.). The project must The project must Measure & Evaluate this system s Efficiency Measure & Evaluate this system s Efficiency , as measured by values such as: , as measured by values such as: avg(queueLength), avg(service duration), avg(cj residence duration), (operations cost/hour), etc. avg(queueLength), avg(service duration), avg(cj residence duration), (operations cost/hour), etc. All service (ss and hps) modeled so far has 2 things in common: 1. there is ONE service execution, i.e., one visit in S for service, per cj 2. Ai and service duration distributions are Unchanged during S operation Service models emphasized in first half of this course: many S are service entities, AND most large complex S usually have many subsystems that are service entities Best Practice: Sample arrival & service info. MUST be gathered early in project Analysis phase, long before any DES model coding Significant challenges in data collection - 1. Data GATHERING can be time consuming, complicated, and costly 2. Events and S behavior can be time-of-day (tod) dependent. If S has tod dependence, a model for S must implement different distributions for different ranges of model execution time (i.e., distributions morph/change over 24-hour periods) 26

Choice of model process distributions Case study Choice of model process distributions Case study - - 2 of 4 In many real S, we can manually capture the times when simple events (aj, sj, fj) occur. During data collection, the analyst must be stealth/unobtrusive: the analyst should NOT interfere with S s operations, nor in any way influence cj arrivals/departures and/or other behaviors A data sample d shown below, is assumed to be in a spreadsheet. Left 2nd - 5th columns are gathered manually. The others, cj#, w and (next Ai time) can be auto-calculated by the spreadsheet. Table Data set d = Donut shop Customer Arrivals/Service/Departure (during a non-busy time) 2 of 4 t.u. = seconds AI and service distributions are user-defined (i.e. raw recorded data) Cj# Cj Arrival TIme L at Cj arrival (not counting Cj) Cj Cj W (sec) Next Ai time (sec) StartService FinishService 1 10:04:40 0 10:05:00 10:07:30 170 - 2 10:04:58 1 10:07:45 10:08:40 222 18 3 10:11:30 0 10:11:45 10:12:15 45 392 4 10:16:05 0 10:16:25 10:17:10 65 etc. 5 10:17:40 0 10:17:52 10:18:29 49 6 10:27:20 0 10:27:43 10:30:02 162 7 10:44:55 0 10:44:58 10:45:28 30 8 10:45:00 1 10:45:30 10:47:40 160 9 10:47:20 1 10:47:45 10:49:51 151 10 10:48:00 1 10:49:55 10:50:50 170 11 10:57:45 0 10:57:50 10:58:42 57 12 10:58:40 1 10:58:48 11:00:00 80 27 13 11:02:38 0 11:02:50 11:03:50 72

Choice of model process distributions Case study Choice of model process distributions Case study - - 3 of 4 Discussion about this small sample data set d 3 of 4 Assume 1 t.u. = 1 minute; Data collection interval == > T = 1 hour; 13 arrivals; max(L) = 1, min(L) = 0 6 arrivals in first 30 minutes, 7 arrivals in last 30 minutes == > approx. constant in equal-sized intervals An approx. constant is good . In general, difference among time intervals of data is caused by: * changing mean, but distribution remaining fixed (means same probability mass fcn (pmf)) OR * changing distribution (one distribution transforms into another distribution) Approximated arrival rate for d = (total number of arrivals)/(total model time) = 13/60 cj/minute. d exhibits some very common Ai distribution properties observed in many real-world S: #1 Arrival events are somewhat rare (for an appropriate t.u. scale) #2 Arrivals are independent the number of arrivals in disjoint intervals are independent random variables #3 The average rate of events per t.u. is constant, and two arrivals cannot happen at the same time G2 is pmf of frequency of Xi = number of 5-minute intervals having Xi arrivalsx6) frequency frequency x | x | x | x x | x x (3)x (3) | x I x | x x x | x x I x x x | x x x (0) x (1) _____30 _____60 _____90 _____120 _____150 _____180 _____210 _____ W _____0 _____1 _____2 _____3 _____4 Xi G1:Distribution of residence durations W G2: Distribution of 5-minute intervals having xi arrivals G1 is bi-modal, likely from (short customer service & wait) vs. (long duration customer service & wait) G2 In G2, the pmf has: (0,6), means there were six 5-minute time intervals having 0 arrivals, (1,3) means three 5-minute intervals with 1 arrival, etc. But d is insufficient data for determining G2 s distribution. 28

More about the G2 distribution More about the G2 distribution The G2 pmf has so few points that its distribution is not obvious many common distributions could have a similar such small number of pmf graph points (but Slide#34 gives a clue !) It is important to thoroughly understand the significance of G2 S observation for T = 60 minutes: imagine dividing [0,60] into equal-sized y-minute intervals. Specify y=5 for G2 Other interval sizes y could be chosen. (Generally, larger should choose smaller interval size because the Ai distribution we will work with assumes rare event occurrences, per interval). Because we want to model rare events per time interval y, y must be chosen large enough so that events are in fact rare.. d is a very small sample, but nonetheless, it illustrates How To Organize & Analyze d. Scaling the x-axis for G2 What are the possible arrival counts (aka, number of arrivals Xi) in a random 5-minute interval? Consider Xi as a random variable To scale the x-axis, determine the range of observed Xi values First, Xi < 0 is impossible (because it is an event count) Clearly Xi = 0 or 1, or other small counts are possible (in fact in G2, there were 6 of the 5-minute intervals having 0 arrivals). There was a TOTAL of 13 Xi observations distributed over the 5 Xi values 0,1,2,3,4 Also, in the G2 x-axis, Xi > 4 is irrelevant because the largest 5-minute arrival count Xi was 4. ==> In general, with a histograms of rv frequencies,here, Xi being the rv, use the largest occurring Xi value as the right-most point on the histogram s x-axis 29

Distributions behaviors Case study Distributions behaviors Case study - - 4 of 4 What is Good and what is Bad about our example d ? 4 of 4 The Good + With proper setup/preparation, data is easy to collect (no behaviors/events to possibly miss). Can automate several of each cj s stats using a spreadsheet For example, for a given cj, w = (time service finishes) (time of arrival) = fi ai. + There are some sanity (meaning data quality) checks that can be applied to data sets (such as LL) The Bad there is a lot! 1. G2: as a distribution type is inconclusive: its shape is unlike any well-known distribution 2. d is too small of a sample. Recall from statistics - a data sample must be large enough to include all representative behaviors. This translates into a requirement for a Minimum Number of Data Points, mndp for d. Guidelines for mndp will be covered soon. Note: Many intro. Stats courses recommend mndp=30. In truth, mndp depends on S and model objectives (the point being that there is nothing magic/bullet-proof about the number 30 ) The time of day (tod) is very relevant in industries such as fast food, donut shops, service counters, etc. (that is why many donut shops close by noon every day, but open at 5AM). Also, 16-18 hours per day operations such as Starbucks are sensitive to rush hours. To have the most validity, a simulation should be validated for ALL offered loads (arrivals scenarios). A direct consequence is: n data collections having the mndp property are required, not just one, because the arrivals process (i.e. its distribution) might change over time interval of interest. 30

Validating Littles Law for Donut shop data Validating Little s Law for Donut shop data Little s Law (LL) For a very wide range of service systems S with a waiting line, in the long run (means: over an arbitrarily long time), a stable system S satisfies the equation L = * w, where L is the average number of customers in S, each cj uses 1 server, even in an hps server; is the rate of customer arrivals (must be constant) , and W is average customer residence duration. (First proved mathematically by John Little in 1961) ( L and w are steady-state (i.e. long-term average) values over an entire model run ) Let c denote a customer, and 1 t.u. = 1 minute < -- define this for ensuring calculations consistency = => We illustrate: LL holds, even though d is insufficient sample size for inferring stat. conclusions L = avg(L) = ( (the value of L as each customer c arrives) ) / (total number of c) = 5/13 c, = (number of c that arrived) / (observation duration) = 13/60 c/minute, and W = (total time spent in S by all c) / (total number of c) = (1433 seconds / 13) seconds/c but, must convert to minutes, giving ((1433/60) / 13) seconds/c = 1.84 minutes/c Then L = (5/13) cc= 0.3846 c, and * w = ((13/60) c/minute) * (1.84 minutes) = 0.2167 * 1.84 = 0.3987 c. Thus, the values from even this very small sample d are in quite close agreement with LL. Since LL is so easy to calculate, use it as a simple check for consistency of the raw data gathered for a service application. 31

cj service staging cj service staging In all models so far, either single-server or parallel server, each arriving cj has access to one service process, after which cj leaves S In a more general service scenario, each cj is served by a sequence of n >= 1 service processes. There are many such examples: A fast-food driveThru (FF) a service in FF can have multiple servers & windows/stages such as; order/pay/get food FF illustrates a sequence of exponential services, each service having (usually different) service duration mean mk A FF system in gpss represents a service sequence by: ADVANCE serviceDuration1 < - - first service : : : ADVANCE serviceDurationn < -- nth service In the rest of this module, focus mainly on aspects of arrival distributions. Our work with arrival distributions can usually also be applied to many other random variables in a model. 32

Exponentially Exponentially- -distributed event occurrences distributed event occurrences Very common properties observed for many event types (whether S is a service system or not):: a) events are relatively rare in equal-sized time intervals [t1,t2] in [0,T] b) event independence: in each [t1,t2] the number of events is an identically-distributed rv c) event rate has a constant mean over [0,T] We usually (but not always) focus on arrival events as the event type of interest in this module A single Ai arrival stream with properties a), b), and c) involves2 relevant distributions: 1. Poisson ( isdistribution of number of arrivals in each small fixed-size time interval y in [0,T]) 2. Exponential (is distribution of wait time between arrivals, i.e., the Ai distribution) Poisson provides distribution of number of events occurring in small fixed-size intervals, and Exponential provides distribution of Ai times We will use the following mathematical result in this course: When Poisson is determined to be distribution of arrival counts, then the Ai distribution will be exponential. The converse is also true. ** Textbooks/Practitioners say Poisson (arrival) process and exponential (arrival) process Variations of a single-stream exponential-based service distribution depend on service configuration: Exponential (only ONE service station/stop per cj) vs. Hypoexponential = sequence of (>= 2 service stations/stops per cj)each an exponential distribution having equal or different means Hypoexponential example Fast Food Drive Through with >= 2 windows to navigate 2-Window Example: Order food at Talk Box (= service#1) - - - > Pay and Get Food order (=service#2) Likely an exponential process, mean m1 Likely exponential process, mean m2 22

Poisson distribution Poisson distribution pmf for a Poisson-distributed random variable X has characteristic twin peaks = max. probabilities; the peak exists, regardless of the mean for any member of this family of distributions. k (the x-axis scale) is range [0,a small pos. int] of relative event frequencies seen in fixed-size intervals As the chart indicates, the Poisson distribution becomes less useful as increases beyond 7 or 8. For each value, the colored circles correspond to probability of k events occurring per small time interval. The dots of a given color are joined to produce a continuous curve (the curve serves to make it easier to discern the points of the function for a given ) ). Keep in mind that Poisson is a discrete, not continuous, distribution. Discrete because possible event counts Xi are only non-negative int values. Examples of Poisson distributed processes that have been modeled well: 1) Expected number of pieces of mail in a household, per day 2) Number of decay events, per second, from a radioactive source 3) Number of phone calls to a call center per hour 4) Deviation from the mean in residence utility (house utility voltage fluctuates more than you think) Notice visually, as increases, the pmf flattens (and approaches a normal distribution) Poisson_and_exponential.gps(on 148 homePage) <-- model histogram matches =1 pmf above Next 2 slides histograms from Poisson_and_exponential.gps model runs --> In source code, a user-defined approximation to exponential CDF used, not the built-in exponential(x,y,z) pmf above

Exponentially distributed Ai process Results distributions for 1 million arrivals Ai mean = 1.0 t.u. Ai sequence for cj has a continuous exponential distribution corresponding to the Poisson interval arrivals count distribution. Each interarrival time x is a real (can be integer or fractional) number > 0 The left-most histogram bar is the number of Ai values satisfying 0 < Ai < 1; similarly, the second left-most bar is the number of Ai values satisfying 1 <= Ai < 2. etc. The (almost 0) probabilities (histogram bar heights) for x values > 15 visualizes the POSSIBLE interarrival values, such as 30, but the high IMPROBABILITY of such a value occurring . Working with continuous distributions often involves determining prob(a < x < b), that is, the probability that the value of random variable x is in the range between a and b. 35

Poisson distributed arrival process gpss model of Poisson arrivals ModelResults distributions for 1 million arrivals a) Choose a fixed-size time interval as 1 t.u. and b) on average, 1 arrival per t.u.. Relative frequencies (i.e., bar heights) of arrival counts 0 vs. 1 vs. 2vs. are shown in histogram below Count of arrivals in equal-sized intervals will be a discrete distribution Min. possible count is 0: left-most bar = relative frequency of fixed-size intervals where there were 0 arrivals. Next smallest possible count is 1 (that is why cells in fractional ranges are all empty) giving the relative frequency of time intervals where there was 1 arrival, etc. The twin peaks of a Poisson distribution (even for this small mean) are evident. That is, 0 or 1 event occurring in an interval is equally-likely. Note The histogram (shape) is dependent on the SIZE of the fixed-length time intervals. 36

Poisson distributed arrival process calculated frequencies/probabilities gpss histogram bar heights are STATISTICAL (obtained from a gpss model run) so they are simulation model-generated, not theoretical values. In contrast to the (statistically-based) histogram bars derived from simulation, the table below of probabilities is obtained by a Python function that evaluates the THEORETICAL Poisson distribution frequencies at the indicated j values for the Poisson distribution having mean 1. Possible arrival count j 0 1 2 3 4 5 >5 (Source code: somePoissonPMF_evals.py on course homePage) Probability (X = j) for j = 0, 1, 2, 0.36787944117144233 0.36787944117144233 0.18393972058572117 0.06131324019524039 0.015328310048810098 0.0030656620097620196 Not displayed Twin peak probabilities = 1/e The probabilities in this pmf will play a fundamental role in distribution fitting, studied soon. Poisson is derived from a seemingly unrelated process: a sequence of coin flips Rest of this module Origin, Derivation & Use of the Poisson distribution 37

Evolution of distributions: Bernoulli Evolution of distributions: Bernoulli -- -- > Geometric Consider one flip of a coin, with its simple 2-point pmf. This is one binary trial, aka Bernoulli trial The Bernoulli distribution pmf below is simple 2-point function. p = prob(H is result of 1 coin flip) 1-p - x (T, (1-p)) p x (H,p) H ------------T 2.Let y be a random variable whose value is the number of independent trials until a first success . Success meaning is application-dependent. Let p be prob that a trial is a success and q = (1-p) is prob of a trial failure. => This experiment has the geometric distribution The pmf is p(x) = qx-1p for x=1, 2, 3, , and 0 otherwise. The ith trial is success is independent of trial (i-1) result, thus, the q and p probability factors are multiplied to calculate p(x) Many applications: part reliability/time-to-failure, etc > Geometric -- -- > Poisson > Poisson 1. The random variable is the result of one trial, i.e., Head(H) or Tail(T) 3. More general than geometric distribution, let X be a random variable for the probability of x successes, in any order, from n Bernoulli trials = => This is the binomial distribution. The pmf for X is The above expression often denoted: b(x; n, p) = > binomial distribution has parameters n and p. For X=x, (n-x) trials were failures {as with successes, which trials fail does not matter} For x = 0, meaning 0 successes, P(X=0) is (1-p)n and when ALL trials are success, P(X=n) = pn The two terms of P(X=x) having powers x and (n-x) represent the number of successes and failures respectively from n trials. The coefficient is the number of ways for x successes, namely, n! / ( x!(n-x)! ) 38

Poisson as limit of Binomial distribution Poisson as limit of Binomial distribution For example, with p=0.5 and n=2, P(X=1) =2! * (.5)1*(.5)1 = 0.5. Let f and s stand for failure and success, respectively, per trial. Then {ff,ss,sf,fs} = the complete sample space. 2 of the 4 experiments had exactly 1 success). Thus P(X=1) is 2/4 = 0.5 Is the Poisson distribution related to the binomial distribution? Surprisingly, Yes, Poisson is the limit (consider huge n) of the Binomial distribution s pmf Binomial random variables: When n independent Bernoulli trials are performed, each trial has result success or failure. Each trial has constant probability p of success and (1-p) of failure. Let x = number of successes in n trials. Your application/system defines what a trial success vs. failure means: an arrival occurred or did not occur, a customer sale happened or did not happen, a phone call was received or no call occurred), etc. 4. A Poisson distributed random variable can represent a binomial random variable with parameters (n,p) when n is large and p is small <- prob(event success should be small) -- > A Poisson process models rare, independent events with constant rate over a long time. Do this by mathematically specifying the binomial distribution s parameters n, p, and x , taking into account the Poisson process properties. Specifically, consider the binomial distribution for VERY LARGE n, VERY SMALL p, and x ranging over small int values >= 0. 39

Poisson as limit of Binomial distribution Poisson as limit of Binomial distribution Let = (number of events that occurred)/T = (number of events) per t.u., assumed constant Elapsed time T is interpreted as n Bernoulli trials. Suppose n = 100, and that p = (probability of a trial s success) = .42. We expect 42 events in 100 trials, so = n*p. The mean of the binomial distribution is np. Omit the proof, but assume it from now on. Example: for n=2 trials, and p=0.5, n*p = 2*0.5 = 1 => avg number of successes for 2 trials is 1 Expressing p as /n, and with terms re-arrangement, P(X=x) for the binomial distribution, can be rewritten as: n(n-1) (n-x+1) x(1 /n)n nx x! (1 /n)x . . . (1) When n - -> and is small, the left-hand factor (i.e., black terms ratio) approaches 1 We are considering only small x values vs. n. Thus, factors of the form (n-j)/n are each approx. 1. Thus, the left-hand factor (the ratio expression colored black) - -> 1 Denominator of right-hand factor (colored orange) - -> 1 since /n -- > 0 as n - -> . By calculus, limit n -> (1 /n)n- -> e- The limit of ( (red middle ratio)*(Numerator of the right factor) ) gives familiar Poisson pmf: P(X=x) - -> e- ( x / x!) = e- ( x / x!) , for x = 0,1,2,3, for small int x . . . (2) P(X=x) is relative frequency (i.e.) probability that x events occur in an observation interval. Note: There is no interpretation/assumption in the above mathematical analysis about WHAT kind of event is involved. Simeon Poisson invented this distribution for 40 predicting the outcome of French jury verdicts for various civil cases.

Notes on Simeon Poisson first applications of the Poisson distribution French mathematician, engineer, and physicist, who made several major mathematics and physics advances. The Poisson Distribution is named for its discoverer, who first applied it to the deliberations of French juries; in that form it did not attract wide attention. The classic Poisson example is the data set of von Bortkiewicz (1898), for the chance of a Prussian cavalryman being killed by a horse kick. Ten army corps were observed over 20 years, giving a total of 200 observations of one corps for a one-year period. The period or module of observation is thus one year. The total deaths from horse kicks were 122, and the average number of deaths per year per corps was thus 122/200 = 0.61. This is a rate of less than 1. It is also obvious that it is meaningless to ask how many times per year a cavalryman was not killed by the kick of a horse. In any given year, we expect to observe, well, not exactly 0.61 deaths in one corps (that is not possible; deaths occur in modules of 1). Here, then, is the classic Poisson situation: a rare event, whose average, but constant, rate is small, with observations over many small intervals of time. Sim on Denis Poisson (1781 1840) 41

Random variable (aka variate) value generation - 1 of 2 Many simulations need random variable values that follow a given distribution. Simulations usually use random numbers for generating values of a random variable. Outline here one widely-used approach. Example:pdf for f(x) = 1/a, on interval [0,a], a > 0, is a uniform distribution The probability density function (pdf) f(x) has constant height, so f(x) = (1/a), that is, f(x) does not depend on x prob 1/a --------- 0__xi_____a For any 0 <=xi <=a, prob(x <=xi)= from 0 to xi (1/a)dy = hatched region s area = ((y/a) evaluated at xi) ((y/a) evaluated at 0) = (1/a)*xi The integral of f(x) is (1/a)*x often written F(x); F(x) is the cumulative distribution function (CDF) of the pdf. For any x in f(x) domain, probability(x <= xi) = (1/a)*xi (1) Example1:prob(next xi value) <= (a/3) = (1/a)*(a/3) = 1/3. Example2: prob(next xi is between a/3 and a/2 = (1/a)*((a/2)-(a/3)) = 1/6. The CDF for the uniform distribution is easy to work with: although we used calculus to derive the CDF, the graph/geometry of f(x) is simple. Create uniformly-distributed random variable values xi on [0,a] by: 1) get a random number 0 < ri< 1 and 2) Consider ri as the probability in (1). Then ri = (1/a)*xi, and solving for xi gives xi = a*ri Using (2), each given ri corresponds to one uniformly distributed rv value a*ri. = > the inverse function a*x of the CDF (1/a)*x is applied to the CDF to solve for xi: a * (1/a)*xi = a * ri (2) 42

Random variable (aka variate) value generation - 2 of 2 Generating values for an arbitrary distribution can be difficult (not simple as with the uniform distribution) Inverse Transform technique In theory, Inverse Transform method can be used for any distribution D, but is easiest to compute when the CDF of the pdf of D, has an inverse function, F-1 (Inverse function, NOT algebraic reciprocal). Saw that F(x)=(1/a)*x has F-1(x)=a*x Another example: f(x)= x2and g(x)= x1/2 and g(f(z) = (z2)1/2 = z <-- g is f-1 Objective: given a sequence of uniformly distributed random numbers 0 < R < 1, compute corresponding sequence {Xi} of values that are distributed according to pdf of specified distribution D. Illustrate inverse transform for F(X) = the CDF of exponential distribution. Use CDF function inverse as in uniform distr, but finding its CDF inverse is harder. From calculus, exponential pdf has CDF: F(X) = R = 1 e- x, X > 0, mean 1/ ...(a) (Given (a), need to invert , i.e., get the X value that follows the exponential CDF given the probability represented by random number R; Fortunately, we can solve the equation (a) for X by recalling that ln(x) and ex are inverse functions: R and X are indexed as Ri and Xi; each Ri generates exp. distributed value Xi. In the right equality of (a), solving for Xi: Xi = -(1/ )*ln(1-Ri) . . .(b) => (b) means: For given random number 0 < Ri< 1 for i = 1,2,3, evaluate r.h.s of (b) to get Xi, an exponentially distributed random value. Note Deriving (b) used the fact that if 0 < Ri < 1 the same is true for (1-Ri) Library function call to ln and one multiplication needed for calculating an Xi, and1 / is a constant => (b) is efficient Note - The gpss library function exponential() uses (b) for producing exponentially distributed values Note - If the CDF for a given pdf does not have an inverse, then (often complicated) approximation algorithms are used for calculating random values for a given pdf and its CDF. 43

Exponential variate value generation, source code, using Python3 Python 3.6.1 implementation of exp variate generation From the Python Version 3.6 Documentation (9.2.2. Power and logarithmic functions)math.log(x[, base]) With one argument, return the natural logarithm of x (to base e). With two arguments, return the logarithm of x to the given base, calculated as log(x)/log(base). import math import random # Random number & seeding module Note: Python automatically re-seeds, per run # User prompt for an int or float rate value rate = float(input("Please enter a numerical exponential distribution rate: ")) assert (rate > 0 and isinstance(rate,float)) # Distribution mean must be positive and type float print("User-input exponential distribution rate: ", rate) def exprand(lambdr,rNumber): """ Return an exponentially-distributed random variate with RATE lambdr Each variate value is generated using the Inverse-transform method """ return -math.log(rNumber) / lambdr # Display 20 exponentially-distributed values having a specified rate for j in range(20): rNum = random.random() # Get a random number in the interval (0,1) print ("Random number: ",(1-rNum)," Exp-distr. variate: ",exprand(rate,1-rNum)) (Source code: expVariates.py) - Note: to make the demo transparent, symbolic coding not employed 44

Exponential variate value generation, source code, using Python3 (Source code: expVariates.py) - Note: to make the demo transparent, symbolic coding not employed Please enter a numerical exponential distribution rate: 1 User-input exponential distribution rate: 1.0 1-(nextRandom number): 0.1334033194429115 Exp-distr. variate: 2.0143782624300535 1-(nextRandom number): 0.0072988636041446675 Exp-distr. variate: 4.920036613610841 1-(nextRandom number): 0.24882506990076014 Exp-distr. variate: 1.3910051599266688 1-(nextRandom number): 0.5445545875596253 Exp-distr. variate: 0.6077870889993243 1-(nextRandom number): 0.35782909336584556 Exp-distr. variate: 1.0276997994025083 1-(nextRandom number): 0.19051833470915847 Exp-distr. variate: 1.65800684385422 1-(nextRandom number): 0.30214636042995147 Exp-distr. variate: 1.1968437418206694 1-(nextRandom number): 0.7117766089938408 Exp-distr. variate: 0.33999116821357855 1-(nextRandom number): 0.33048165918396744 Exp-distr. variate: 1.107204115378612 1-(nextRandom number): 0.3405197283935717 Exp-distr. variate: 1.0772822155891417 1-(nextRandom number): 0.5469050977532725 Exp-distr. variate: 0.6034799874929792 1-(nextRandom number): 0.39181172324065494 Exp-distr. variate: 0.9369738524271075 1-(nextRandom number): 0.10795670824386583 Exp-distr. variate: 2.226024981813427 1-(nextRandom number): 0.44023274402569923 Exp-distr. variate: 0.8204517282270388 1-(nextRandom number): 0.8989265935823852 Exp-distr. variate: 0.10655390125659035 1-(nextRandom number): 0.09889991414326649 Exp-distr. variate: 2.3136469084704654 1-(nextRandom number): 0.9010583633710386 Exp-distr. variate: 0.1041852472552186 1-(nextRandom number): 0.3784024773285808 Exp-distr. variate: 0.9717968949744152 1-(nextRandom number): 0.936467515785283 Exp-distr. variate: 0.06564044452791082 1-(nextRandom number): 0.8934890268932894 Exp-distr. variate: 0.11262122549523604 45

Exponential variate value generation Some exponential distribution pdfs with various rates Probability density function Dddddd CDFs for various values pdfs for various means Given a random number 0 < ri < 1, thinking of ri as a probability, then xi = -(1/ )*ln(1-ri) is the domain value in the pdf for which a random value y obtained from this distribution is < xi has probability ri. Graphically, probability( y is < x ) is the cumulative probability = the value of the CDF at x = height of the CDF graph at x Question: What is the probability that an exponentially-distributed with mean 1 random variable X s value is < 1 ? Answer: visually, approx. .62 (dashed red line intersection with the purple CDF) The diagram is, of course, not perfectly accurate. Mathematically, the much more exact answer is: Prob(y < 1) = prob(exp distributed rv y is less than mean 1) = CDF evaluated at 1 = 1 e- x where x and are both 1 = (1 1/e) = 1 - .368x = approx. .632 ( just above 0.6 on p(X<= x) ) y-axis 46

gpss DIY generating exponentially distributed random values - 1 of 2 ; genExpDistrValues.gps ; exponential CDF interpolation calculations in gpss ; WJM, CSC, CSUS ; Usage: Start nValues+1, nValues defined in source code Model Termination tc <- 0 ; The following is a 24-point approx. to an exponential distr.'s CDF ; 1) the inter-arrivals are Exponentially distributed, and, therefore ; 2) the frequency of arrivals within equal-sized intervals is Poisson distributed. ; Note - corresponding pmf has probability 0 for domain points > 8 RN1Seed EQU 394857 rmult RN1Seed ; 24-point Approximation of the CDF, mean 1, for exponentially-distributed values ; The pairs ... /num1,num2/..., called the "function follower" are the x,y coordinates of ; the CDF for the exponential distribution EXPDIS FUNCTION RN1,C24 0.0,0.0/.1,.104/.2,.222/.3,.355/.4,.509/.5,.69/ .6,.915/.7,1.2/.75,1.38/.8,1.6/.84,1.83/.88,2.12/ < -- If y is a random variable distributed exponentially .9,2.3/.92,2.52/.94,2.81/.95,2.99/.96,3.2/.97,3.5/ .98,3.9/.99,4.6/.995,5.3/.998,6.2/.999,7.0/.9997,8.0 ; Because expdis is a "random" (gpss terminology) function (see function doc), ; during each expdis call, each RN1 value is converted to a random real "rr" in (0,1) of greater precision; ; then expdis returns the interpolation of the second component of the function follower's first component ; that is "closest" via linear interpolation to rr ; Example: suppose a random# returned by RN1 is converted to the greater precision value 0.8000348, call it "pv" ; pv is extremely close to the first coordinate of pair /.8,1.6/, thus the value returned to user is ; interpolated to a value slightly larger than 1.6, call that value "1.6plus" ; Thus, for mean=1 prob(an exponentially distributed value) is <= 1.6plus = pv (as sketched in class last time) ; Abbreviation: "exp" for exponential/exponentially with = 1, then 0.8 = probability(y <= 1.6) 47

gpss DIY generating exponentially distributed random values - 2 of 2 driver_p nValues clear EQU EQU 1 100 ; driver tr parameter, each driver tr writes one exp distr value ; Number of exp distr values to create ; Define model execution error interrupt vectors ; Whenever ANY tr branches to the B operand label, model execution suspends ; at the block whose label is that B operand stop ,openError stop ,writeError ; File open tr openFile generate 0,,,1,1 ; Open the file one time as first tr executed open ( gpssExpValues.txt"),1,openError ; Create file of exp distributed values terminate ; tr to calculate and store successive exp distributed random values, mean = 1 driver generate 1,,,nValues ; Each tr writes one exp distr value, limit of nValues tr instances assign driver_p,fn$expdis ; Store next exp distr value in this tr s parameter named driver_p write p$driver_p,1,writeError ; Write next exp distr value to file terminate 1 ; File management fileMgt generate (nValues+1),,,1 ; Run one time after nValues values were written by driver tr instances close ,1 ; Close file terminate 1 ; Last termination of model run < -- How to set a breakpoint ; Error handling breakpoints openError terminate writeError terminate ; Here if file open failed ; Here if a file write failed (Default: The OPEN block s filename A operand is in the same folder/directory as the source file) sampleExpValues.txt 48