Virtual Switch Extension for Adaptive CPU Core Assignment

Explore the Virtual Switch Extension (VSE) for adaptive CPU core assignment in softirq, addressing challenges in datacenter multi-tenancy and network virtualization. Learn about performance impacts, existing models, and solutions for efficient packet processing and load distribution.


Presentation Transcript

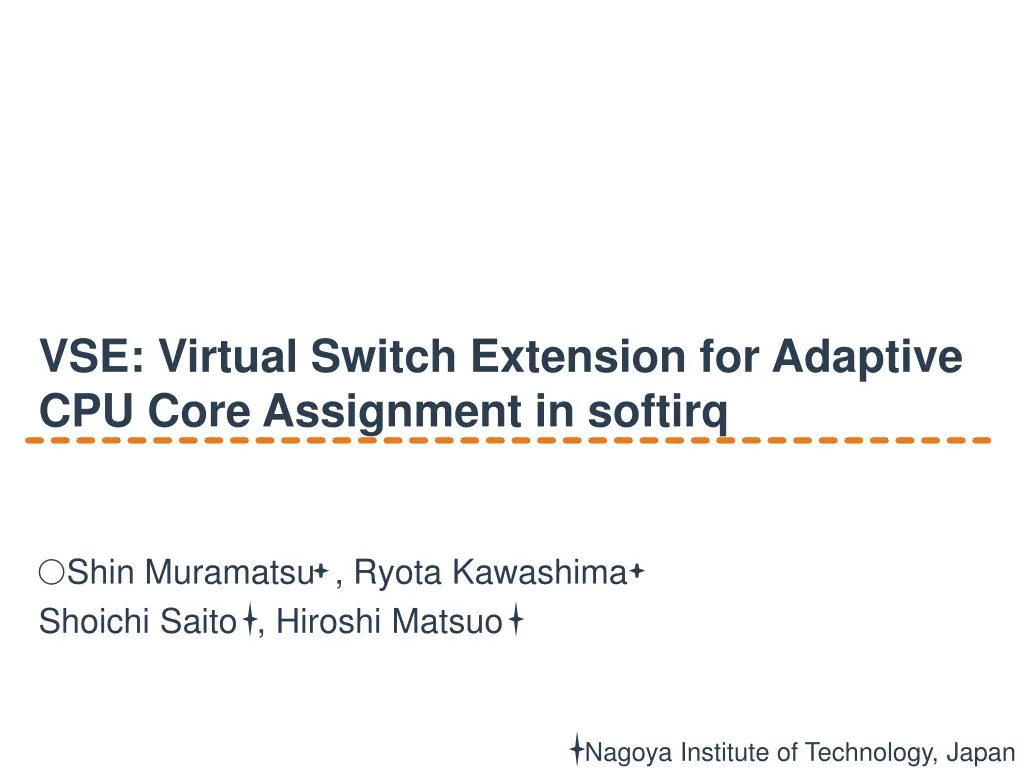

VSE: Virtual Switch Extension for Adaptive CPU Core Assignment in softirq

Shin Muramatsu, Ryota Kawashima, Shoichi Saito, Hiroshi Matsuo
Nagoya Institute of Technology, Japan

Background

Public cloud datacenters (DCs) are spreading, and many of them support multi-tenancy: VMs of multiple tenants run on the same physical server. An overlay protocol enables network virtualization.

Overlay-based Network Virtualization

[Figure: VMs of tenant A and tenant B run on two physical servers connected by a traditional datacenter network. The virtual switch on one server encapsulates packets with a tunnel header, the packets cross an IP tunnel, and the virtual switch on the other server decapsulates them.]

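Problems in Receiver Physical Servers

[Figure: a four-core physical server hosting VM1 and VM2. A packet arriving at the NIC raises a hardware interrupt (HWIRQ) handled by the driver, and is then processed in software interrupt (SWIRQ) context by the protocol stack, VXLAN, and the vSwitch.]

Load distribution is required.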

Receive Side Scaling (RSS)

[Figure: an RSS-enabled NIC whose dispatcher feeds four queues (Queue 1 to Queue 4). Each queue is served by its own driver, protocol stack, VXLAN, and vSwitch path on one of the four cores, where VM1 and VM2 also run. The figure marks a flow collision and a flow/VM collision.]

The queue number is determined by hash values calculated from packet headers.
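The hash-based queue selection described on this slide can be sketched as follows. This is a minimal illustration, not the NIC's actual algorithm: CRC32 stands in for the hash (real NICs typically use a Toeplitz hash), and the addresses, ports, and the function name `rss_queue` are hypothetical. It shows why collisions are unavoidable: the mapping depends only on header fields, never on how busy a core is.

```python
import zlib

NUM_QUEUES = 4  # the slide shows a NIC with four receive queues


def rss_queue(src_ip, dst_ip, src_port, dst_port, num_queues=NUM_QUEUES):
    """Map a flow to a receive queue from a hash of its header fields.

    CRC32 is only a stand-in; it is not what RSS hardware computes.
    """
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % num_queues


# Five hypothetical tunnel flows arriving at the same receiver
# (4789 is the UDP port assigned to VXLAN).
flows = [(f"10.0.0.{i}", "10.0.1.1", 40000 + i, 4789) for i in range(1, 6)]
queues = [rss_queue(*flow) for flow in flows]

# With more flows than queues, at least two flows must share a queue.
for flow, queue in zip(flows, queues):
    print(flow, "-> queue", queue + 1)
```

Because the same headers always hash to the same queue, a heavy flow stays on its core for its whole lifetime, even if that core is also running a VM.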

Performance Impact of Two Types of Collision

Two cases were measured: (1) two flows were handled on the same core, and (2) a flow and a VM were handled by the same core. The tunneling protocol was VXLAN + AES (heavy-cost processing).

[Figures: throughput (Mbps) versus packet size (64, 1400, and 8192 bytes), comparing non-collision with collision. One panel covers the 2-flows case and the other the flow-VM case.]

Packet processing load was concentrated on a particular core. The core was used for packet processing instead of the VM.

Problems in Existing Models

The core for HWIRQ is selected deterministically. Heavy flows can therefore be processed on one particular core, and heavy flows and VMs can be processed on the same core. In both cases performance decreases.

Proposed Model (Virtual Switch Extension)

VSE is a software component for packet processing at the network driver. VSE determines the CPU core for SWIRQ, and it considers the current core load so that an appropriate core is selected. VSE has an OpenFlow-based flow table: controllers can manage how flows are handled, and priority flows are processed on low-loaded cores. Vendor-specific functionality is not used, so the vendor lock-in problem can be avoided.

[Figure: VMs of tenant A and tenant B above VSE, which holds an OF-based flow table and sits at the network driver.]

Architectural Overview of VSE

[Figure: a controller inserts flow entries into VSE on each physical server. VSE sits at the network driver, between the traditional datacenter network and the VMs of tenants A and B, and relays packets. VSE holds two tables: a flow table (Match: L2-L4 headers; Actions: SWIRQ core) and a core table (Number, Load, VM_ID).]

How VSE Works

[Figure: an RSS NIC hashes incoming packets to Queue 1 to Queue 4. At the driver, VSE looks up each packet in the flow table (Match: VM1's flow; Actions: SWIRQ core number) and keeps a core table (Number, Load, VM_ID) that records each core's load and the core on which VM1 is running. SWIRQ processing (protocol stack, VXLAN, vSwitch) then runs on the selected core.]

A VM's flows can be matched.
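The two tables on this slide can be sketched as plain data structures. This is a rough sketch under stated assumptions, not the authors' implementation: the names `CoreEntry`, `least_loaded_free_core`, and `lookup_softirq_core`, the string flow keys, and all load values are hypothetical. The "least-loaded core without a VM" rule is also only an assumed policy, since the slides list adaptive, load-based retargeting as future work.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CoreEntry:
    number: int            # core number, 1-based as on the slides
    load: int              # current load in percent (hypothetical unit)
    vm_id: Optional[str]   # VM running on this core, or None


def least_loaded_free_core(core_table: List[CoreEntry]) -> int:
    """Assumed policy: prefer cores without a VM, then pick the lowest load."""
    free = [c for c in core_table if c.vm_id is None]
    candidates = free or core_table  # fall back to all cores if none is free
    return min(candidates, key=lambda c: c.load).number


def lookup_softirq_core(flow_table: Dict[str, int], flow_key: str) -> Optional[int]:
    """Return the SWIRQ core named by a matching flow entry, or None."""
    return flow_table.get(flow_key)


# Hypothetical core table: VM1 runs on core 2.
core_table = [
    CoreEntry(1, 70, None),
    CoreEntry(2, 10, "VM1"),
    CoreEntry(3, 80, None),
    CoreEntry(4, 10, None),
]

# A controller-like step installs an entry steering VM1's flow.
flow_table = {"vm1-flow": least_loaded_free_core(core_table)}

print(lookup_softirq_core(flow_table, "vm1-flow"))    # 4
print(lookup_softirq_core(flow_table, "other-flow"))  # None
```

Core 2 has the same low load as core 4, but it is skipped because it runs VM1, which avoids the flow/VM collision.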

Implementation

VSE exploits Receive Packet Steering (RPS). VSE determines the core for SWIRQ by using the flow and core tables, and notifies RPS of the determined core number. RPS then executes SWIRQ on the notified core.

[Figure: VSE matches VM1's flow to the action SWIRQ:4 and passes core 4 to RPS, which runs the protocol stack's SWIRQ on core 4.]
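The hand-off to RPS can be pictured as an override of hash-based steering. The following is a simplified model rather than kernel code: `hash_steered_core` and `softirq_core` are hypothetical names, and the way the hash is scaled onto the core list is only an approximation of how RPS chooses a CPU when no override exists.

```python
from typing import Optional, Sequence


def hash_steered_core(flow_hash: int, cores: Sequence[int]) -> int:
    """Scale a 32-bit flow hash onto the list of allowed cores."""
    return cores[(flow_hash * len(cores)) >> 32]


def softirq_core(flow_hash: int, cores: Sequence[int],
                 vse_core: Optional[int] = None) -> int:
    """Use the core chosen by VSE if there is one, else fall back to the hash."""
    if vse_core is not None and vse_core in cores:
        return vse_core
    return hash_steered_core(flow_hash & 0xFFFFFFFF, cores)


cores = [1, 2, 3, 4]  # core numbers as used on the slides

print(softirq_core(0x12345678, cores, vse_core=4))  # 4
print(softirq_core(0x00000000, cores))              # 1
print(softirq_core(0xFFFFFFFF, cores))              # 4
```

In this model a flow without a matching entry keeps its hash-selected core, so only flows that the flow table names explicitly are moved.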

Performance Evaluation

The network environment

[Figure: Physical Server1 and Physical Server2 are connected by a 40 GbE network. Each server hosts VM1 and VM2 behind a virtual switch. Iperf clients on one server send UDP traffic to Iperf servers on the other, tunneled with VXLAN + AES.]

Machine Specifications

| | Physical Server1 | Physical Server2 | VM |
|---|---|---|---|
| OS | CentOS 6.5 (2.6.32) | CentOS 6.5 (2.6.32) | ubuntu-server 12.04 |
| CPU | Core i5 (4 cores) | Core i7 (4 cores) | 1 core |
| Memory | 16 Gbytes | 16 Gbytes | 2 Gbytes |
| Virtual Switch | Open vSwitch 1.11 | Open vSwitch 1.11 | - |
| Network | 40GBASE-SR4 | 40GBASE-SR4 | - |
| NIC | Mellanox ConnectX(R)-3 | Mellanox ConnectX(R)-3 | virtio-net |
| MTU | 1500 bytes | 1500 bytes | 1500 bytes |

Evaluation Details

Evaluation models

| Model | Function | HWIRQ target |
|---|---|---|
| default | RSS: off, VSE: off | core4 |
| rss | RSS: on, VSE: off | core1~4 |
| vse | RSS: on, VSE: on | core1~4 |

Iperf clients

| Parameter | Value |
|---|---|
| Protocol | UDP (Tunneling: VXLAN + AES) |
| Packet sizes | 64/1400/8192 bytes |
| Total evaluation time | 20 minutes |
| The number of flow generations | 20 times |
| Flow duration time | 1 minute |

Results: Total Throughput of Two VMs

[Figures: total throughput (Mbps) over time (0 to 20 minutes) for the default, rss, and vse models, with one panel each for 64-byte, 1400-byte, and 8192-byte packets, plus the ratio of SWIRQ per core (core1 to core4).]

VSE distributed the packet processing load properly. All fragmented packets were handled on a single core.
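The fragmentation remark applies to the 8192-byte case, because that size exceeds the 1500-byte MTU listed in the evaluation setup. A rough count of fragments per datagram is sketched below. It assumes that the Iperf packet size is the UDP payload, that the traffic is plain IPv4 with a 20-byte header and an 8-byte UDP header, and it ignores the extra VXLAN + AES tunnel overhead. The function name `ipv4_fragment_count` is hypothetical.

```python
import math


def ipv4_fragment_count(udp_payload, mtu=1500, ip_header=20, udp_header=8):
    """Estimate how many IPv4 fragments one UDP datagram needs."""
    # Fragment data must be a multiple of 8 bytes (except in the last fragment).
    per_fragment = (mtu - ip_header) // 8 * 8
    datagram = udp_payload + udp_header
    return math.ceil(datagram / per_fragment)


for size in (64, 1400, 8192):
    print(size, "bytes ->", ipv4_fragment_count(size), "fragment(s)")
# 64 bytes -> 1 fragment(s)
# 1400 bytes -> 1 fragment(s)
# 8192 bytes -> 6 fragment(s)
```

Under these assumptions only the 8192-byte datagrams are split, into six fragments each, which matches the slide singling out that case.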

Conclusion and Future Work

Conclusion: We proposed VSE, which distributes the load of received-packet processing. Throughput can be improved by using VSE.

Future work: implement the protocol between a controller and VSE, and adaptively change the SWIRQ target based on the current core load.