Web Crawlers and Their Types

Web crawlers, also known as spiders or search engine bots, automatically search and index content across the Internet. They are programs that follow links from one webpage to another, downloading content and building indexes. Python tools such as Beautiful Soup4, Scrapy, and Selenium each offer different features for crawling and data extraction.


Presentation Transcript

Crawler (An Suvin) 2022-11-18

Table of Contents
1. What is a crawler?
2. Types of crawlers
3. ISF site crawlers
4. Code analysis

1. What is a crawler? A crawler is a program that follows links from one webpage to another in order to search automatically. Web crawlers, also known as spiders or search engine bots, download content from across the Internet and create indexes.

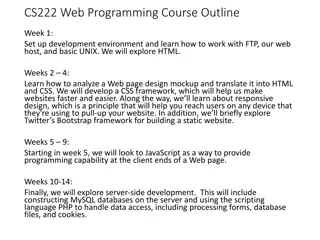

2. Types of crawlers
01 Beautiful Soup4
02 Scrapy
03 Selenium

2_1. Scrapy: A framework developed for crawling. Various features and plug-ins are available: parallel processing, 'robots.txt' compliance, and download speed control can all be configured. Drawback: plug-in compatibility is poor.

+) Robots.txt: The robots.txt file tells search engine crawlers which URLs on a site they may access. It is located at the root of the site, for example http://www.example.com/robots.txt

+) Naver's robots.txt: http://www.naver.com/robots.txt
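As a minimal sketch of how a crawler might honor these rules, Python's standard `urllib.robotparser` module can decide whether a URL may be fetched. The robots.txt content, the `my-crawler` user agent, and the `build_parser` helper below are hypothetical, chosen only so the example runs offline; they are not taken from Naver's or any real site's file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs without network access
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Build a parser from robots.txt text instead of downloading it."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A public page is allowed; anything under /private/ is not
can_fetch_public = parser.can_fetch("my-crawler", "http://www.example.com/index.html")
can_fetch_private = parser.can_fetch("my-crawler", "http://www.example.com/private/data.html")
print(can_fetch_public, can_fetch_private)
```

Against a live site, the same parser would normally be pointed at the real file with `set_url(...)` followed by `read()` before calling `can_fetch`.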

2_2. Beautiful Soup4: A Python library for extracting information from HTML and XML files. Use Requests or urllib to download the HTML, then extract the data with bs4. It is suitable for static pages, because it works on the HTML exactly as downloaded from the server.

2_3. Selenium: A framework used for automation testing. It enables crawling of data generated by JavaScript rendering. Because the HTML is parsed in the browser, there is less need for Beautiful Soup4. Drawbacks: it is slow and uses a large amount of memory; running multiple processes can improve the speed.

3_1. Crawler Flow
1. Before starting the crawler, check the data
2. Save crawled information to a dictionary
3. Save data to Excel

3_2. Site Analysis
1. Career
2. Title (e.g. Rhine Ruhr 2025 . . .)
3. Employment
4. Date of Apply
5. Date of Write
Content = items 3 to 5

4. Code Analysis

    ##### Before starting the crawler, check the data
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Selenium 4 takes the driver path through a Service object
    driver = webdriver.Chrome(service=Service('Chrome Driver Path'))
    driver.get("https://sport-strategy.org/seek_job")
    driver.implicitly_wait(5)

    # Check the total number of pages: jump to the last page and read its number
    end_button = driver.find_element(By.CSS_SELECTOR, '.pg_page.pg_end')
    end_button.click()
    end_page_num = driver.find_element(By.CSS_SELECTOR, '.pg_current.pg_page.pg-for-css')
    total_page = int(end_page_num.text)
    print("total number of pages :", total_page)  # 193 at the time of the presentation

    # Go back to the first page to start crawling
    start_button = driver.find_element(By.CSS_SELECTOR, '.pg_page.pg_start')
    start_button.click()

4_1. implicitly_wait vs. sleep
implicitly_wait(N): waits up to N seconds for the element being looked up; if it becomes available within N seconds, the script moves on to the next step immediately.
sleep(N): always waits the full N seconds.
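The difference can be illustrated without a browser. The sketch below is a conceptual model of "wait up to N seconds, continue as soon as ready" versus a fixed sleep; it is not Selenium's implementation, and `wait_until` and `element_is_ready` are hypothetical names introduced for the illustration.

```python
import time


def wait_until(condition, timeout, poll_interval=0.05):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Simulated "element" that becomes available 0.2 s from now
ready_at = time.monotonic() + 0.2


def element_is_ready():
    return time.monotonic() >= ready_at


# Polling wait: returns shortly after the element is ready, well before the 5 s limit
start = time.monotonic()
wait_until(element_is_ready, timeout=5)
polled_elapsed = time.monotonic() - start

# Fixed sleep: always takes the full duration, ready or not
start = time.monotonic()
time.sleep(0.5)
slept_elapsed = time.monotonic() - start

print(f"polling wait: {polled_elapsed:.2f} s, fixed sleep: {slept_elapsed:.2f} s")
```

The polling version finishes soon after the condition becomes true, which is why an implicit wait usually costs less time than a fixed sleep of the same length.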

4_2. find_element vs. find_elements
find_element: returns a single web element; if several elements match the same locator, it returns the first one. If nothing matches, it raises NoSuchElementException.
find_elements: returns a list of all matching web elements (indexed from 0). If nothing matches, the list is empty.

4_3. By property
Usage: find_element(By.<property>, '<property value>')
Available properties: ID, NAME, XPATH, TAG_NAME, LINK_TEXT, CLASS_NAME, PARTIAL_LINK_TEXT, CSS_SELECTOR

4_3. By property: CSS_SELECTOR
Selects elements using a CSS selector. Tag names, multiple classes, and ID selectors can be combined.
Example: find_element(By.CSS_SELECTOR, '.pg_page.pg_end')

4_3. By property: XPATH
XPath makes it easy to reach one specific element. When several elements share the same properties, use XPath to tell them apart.
Example: find_element(By.XPATH, '//*[@id="fboardlist"]/div/div[1]/div[1]')
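To see how the positional steps in such an expression pick one card, here is a small offline sketch using the standard library's `xml.etree.ElementTree`, which supports a limited subset of XPath. The markup in `PAGE` and the `career_of_card` helper are hypothetical stand-ins, not the real structure of the crawled site.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: two "cards" inside the element with id="fboardlist"
PAGE = """
<body>
  <form id="fboardlist">
    <div>
      <div><div>Career A</div><div>Title A</div></div>
      <div><div>Career B</div><div>Title B</div></div>
    </div>
  </form>
</body>
""".strip()

root = ET.fromstring(PAGE)


def career_of_card(tree, card_number):
    """Return the text of the first <div> inside the given card (1-based), or None."""
    # ElementTree paths are relative to the starting element, hence the leading "."
    path = f".//*[@id='fboardlist']/div/div[{card_number}]/div[1]"
    node = tree.find(path)
    return None if node is None else node.text


print(career_of_card(root, 1))
print(career_of_card(root, 2))
print(career_of_card(root, 3))  # no third card in this sample
```

Changing only the index in `div[...]` moves from one card to the next, which is the same idea the crawler relies on when it formats the card number into the XPath string.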

    ##### Save crawled information to dictionary
    dic = {}
    d_index = 0          # running index of saved cards
    CARDS_PER_PAGE = 12  # a page shows up to 12 cards

    # Visit every page from first to last, and every card within each page
    for page in range(1, total_page + 1):
        if page > 1:
            # Page 1 is already loaded; later pages are opened by URL.
            # The implicit wait set earlier stays in effect for the whole session.
            driver.get(f'https://sport-strategy.org/seek_job?page={page}')
        title = driver.find_elements(By.CSS_SELECTOR, '.list__item__title')
        content = driver.find_elements(By.CSS_SELECTOR, '.list__item__bot__content')
        content_num = 0  # each card owns three consecutive content fields
        for card in range(CARDS_PER_PAGE):
            career = driver.find_elements(By.XPATH, f'//*[@id="fboardlist"]/div/div[{card + 1}]/div[1]')
            try:
                dic[d_index] = [title[card].text, career[0].text, content[content_num].text,
                                content[content_num + 1].text, content[content_num + 2].text]
            except IndexError:
                # The card does not exist, so this page is finished
                break
            d_index += 1
            content_num += 3

    print("Crawling is over.")
    print("I'll save it in Excel")
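The indexing in the loop above depends on every card contributing exactly three consecutive entries to the flat `content` list. A browser-free sketch of that grouping step follows; `group_cards` and the sample strings are hypothetical and only illustrate the idea.

```python
def group_cards(titles, careers, content, fields_per_card=3):
    """Combine per-card titles and careers with a flat list of content fields."""
    records = {}
    for card, (title, career) in enumerate(zip(titles, careers)):
        start = card * fields_per_card
        fields = content[start:start + fields_per_card]
        if len(fields) < fields_per_card:
            break  # incomplete card: stop, as the crawler does when a card is missing
        records[card] = [title, career, *fields]
    return records


# Hypothetical sample data for two cards
titles = ["Title A", "Title B"]
careers = ["Career A", "Career B"]
content = [
    "Full-time", "2022-11-01", "2022-10-20",   # card 0
    "Part-time", "2022-12-01", "2022-11-05",   # card 1
]

records = group_cards(titles, careers, content)
for index, row in records.items():
    print(index, row)
```

Slicing the flat list in steps of three plays the same role as advancing `content_num` by 3 after each saved card.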

    ##### Save data to Excel
    import pandas as pd

    list_number = list(range(1, d_index + 1))     # row labels 1..N, one per card
    rows = [dic[i] for i in range(len(dic))]      # one 5-item row per saved card

    df = pd.DataFrame(rows, index=list_number,
                      columns=['Title', 'Career', 'Employment', 'Date of Apply', 'Date of Write'])

    with pd.ExcelWriter('excel file path') as writer:
        df.to_excel(writer, sheet_name='sheet1')
    print("The file has been saved.")

Result