Decision Trees for Effective Data Analysis

Learn about decision trees, a tree-structured model for classification, regression, and probability estimation. Discover how decision trees can be effective in scenarios with variable interactions and fewer relevant features. Explore the structures, tests, leaf types, and optimizations associated with decision trees for comprehensive data analysis.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

Decision Trees Geoff Hulten

Overview of Decision Trees A tree structured model for classification, regression and probability estimation. CART (Classification and Regression Trees) Can be effective when: The problem has interactions between variables There aren t too many relevant features (less than thousands) You want to interpret the model to learn about your problem Despite this, simple decision trees are seldom used in practice. Most real applications use ensembles of trees (which we will talk about next week).

Reminder: Components of Learning Algorithm Model Structure Loss Function Optimization Method

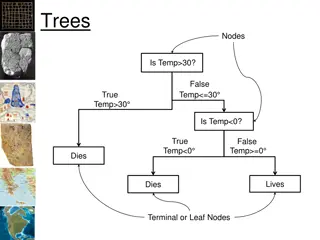

Structure of a Decision Tree Internal nodes test feature values One child per possible outcome Leaves contain predictions Humidity Outlook Wind Prediction Normal Rain Strong High Sunny Normal

Decision Trees: Basic Tests ??= 0? Binary Feature False True Categorical Feature ??? ?????1 ?????? Numeric Feature ??< 101.2? False True

Decision Trees: Leaf Types Classification ? = 1 Regression ? = 101.2 Probability Estimate p ? = 0 = .3 ? ? = 1 = .3 ? ? = 2 = .4

Summary of Decision Tree Structure Supports many types of features and predictions Can represent many functions (may require a lot of nodes) Complexity of model can scale with data/concept

Not Not the Loss Function the Loss Function for Decision Trees ? 1 ? ?=1 ???? ?^,? = 0 ?? ?^= ? ???? 1 Training set error rate Using this to: Give a simple example of optimizing Give an example of why this fancy log(blah, blah) stuff matters

An Example of Growing Decision Trees ?1= 1? ?2= 1? False False True True ? = 0 ? = 1 ? = 0 ? = 1 ? = 0 ? = 1 ? = 0 ? = 1 ?3= 1? False True ? = 0 ? = 1 ? = 0 ? = 1

Trouble with Training Set Error Error 33% Error 33% Error = 0%

Entropy of a distribution ??????? ? = ? ? = ? ???2(? ? = ? ) ? {?} ? = 0 ? = 1 ???????(?) 10 0 5 5 For Binary Y 1 0.9 0.8 0.7 Entropy(S) 0.6 0.5 0.4 0.3 0.2 0.1 0 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 P(y=0)

Loss for Decision Trees ? 1 ? ?=1 ???? ? = ???????(???? ??) ? = 0 ? = 1 4 4 ?1= 1? False True For Binary Y 1 0.9 ? = 0 ? = 1 ? = 0 ? = 1 0.8 3 1 1 3 0.7 Entropy(S) 0.6 0.5 0.4 ??= 1? 0.3 0.2 False True 0.1 0 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 ? = 0 ? = 1 ? = 0 ? = 1 P(y=0) 4 0 0 4

Decision Tree Optimization Loss(S, ??) lossSum = 0 for ???? in SplitByFeatureValues(S, ??) lossSum += Entropy(???? ) * len(????) return lossSum / len(S) InformationGain(S, ??) return Entropy(S) Loss(S, ??) BestSplitAtribute(S) informationGains = {} for i in range(# features): informationGains[i] = InformationGain(S, i) if AllEqualZero(informationGains): return <NONE> return FindIndexWithHighestValue(informationGains)

Splitting Non-binary Features Categorical Features Numerical Features One child per possible value Split at each observed value One vs the rest Split observed range evenly Set vs the rest Split samples evenly

Stopping Criteria? When no further split improves Loss Run out of features Min loss reduction to split Leaf is pure When the model is complex enough Number of nodes in the tree Maximum depth of the tree Loss penalty for complexity Hybrid Very common Decision trees tend to overfit When there isn t enough data to make good decisions Min number of samples to grow Pruning?

Predicting with Decision Trees Take the new sample, pass it through the tree until it reaches a leaf: Binary classification Return most common label among training samples at the leaf Probability estimate Return (smoothed) probability distribution defined by samples at leaf Categorical classification Return most common label among training samples at the leaf Regression Return the most common value at leaf Linear regression among samples at leaf

Interpreting Decision Trees Understanding Features Near the root Prominent paths Taken by many samples Used many times Highly accurate Big loss improvements in aggregate Taken by important (expensive) samples

Decision Tree Algorithm Recursively grow a tree Partition data by best feature Reduce entropy Parameters How to partition by features (numeric) How to control complexity Flexible and simple Feature types Prediction types Base to important algorithms AdaBoost (stumps) Random forests Gradient boosting machines