HEP Data Production and Performance Study at HPC-TianheII

"Explore the experience and challenges in HEP data production at HPC-TianheII, focusing on motivation, container-based virtualization, system environment, and data storage. Learn about the highly heterogeneous resources and future outlook of this high-performance computing facility."

Presentation Transcript

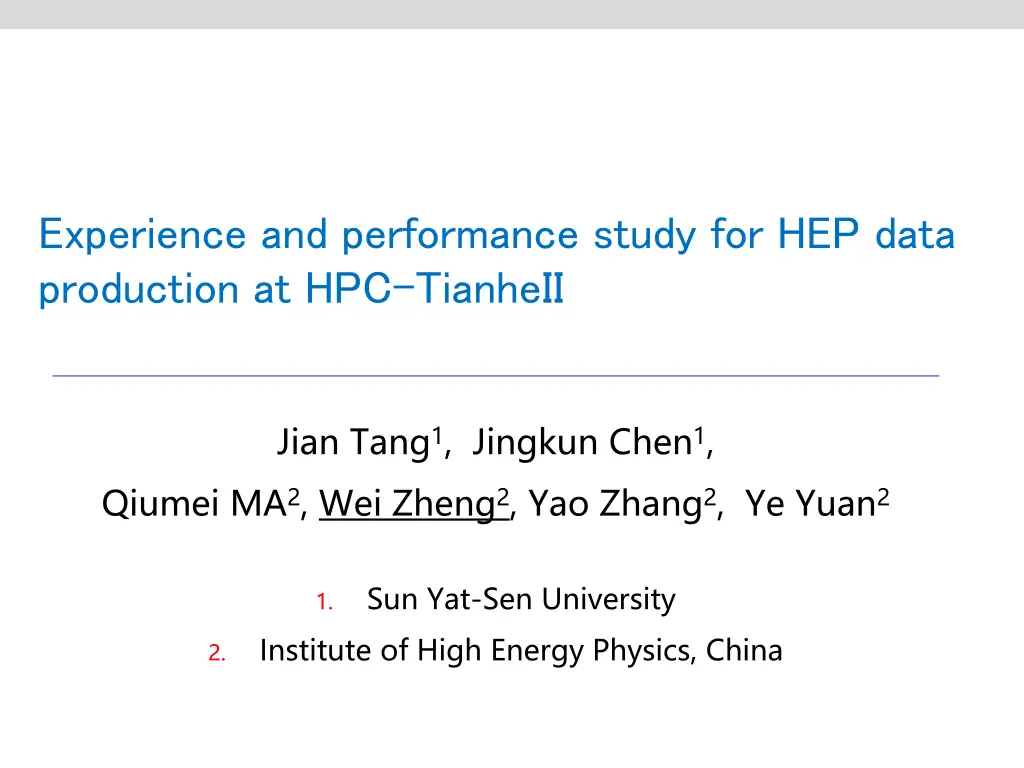

Experience and performance study for HEP data production at HPC-TianheII

Jian Tang (1), Jingkun Chen (1), Qiumei MA (2), Wei Zheng (2), Yao Zhang (2), Ye Yuan (2)

1. Sun Yat-Sen University
2. Institute of High Energy Physics, China

Outline

- Motivation
- Introduction
- Problems & Solutions
- Container-based Virtualization
- Current status
- Next work & Future

2025/8/21

Motivation

- The significantly increased computational requirements of current and future HEP experiments place new demands on computing resources.
- The increasing availability of High Performance Computing (HPC) and cloud computing resources gives us an opportunity to extend our computing resources.
- Future resources will be highly heterogeneous:
  - HPC facilities
  - cloud computing (commercial and research)
  - volunteer computing
  - campus clusters
- Our work aims to study the exploitation of heterogeneous processing resources for HEP offline analysis.

Challenges in using the new types of computing resources

- Very particular setups and policies
- Different hardware structure and software setup
- Scaling
- Dynamic data management and data access
- High throughput model
- High bandwidth requirements

Introduction to Tianhe-2 (Milky Way 2)

- Located at Sun Yat-sen University, National Supercomputing Center in Guangzhou, China, http://en.nscc-gz.cn/
- 16,000 nodes, 3,120,000 CPU cores in total
- 30.65 Pflops (world's fastest from 2013 to 2015)
- 88 GB memory per node (CPU: 64 GB, MIC: 24 GB)
- 12.4 PB disk array

System environment of Tianhe-2

OS & Software
- Linux version 3.10.0-514.el7.x86_64 (CentOS 7)
- gcc version 4.8.5
- Multi-threaded compilation supported by default
- OpenMP and MPI
- Software is loaded using module

Network
- No Internet connection
- Access through VPN, with scp and ssh using key-based login

File system and data storage

- Space for software: 50 GB quota
- Production data: 2 TB quota
- Data needs to be transferred to other sites; iRODS is not supported yet
- scp for upload and download
- SFTP clients such as Xmanager, FileZilla, WinSCP, FlashFTP

Job submission system

Resources
- Limits on the number of nodes and cores
- 500 to 5,000 nodes maximum, 24 cores per node
- 12,000 to 120,000 cores maximum allowed

Job submission
- Slurm system with array support
- Node scheduling
- 20 jobs maximum
- No time limit
- 617 nodes currently shared with other users
- About 2,000,000 CPU hours left
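The core limits on this slide follow from the node limits at 24 cores per node. A minimal sketch of that arithmetic is given below; it also estimates how long the remaining allocation would last with every core busy. The function names are hypothetical, and the wall-clock estimate assumes that the quoted CPU hours are core hours.

```shell
#!/usr/bin/env bash
# Figures taken from the slide; function names are illustrative only.
CORES_PER_NODE=24
CPU_HOURS_LEFT=2000000

# Total cores for a given number of nodes.
total_cores() {
    echo $(( $1 * CORES_PER_NODE ))
}

# Whole wall-clock hours the remaining budget lasts at full occupancy
# (assumes "CPU hours" means core hours; integer division rounds down).
wall_hours_left() {
    echo $(( CPU_HOURS_LEFT / ($1 * CORES_PER_NODE) ))
}

echo "500 nodes:  $(total_cores 500) cores, $(wall_hours_left 500) h"
echo "5000 nodes: $(total_cores 5000) cores, $(wall_hours_left 5000) h"
```

Under these assumptions the budget lasts roughly a week of continuous running at the lower node limit and well under a day at the upper one.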

HTC vs HPC

HTC: High Throughput Computing
- Many jobs, typically similar but not highly parallel
- Jobs do not communicate with each other
- Can use heterogeneous resources; low requirements on environment reliability
- Easy job migration and cross-domain computing

HPC: High Performance Computing
- Requires very high computational performance
- A single job can run in parallel across nodes, with frequent communication between processes
- Requires intensive computing resources
- High requirements on environment consistency and reliability; low fault tolerance

Migration to HPC

Problems of direct installation
- Tianhe-2 supports only CentOS 7, unlike our local cluster, which runs SL6 (equivalent to CentOS 6); some software installation is limited by the OS
- Many base software packages and external libraries are missing
- The MySQL server does not run reliably: the mysql process is often killed, and the server port is occupied
- Technical support is not rapid enough

Migration Solution

Solution
- Use container virtualization technology

Container advantages
- Permission to install almost any required software in the container
- Not affected by the operating system environment of the physical machine
- Convenient debugging and testing
- The job computing environment is highly consistent
- Various types of container images already exist at IHEP

Requirements of BESIII container migration

- Singularity software is required to run the BESIII software image file
  - Singularity version 2.6 or above
  - Non-root users can mount the home and data disks writable, and have read-only access to the mounted system directories /usr and /var
  - User sysu_jtang_2 can execute singularity commands
- One Docker/Singularity node
  - Runs the mysql5.0 container
  - User sysu_jtang_2 should be in the docker group so that it can open socket port 3306
  - Port 3306 can be accessed by the login node and the compute node
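The "version 2.6 or above" requirement can be checked on a node before any image is run. The following is a sketch only: `version_at_least` is a hypothetical helper, it assumes a `sort` that supports `-V` (version sort), and because the exact output of `singularity --version` differs between releases it extracts only the first dotted number.

```shell
#!/usr/bin/env bash
# True (exit 0) when version $1 >= version $2.
# Relies on `sort -V` (version sort); an assumption about the host.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

REQUIRED="2.6"   # minimum version stated in the requirements

if command -v singularity >/dev/null 2>&1; then
    # Keep only the first dotted number from the version string.
    found=$(singularity --version | grep -oE '[0-9]+(\.[0-9]+)+' | head -n 1)
    if version_at_least "$found" "$REQUIRED"; then
        echo "singularity $found satisfies >= $REQUIRED"
    else
        echo "singularity $found is older than $REQUIRED"
    fi
else
    echo "singularity not found on PATH"
fi
```

Version sort is used rather than a plain string comparison so that a release such as 2.10 would rank above 2.6.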

Singularity vs Docker

Singularity
- Lighter and suited to HPC, MPI, GPU and InfiniBand; focused on computation
- User permissions are consistent inside and outside the container
- /dev, /sys and /proc are mounted automatically

Docker
- Focuses more on providing services
- Convenient and stable deployment and service
- More isolation than Singularity

Workflow of container virtualization

- Preparation for migration
- Test and debug in the container virtualization environment at IHEP
- Create complete and usable container images

Build BESIII container image

Software container image
- Base image: BaseSL69 -> WorkNodeSL69
- BOSS install
  - Location: /afs/ihep.ac.cn/bes3/offline/Boss/release_number
  - External libraries: /afs/ihep.ac.cn/bes3/offline/ExternalLib

Mysql container image
- Base image: SL55
- Mysql 5.0 server
- Database size: 3.4 GB

Boss running on Tianhe-2

- Boss version: 7.0.4
- Automatic writable mount of the user home disk /BIGDATA1/sysu_jtang_2

Database running on Tianhe-2

Mysql server
- Start the mysql5.0 Singularity instance on the login node
- Runs as non-root user sysu_jtang_2
- Port 3306, accessible from the login and compute nodes
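Whether port 3306 is really reachable from a given login or compute node can be tested without a MySQL client. The sketch below uses bash's `/dev/tcp` pseudo-device together with coreutils `timeout`; `port_open` is a hypothetical helper, and `DB_HOST` is a placeholder that would need to point at the node running the MySQL instance.

```shell
#!/usr/bin/env bash
# Exit 0 when a TCP connection to host $1, port $2 succeeds within 3 seconds.
# Uses bash's /dev/tcp pseudo-device and coreutils `timeout`.
port_open() {
    timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

DB_HOST="${DB_HOST:-127.0.0.1}"   # hypothetical; set to the node running MySQL
DB_PORT=3306                      # port named on the slide

if port_open "$DB_HOST" "$DB_PORT"; then
    echo "port $DB_PORT on $DB_HOST is reachable"
else
    echo "port $DB_PORT on $DB_HOST is not reachable"
fi
```

Running the same check on the login node and on one compute node would cover both access paths mentioned above.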

Job submission

Job submission mode
- Exclusive node
- Resource utilization: job number N * Nodes * Cores

    [root@tianhe ~]# cat jobrun.sh
    source /home/BESIII/cmthome-7.0.4/setupCMT.sh
    source /home/BESIII/cmthome-7.0.4/setup.sh
    source /home/BESIII/workarea/7.0.4/TestRelease/TestRelease-00-00-86/cmt/setup.sh
    cd /home/BESIII/workarea/7.0.4/TestRelease/TestRelease-00-00-86/run
    boss.exe jobOptions_sim.txt

    [root@tianhe ~]# cat submit.sh
    singularity exec --bind ${HOME}:/home WorkNode69forBossV2.img /home/Myjob.sh

    [root@tianhe ~]# yhrun -n 240 -N 10 -p bigdata $HOME/submit.sh
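The task count in the submission command matches exclusive use of the nodes: 10 nodes at 24 cores each gives 240 tasks. A small dry-run sketch of how such a command line could be derived for other node counts follows. `build_yhrun_cmd` is a hypothetical helper, and it assumes that the `p bigdata` in the transcript is the partition option `-p bigdata`, as in Slurm's `srun`.

```shell
#!/usr/bin/env bash
CORES_PER_NODE=24   # cores per node, as quoted for Tianhe-2 in this talk

# Print a yhrun command line that fills every core of the requested nodes.
# Arguments: node count, partition name, script path.
# Assumes `-p` selects the partition, as in Slurm's srun.
build_yhrun_cmd() {
    echo "yhrun -n $(( $1 * CORES_PER_NODE )) -N $1 -p $2 $3"
}

# Dry run: print the command rather than executing it.
build_yhrun_cmd 10 bigdata "$HOME/submit.sh"
```

Printing the command first makes it easy to confirm the node and task counts before any allocation is consumed.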

Next work & Future

Next work
- Large-scale job running
- Performance testing and optimization
- Separate dependent software libraries from the image to reduce the image size
- Provide multiple versions of the BOSS image

Future
- Automatic data transmission
- Cross-domain scheduling: jobs can be scheduled to remote sites transparently
- GPU parallel applications
- More sites and resources will join in the future