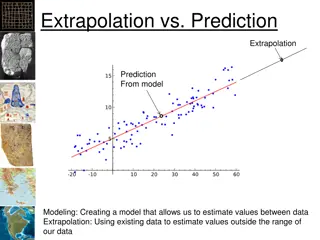

Understanding Machine Learning Extrapolation Limitations

Discover the limitations of machine learning models in extrapolating data beyond the original training range. Learn how tree-based methods like Random Forest and Boosted Models struggle with new data, and why neural networks also face challenges. Explore the implications for model deployment and testing.

Presentation Transcript

MACHINE LEARNING EXTRAPOLATION. Felice Russo, 2020

This presentation is a set of simple experiments I made to help clarify the extrapolation issue of machine learning methods. In these experiments I fit RF, NN, Boosted and XGBoost models to some super-simple artificial datasets with two columns, x and y, and then try to predict new values of y from values of x that are outside the original range of x.

An obvious limitation of RF, Boosted Forest and XGBoost shows up when predicting y from values of x outside the range of the original training set: they presume y will stay around the highest value of y in the original set. These tree-based methods (more detail in the next slides) basically can't extrapolate the way we'd find most intuitive, whereas the neural net does OK only for a simple linear trend.

The limitation of the tree-based methods in extrapolating to an out-of-sample range is obvious when we look at a single tree, here a single regression tree fit to a linear trend, as shown in the next slide. The tree algorithm uses the values of x to partition the data and allocates an appropriate value of y to each partition (this isn't usually done with only one explanatory variable, of course, but it makes it simple to see what is going on). So if x is less than 11, y is predicted to be 4; if x is between 11 and 28, y is 9; and so on. If x is greater than 84, then y is 31.

What happens in the single tree is basically repeated by the more sophisticated random forest, boosted forest and extreme gradient boosting models. Hence, no matter how high a value of x we give them, they predict y to be around 31.

The implication? Just bear in mind this limitation of tree-based machine learning methods: they won't handle new data well if it is outside the range of the original training data.
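The plateau described above can be reproduced with a very small hand-rolled regression tree. This is only a sketch under stated assumptions: the data (`y = 0.5 * x` for x from 1 to 100), the depth, and the helper names `sse`, `fit_tree` and `predict` are hypothetical and are not the models or data used in the slides.

```python
from statistics import mean

def sse(values):
    """Sum of squared errors of the values around their own mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def fit_tree(xs, ys, depth=3, min_leaf=2):
    """Fit a tiny one-feature regression tree; returns nested dicts."""
    # A leaf stores the mean of the y values that fall into it.
    if depth == 0 or len(xs) < 2 * min_leaf:
        return {"value": mean(ys)}
    pairs = sorted(zip(xs, ys))
    best = None
    # Try every split position that leaves at least min_leaf points per side.
    for i in range(min_leaf, len(pairs) - min_leaf + 1):
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        score = sse(left) + sse(right)
        if best is None or score < best[0]:
            best = (score, i)
    i = best[1]
    threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
    left_x, left_y = zip(*pairs[:i])
    right_x, right_y = zip(*pairs[i:])
    return {
        "threshold": threshold,
        "left": fit_tree(left_x, left_y, depth - 1, min_leaf),
        "right": fit_tree(right_x, right_y, depth - 1, min_leaf),
    }

def predict(tree, x):
    """Walk down the tree until a leaf is reached."""
    while "value" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["value"]

# Hypothetical noiseless linear trend (an assumption, not the slide's data).
xs = list(range(1, 101))
ys = [0.5 * x for x in xs]
tree = fit_tree(xs, ys)

# Every x beyond the training range falls into the same right-most leaf.
for x in (50, 100, 150, 1000):
    print(x, round(predict(tree, x), 2))
```

Because the right-most leaf has no upper bound on x, any value above the largest training x receives that leaf's mean, which is why the prediction stops growing.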

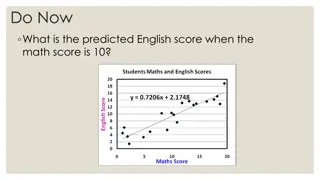

Suppose we have two variables, x and y, both time dependent. x is a linear function of t, while y = x ± e, where e is a random error.

| t | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| x | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| y | 3.70 | 4.43 | 3.56 | 5.49 | 3.81 | 3.05 | 3.66 | 5.38 | 3.83 | 4.31 |

Let's divide the dataset, as usual, into 3 groups. The Test group is taken from the last part of the time window so that it is completely unknown to the machine learning algorithm used. A second test uses the typical Training/Validation/Test groups chosen at random.
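The two ways of forming the groups can be sketched as follows, using the ten (t, x, y) rows listed above. The group sizes (6 training, 2 validation, 2 test), the seed, and the function names `chronological_split` and `random_split` are assumptions made for illustration; the slides do not state the proportions used.

```python
import random

# (t, x, y) rows taken from the example values in the slide.
t = list(range(1, 11))
x = list(range(1, 11))
y = [3.70, 4.43, 3.56, 5.49, 3.81, 3.05, 3.66, 5.38, 3.83, 4.31]
rows = list(zip(t, x, y))

def chronological_split(rows, n_train, n_valid):
    """Training first, validation next, test from the end of the time window."""
    ordered = sorted(rows)  # tuples sort by t, their first element
    train = ordered[:n_train]
    valid = ordered[n_train:n_train + n_valid]
    test = ordered[n_train + n_valid:]
    return train, valid, test

def random_split(rows, n_train, n_valid, seed=0):
    """Shuffle the rows first, so all three groups cover the whole time window."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:]
    return train, valid, test

# Assumed sizes: 6 training rows, 2 validation rows, 2 test rows.
c_train, c_valid, c_test = chronological_split(rows, 6, 2)
r_train, r_valid, r_test = random_split(rows, 6, 2)

print("chronological test t:", [row[0] for row in c_test])
print("random test t:", sorted(row[0] for row in r_test))
```

With the chronological split every test row is later than every training row, so the model is forced to extrapolate; with the random split the test rows usually sit between training rows.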

Neural network with 2 layers and 3 activation functions. The NN model isn't able to extrapolate the behavior of test data it has never seen before, even though the data lie in the same range as the training/validation data.

Like the NN, the RF shows very low extrapolation capability.

If the 3 groups (training, validation and test) are chosen randomly, then the KNN model works very well, even though it will fail once deployed on new, never-seen data.

As with the NN, so with the RF: if the 3 groups (training, validation and test) are chosen randomly, then the model works very well, even though it will fail once deployed on new, never-seen data.

Again, with a different dependency between x and y, the NN model isn't able to extrapolate the behavior of test data it has never seen before, even though the data lie in the same range as the training/validation data. The NN extrapolates the trend seen in the training and validation data.

The RF too, again with a different dependency between x and y, isn't able to extrapolate the behavior of test data it has never seen before, even though the data lie in the same range as the training/validation data. The RF extrapolates by assigning the last value seen in the training/validation groups. This is expected, because RF is decision-tree based.

If the 3 groups (training, validation and test) are chosen randomly, then the RF model works very well, even though it will fail once deployed on new, never-seen data.

If the 3 groups (training, validation and test) are chosen randomly, then the NN too works very well, even though it will fail once deployed on new, never-seen data.
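Why a random split can look good while deployment fails can be illustrated with a toy comparison. The sketch below swaps in a 1-nearest-neighbor predictor as a simple stand-in for a piecewise-constant model; it is not the RF or NN from the slides, and the data (`y = 2x`), the 80/20 sizes, the seed and the helper names are all assumptions.

```python
import random

def nearest_neighbor_predict(train, x):
    """Return the y of the training point whose x is closest to the query."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def mean_abs_error(train, test):
    """Mean absolute error of the stand-in model on the test points."""
    errors = [abs(nearest_neighbor_predict(train, x) - y) for x, y in test]
    return sum(errors) / len(errors)

# Hypothetical noiseless linear trend (an assumption, not the slide's data).
data = [(x, 2.0 * x) for x in range(1, 101)]

# Chronological split: train on the first 80 points, test on the last 20.
chrono_train, chrono_test = data[:80], data[80:]

# Random split: same sizes, but the test points are scattered over the range.
shuffled = list(data)
random.Random(0).shuffle(shuffled)
rand_train, rand_test = shuffled[:80], shuffled[80:]

chrono_mae = mean_abs_error(chrono_train, chrono_test)
rand_mae = mean_abs_error(rand_train, rand_test)

print("chronological test MAE:", chrono_mae)
print("random test MAE:", rand_mae)
```

In the random split each test point has training neighbors on both sides, so the model only interpolates and the error stays small. In the chronological split every test point lies beyond the last training point, and the prediction stays frozen at the last value seen.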

Less complex model. If the relationship between y and x is linear, then the NN can predict future values well, provided the model isn't too complex (few layers and few activation functions).
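The simplest limiting case of a "less complex model" is a single linear unit with no hidden layer, which is just a straight-line fit. The sketch below uses a closed-form least-squares line as that stand-in; it is not the network from the slides, and the data (`y = 2x + 1`) and the name `fit_line` are assumptions.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Hypothetical training window: x from 1 to 80 on a noiseless linear trend.
train_x = list(range(1, 81))
train_y = [2.0 * x + 1.0 for x in train_x]
slope, intercept = fit_line(train_x, train_y)

# "Future" values outside the training range.
future_x = list(range(81, 101))
future_pred = [slope * x + intercept for x in future_x]

print("slope:", round(slope, 6), "intercept:", round(intercept, 6))
print("prediction at x = 100:", round(future_pred[-1], 6))
```

A model whose functional form matches the trend keeps following it outside the training range, which is consistent with the slide's observation for the linear case.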

KK performance Training, Validation and test chosen randomly

The Boosted Forest has problems with never-seen future values, exactly like the RF. The XGBoost algorithm behaves the same way.

No problem when the Training/Validation and Test groups are chosen randomly.

The Bootstrap RF has problems with never-seen future values, exactly like the RF. The XGBoost algorithm behaves the same way.