Machine Learning: Introduction to Nearest Neighbor and Distance Metrics

"Explore supervised learning topics like measuring performance, nearest neighbor, and distance metrics in machine learning. Understand the probability scenarios and get insights into loss and error functions for effective learning."

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

ECE 5424: Introduction to Machine Learning Topics: Supervised Learning Measuring performance Nearest Neighbor Distance Metrics Readings: Barber 14 (kNN) Stefan Lee Virginia Tech

Administrative Course add period is over If not enrolled, please leave. Virginia law apparently. (C) Dhruv Batra 2

HW0 HW0 is graded Average: 81% Median: 85% Max: 100% Min: 35% The lower your score, the harder you should expect to work. (C) Dhruv Batra 3

HW0 Question 1: Sam is an odd and not-so-honest person who carries around a bag containing 3 fair coins (heads and tails) and 1 coin which has heads on both sides (which he uses to cheat his friends out of small amounts of cash). He selects a coin randomly from the bag and flips it 4 times and the coin comes up heads each time. He bets you $10 the next flip will be heads. What is the probability the next flip will be heads? Only 1 coin is drawn from the bag, this coin is flipped 4 times. What is the probability of the 5th flip being heads? Want: P(H | 4H) P(H | 4 H) = P(H | fair) P(fair| 4H) + P(H | unfair) P(unfair|4H) P(fair | 4H) = P(4H | fair) P(fair) / P(4H) (Bayes Rule) P(4H) = P(4H | fair) P(fair) + P(4H | unfair) P(unfair) (C) Dhruv Batra 4

Recap from last time (C) Dhruv Batra 5

(C) Dhruv Batra 6 Slide Credit: Yaser Abu-Mostapha

Nearest Neighbor Demo http://www.cs.technion.ac.il/~rani/LocBoost/ (C) Dhruv Batra 7

Proportion as Confidence Gender Classification from body proportions Igor Janjic & Daniel Friedman, Juniors (C) Dhruv Batra 8

Plan for today Supervised/Inductive Learning (A bit more on) Loss functions Nearest Neighbor Common Distance Metrics Kernel Classification/Regression Curse of Dimensionality (C) Dhruv Batra 9

Loss/Error Functions How do we measure performance? Regression: L2 error Classification: #misclassifications Weighted misclassification via a cost matrix For 2-class classification: True Positive, False Positive, True Negative, False Negative For k-class classification: Confusion Matrix ROC curves http://psych.hanover.edu/JavaTest/SDT/ROC.html (C) Dhruv Batra 10

Nearest Neighbors (C) Dhruv Batra Image Credit: Wikipedia 11

Instance/Memory-based Learning Four things make a memory based learner: A distance metric How many nearby neighbors to look at? A weighting function (optional) How to fit with the local points? (C) Dhruv Batra Slide Credit: Carlos Guestrin 12

1-Nearest Neighbour Four things make a memory based learner: A distance metric Euclidean (and others) How many nearby neighbors to look at? 1 A weighting function (optional) unused How to fit with the local points? Just predict the same output as the nearest neighbour. (C) Dhruv Batra Slide Credit: Carlos Guestrin 13

k-Nearest Neighbour Four things make a memory based learner: A distance metric Euclidean (and others) How many nearby neighbors to look at? k A weighting function (optional) unused How to fit with the local points? Just predict the average output among the nearest neighbors. (C) Dhruv Batra Slide Credit: Carlos Guestrin 14

1-NN for Regression Here, this is the closest datapoint y x (C) Dhruv Batra Figure Credit: Carlos Guestrin 15

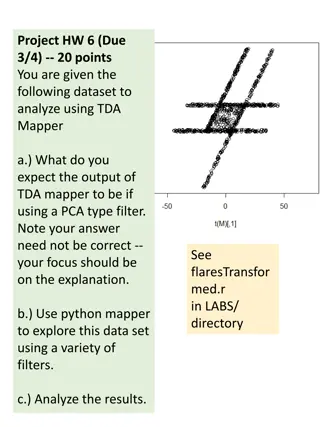

Multivariate distance metrics Suppose the input vectors x1, x2, xN are two dimensional: x1 = ( x11 , x12 ) , x2 = ( x21 , x22) , xN = ( xN1 , xN2 ). One can draw the nearest-neighbor regions in input space. Dist(xi,xj) = (xi1 xj1)2 + (xi2 xj2)2 Dist(xi,xj) =(xi1 xj1)2+(3xi2 3xj2)2 The relative scalings in the distance metric affect region shapes Slide Credit: Carlos Guestrin

Euclidean distance metric Or equivalently, A where Slide Credit: Carlos Guestrin

Notable distance metrics (and their level sets) Mahalanobis (non-diagonal A) Scaled Euclidian (L2) Slide Credit: Carlos Guestrin

Minkowski distance Image Credit: By Waldir (Based on File:MinkowskiCircles.svg) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons (C) Dhruv Batra 19

Notable distance metrics (and their level sets) Scaled Euclidian (L2) L1 norm (absolute) Mahalanobis (non-diagonal A) Linf (max) norm Slide Credit: Carlos Guestrin