Understanding Markov Chain Mixing Times and Total Variation Distance

Dive into the intricacies of Markov chain mixing times with an introduction to key concepts such as Total Variation Distance. Explore topics like Coupling, Convergence Theorem, and Measuring Distance from Stationary through informative examples and propositions. Follow along as the text delves into definitions, examples, easy computations, and proofs to enhance your understanding.

Download Presentation

Please find below an Image/Link to download the presentation.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author. If you encounter any issues during the download, it is possible that the publisher has removed the file from their server.

You are allowed to download the files provided on this website for personal or commercial use, subject to the condition that they are used lawfully. All files are the property of their respective owners.

The content on the website is provided AS IS for your information and personal use only. It may not be sold, licensed, or shared on other websites without obtaining consent from the author.

E N D

Presentation Transcript

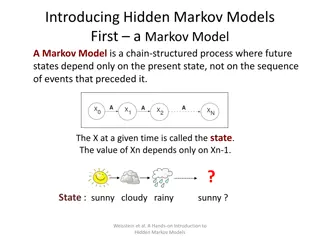

Markov Chains and Mixing Times By Levin, Peres and Wilmer Chapter 4: Introduction to markov chain mixing, Sections 4.1-4.4, pp. 47-61. Presented by Dani Dorfman

Planned topics Total Variation Distance Coupling The Convergence Theorem Measuring Distance from Stationary

Total Variation Distance

Definition Given two distributions ?,? on we define the Total Variation to be: ? ???= max ? ? ? ?(?)

Example 1 ? 1 ? Coin tossing frog. ? ? ? ? ? ? 1 ? ? ? ? = 1 ?, = ? + ? ? + ? Define ?0= 1,0 , ?= ??? (?) (= ? ??(?) ) An easy computation shows: ?? ??= ?= 1 ? ?? 0

An Easy Computation Induction: ? = 0: 0= 1 ? ?0 0 ? ? + 1: ?+1= ??+1? ? ? = 1 ? ??? + ? 1 ??? ? ? = ? 1 ? ? ??? + ? ? ? = 1 ? ? ??? + ? ? + ?= 1 ? ? ??? 1 ? ? ? ? = 1 ? ? ?

Proposition 4.2 Let ? and ? be two probability distributions on . Then: ? ???=1 2 ? ? ? ?(?) ? ? ? ?? ? ? ?(?) ??? ?? ?

Proof Define ? = ?|? ? ?(?) , Let ? be an event. Clearly: ? ? ? ? ? ? ? ? ? ? ? ? ? ? Parallel argument gives: ? ? ? ? ? ? ?? ? ? ?? ? ?? ? ?? Note that both upper bounds are equal. Taking ? = ? achieves the upper bounds, therefore: ? ???= ? ? ? ? = 1 2 + (? ?? ?(??) =1 ? ? ? ? 2 ? |? ? ?(?)|

Remarks From the last proof we easily deduce: ? ???= [? ? ? ? ] ? ,? ? ?(?) Notice that ?? is equivalent to ?1 norm and therefore: ? ??? ? ???+ ? ???

Proposition 4.5 Let ? and ? be two probability distributions on . Then: ? ???=1 2 max ? 1 sup ? ? ? ? ? ? ?(?) ? ? ?? ???

Proof Clearly the following function achieves the supremum: ? (?) = 1 ? ? ? ? 0 ? ? ? ? < 0 1 Therefore: 1 2 ? ? ? ?(?) +1 ? ? ? ? ? ? ?(?) = 1 2 ? ? ?(?) = 2 ? ,? ? ? ? 0 ? ,? ? ? ? <0 1 2 ? ???+1 ? ???= ? ??? 2

Coupling & Total Variation

Definition A coupling of two probability distributions ?,? is a pair of random variables ?,? s.t ? ? = ? = ? ? ,? ? = ? = ? ? . Given a coupling ?,? of ?,? one can define ? ?,? = ?(? = ?,? = ?) which represents the joint distribution ?,? . Thus: ? ? = ? ?,? ,? ? = ?(?,?) ? ?

Example ?,? represent a legal coin flip. We can build several couplings: (?,?) s.t (?,?) s.t ? = ? ? ? ? = ? = ? =1 1/2 0 ? ? ? = 0 ?,? ? ? = ?,? = ? =1 1/4 1/4 ? ? ? =1 4 2 1/4 1/4 0 ? = ? = 1/2 2

Proposition 4.7 Let ? and ? be two probability distributions on . Then: ? ???= inf ?,??(? ?)

Proof In order to show ? ??? inf ?,?? ? ? , ? note that: ? ? ? ? = ? ? ? ? ? ? ? ? ?,? ? ?(? ?) Thus it suffices to find a coupling ?,? ?.? ? ? ? = ? ???. ? =

Proof Cont. ? ?? ???

Proof Cont. Define the coupling (?,?) as follows: With probability p = 1 ? ??? take ? = ? according to the distribution ????. O/w take ?,? from ? = ? ? ? ? ? > 0 ,?? according to the distributions ??,??? correspondingly. Clearly: ? ? ? = ? ???

Proof Cont. All that is left is to define ??,???, ????: 1 ? ? ? ? ? ? ? ? > 0 ???? ? ? ? ? 0 ???? ?(?) = ? ??? 1 ? ??? 0 ? ? ? ? ??(?) = 0 ???(?) =min{? ? ,?(?)} 1 ? ??? Note that: ? = ?????+ 1 ? ?? , ? = ?????+ 1 ? ???

The Convergence Theorem

Theorem 4.9 Suppose that ? is irreducible and aperiodic, with stationary distribution ?. Then ? 0,1 ,? > 0 ?.?: ???, ??< ??? ? ma? ?

Lemma (Prop. 1.7) If ? is irreducible and aperiodic, then ? > 0 ?.?: ?,? ???,? > 0 Proof: Define ? ? = {?|???,? > 0}, then ? gcd ? ? ? is closed under addition. From number theory: ? ?? ? > ??? ? . From irreducibility ?,? ??,?< ? ?.? ???,??,? > 0. Taking ? ? + max ? ?? ends the proof. = 1.

Proof of Theorem 4.9 The last lemma gives us the existence of ? ?.? ?,? ???,? > 0. Let be the matrix with rows, each row is ?. ? > 0 ?.? ?,? ? ?,? ?? ? = ? ?,? . Let ? be the stochastic matrix that is derived from the equation: ??= 1 ? + ?? [? = 1 ?] Clearly: ? = ? = . By induction one can see: ? ???= 1 ?? + ????

Proof of Induction Case ? = 1 comes by definition. ? ? + 1: ??(?+1)= ?????= 1 ?? + ??????= 1 ?? + ????P?= 1 ?? + ???? 1 ? + ?? = 1 ?? + ??1 ? ?? + ??+1??+1= 1 ?? + ??1 ? + ??+1??+1= 1 ??+1 + ??+1??+1

Proof of Theorem 4.9 Cont. The induction derives: ???+?= ?????= Therefore, 1 ?? + ?????? ? ???+? = ??(???? ) Finally, ? ???+??, ? ?? ??

Standardizing Distance From Stationary

Definitions Given a stochastic matrix ?with it s ?, we define: ???, ??? ? ? = max ? ?,? ???, ??(?, ?? ? ? = max

Lemma 4.11 For every stochastic matrix ? and her stationary distribution ?: ? ? ? ? 2?(?) Proof: The second inequality is trivial from the triangle inequality. Note that: ? ? = ? ? ? ?(?,?).

Proof Cont. ??(?, ) ???= max ? ???,? ?(?) = ?(?) ???,? ??(?,?) max ? ? ???,? ???,? max ? ? ? ? ? ???,? ???,? ???, ???, ?(?)max = ? ? ?? ? ? ? ? = ?(?) ? ? ?

Observations ? ? = max ?? ??? ? ? ? = max ?P ???? ?,?

Lemma 4.12 The ? function is submultiplicative, ?.?. ?,? ? ? + ? ? ? Proof: Fix ?,? , Let (??,??) be the optimal coupling of ???, ,???, . Note that: ??+??,? = (????) ?,? = ? ? . ???,? ???,? = ? ????,? ? The same argument gives us: ??+??,? = ? ????,? .

Proof Cont. Note: ??+??,? ??+??,? = ? ????,? Summing over all ? yields: ??+??, ??+??, ? ????,? ??=1 ? ????,? ??(??,?) 2 ? 1 2 ? ? ? ? ?? ?? ? ? ????,? ??(??,?) ? ? ?

Remarks From submultiplicity we note that ?(?) is non-increasing. Also: ? ? ?? ? ?? ??(?)